Working with images

LuciadLightspeed's image processing framework allows you to access images built from raster data, and manipulate the data at the pixel level. This is typically useful for enhancing an image, or for highlighting certain aspects of the raster data when you display the image on screen. The image processing API offers various image manipulation operations that you can apply to raster data. The supported operations include the typical image operations required to work with:

-

Multi-band images, acquired from multi-spectral remote sensing equipment such as Earth-observing satellite sensors.

-

High Dynamic Range (HDR) images with a large bit depth

-

Low quality images that require enhancing

Using the available image processing operations, you can:

-

Determine the type of data in an image

-

Select which bands to use for visualizing a multi-spectral image

-

Apply tone mapping to images with a large dynamic range

-

Apply a convolution filter to sharpen an image

To process images in these ways, you need to use the ALcdImage objects decoded by your ILcdModelDecoder.

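All LuciadLightspeed raster model decoders provide domain objects that either are an image, and extend from ALcdImage, or have an image. To be able to apply image operations to the data, use ALcdImage.fromDomainObject() to retrieve the image from the domain object.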

To apply image operations, you use any one of the LuciadLightspeed image operators. By chaining these operators together, you can build sophisticated image processing graphs. You can apply the processing offline on disk, or interactively to the image data visualized in a Lightspeed view.

Once you have finished manipulating your image, you can encode and store it in the GeoTIFF format. This article discusses the main LuciadLightspeed image processing API modeling and visualization concepts and operations.

Using a model decoder

To model image data, you typically use a model decoder. LuciadLightspeed offers model decoders for loading and modeling data from an external source in the most commonly used image formats. To properly represent an image in a view, the following information is required:

-

The model reference that defines the coordinate system used to locate the image data on the Earth as described in LuciadLightspeed models. When you are decoding a model, you must make sure that the coordinate reference system of the data is decoded too, as the model’s reference. For more information, see Model references.

-

The image bounds that define the coordinates of the four corners of the image in the associated reference system.

Based on these requirements you can distinguish two types of raster formats:

-

Georaster formats that specify both the reference system and the raster bounds. The model decoders for georaster formats can create the raster model based on the source data. Examples of georaster formats are DMED, DTED, DEM, and GeoTIFF.

-

Non-georaster formats that define the raster bounds but do not specify the reference system. Examples of these formats are GIF, JPEG, TIFF, and BMP. For these raster formats you need to define a model reference yourself as described in Providing reference information for raster data.

Supported data formats gives an overview of all file formats that LuciadLightspeed supports and indicates if a model decoder is available for the format or not. For more information on a specific model decoder, refer to the API reference.

Each decoded image model contains a single element that is an extension of ALcdImage.

Image domain model

ALcdImage

ALcdImage is the main class to use when you are working with images and processing them. It represents a geographically bounded, pixel-oriented data source. You can create instances of ALcdImage using the TLcdImageBuilder class.

Each image provides metadata about the internal storage and the semantics of its pixel data via its getConfiguration() method. An image can contain measurement values such as elevations, plain RGB image data such as aerial photos, or multi-band data such as LandSat satellite imagery. The semantics of each band are represented by the class ALcdBandSemantics, and can be queried through the getSemantics() method. The band semantics provide the following information:

-

The data type defines the type of data stored in the band. This indicates whether the data is stored as unsigned bytes, signed integers, floating point values, and so on.

-

The number of significant bits indicates how many bits are used to represent the pixel value for non-floating point data types. For example, 12 of the 16 bits of a short may represent the pixel value.

-

The no data value is a number that is used to indicate that the pixel contains no data.

-

Whether or not the data has been normalized and what the normalization range is.

Two implementations of ALcdBandSemantics are available:

-

ALcdBandColorSemantics: represents a color band in an image. This class allows querying the type, color space, and component index. A color band can be a gray band, a Red-Green-Blue band, or a Red-Green-Blue-Alpha channel band.

-

ALcdBandMeasurementSemantics: represents a band with measurement values, for example elevation data. This class allows querying the unit of measure, an ILcdISO19103UnitOfMeasure, of the band.

ALcdBasicImage

ALcdBasicImage is the most basic image representation in LuciadLightspeed. It represents a uniform two-dimensional grid of pixels.

ALcdBasicImage is an opaque handle, which by itself does not expose the underlying pixel data directly. You need an ALcdImagingEngine to access the pixel values. Images can be created using TLcdImageBuilder, or they can be the result of a chain of image operators, applied to one or more other images, as explained in Creating and applying image operators.

Information on the size and layout of the image, such as the number of pixels in each dimension, can be obtained from the image’s Configuration.

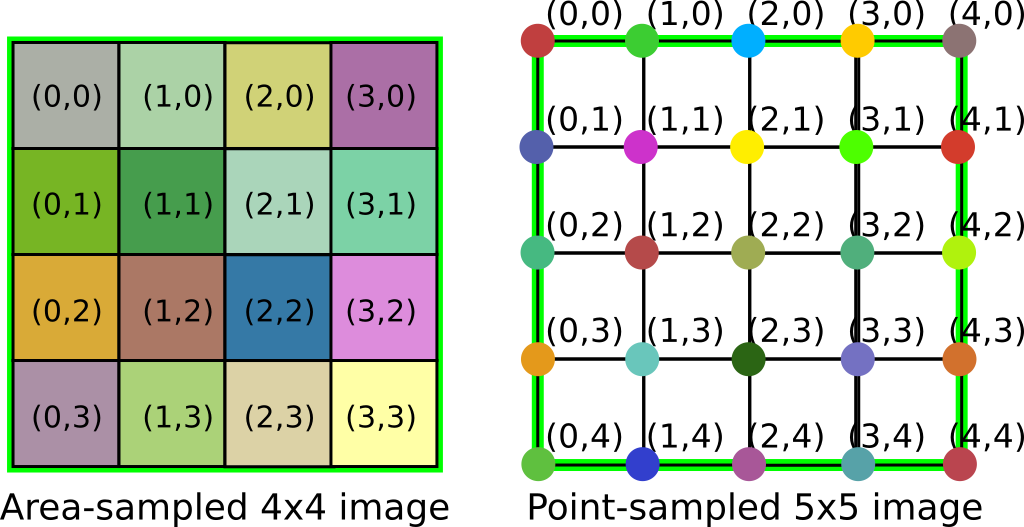

Determining whether an image is area-sampled or point-sampled

The image’s configuration object also indicates whether the image data is of an area-sampled or point-sampled nature, through the ELcdImageSamplingMode enumeration.

Color imagery obtained with a digital camera, be it RGB or multi-band imagery, is typically area-sampled. In an area-sampled image, each pixel value represents some form of average value for the area covered by the pixel, the average intensity of red, green or blue light within the area for example. Images containing measurement values, such as elevation data or weather data, are more commonly point-sampled. In a point-sampled image, each pixel represents the exact measurement value at a particular coordinate.

Figure 1, “Comparison of area-sampled and point-sampled images” illustrates the distinct composition of an area-sampled image and a point-sampled image. The numbers indicate pixel coordinates, and the green rectangles indicate the bounds of the images. In the area-sampled image, each pixel value is associated with a small, rectangular sub-region of the image. In the point-sampled image, each value is associated with a sample point that lies on an intersection of a horizontal and a vertical "grid line".

Given two images with the same pixel dimensions and equal geographical pixel spacing, the area-sampled image will therefore have slightly larger geographical bounds than the point-sampled one. The bounds of the area-sampled image will be the union of the areas covered by all individual pixels, whereas the bounds of the point-sampled image will be the minimal bounds containing all the sample points corresponding to each pixel.
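
The difference in bounds can be sketched with a little arithmetic. The following plain-Java example does not use the LuciadLightspeed API; the class name, the method names, and the 100-pixel, 10 m example values are hypothetical and only illustrate the reasoning above for a single axis.

```java
/** Plain-Java sketch (no LuciadLightspeed API): extent covered along one image axis. */
final class SamplingExtent {

    /** Area-sampled: every pixel covers a full cell, so n pixels span n cells. */
    static double areaSampledExtent(int pixelCount, double pixelSpacing) {
        return pixelCount * pixelSpacing;
    }

    /** Point-sampled: samples lie on grid intersections, so n samples span n - 1 intervals. */
    static double pointSampledExtent(int pixelCount, double pixelSpacing) {
        return (pixelCount - 1) * pixelSpacing;
    }

    public static void main(String[] args) {
        // Hypothetical example: 100 pixels along one axis, 10 m pixel spacing
        System.out.println(areaSampledExtent(100, 10.0));  // prints 1000.0
        System.out.println(pointSampledExtent(100, 10.0)); // prints 990.0
    }
}
```

With identical pixel counts and spacing, the area-sampled extent is exactly one pixel spacing larger than the point-sampled extent along each axis.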

Multi-level images and mosaics

ALcdBasicImage is the main building block for more advanced data structures containing pixel data, such as multi-level images and (multi-level) image mosaics.

ALcdMultilevelImage

A multi-level image is a collection of ALcdBasicImage instances covering the same geographic area. Typically these images have a different resolution: for example, the lower levels provide a lower-resolution overview of the higher levels. For visualization, the spatial resolution of the images will be matched to the current scale of the view. The image with the best match will be selected.

The relation between the image levels is defined by the ELcdLevelRelationship, which can be:

-

OVERVIEW: at each level, the image offers a different level of detail for the same data.

-

STACK: the images represent data in the same geographic region, but are otherwise unrelated.

ALcdImageMosaic and ALcdMultilevelImageMosaic

An image mosaic is a grid of multiple ALcdBasicImage instances. Each image in the grid is called a tile. This grid can be sparse: some tiles are not present in the grid. The tiles may also have different pixel resolutions.

Like ALcdBasicImage, ALcdImageMosaic's configuration object contains an ELcdImageSamplingMode property. All tiles in a mosaic are expected to have the same sampling mode.

A multi-level image mosaic is a collection of ALcdImageMosaic instances, with properties similar to ALcdMultilevelImage.

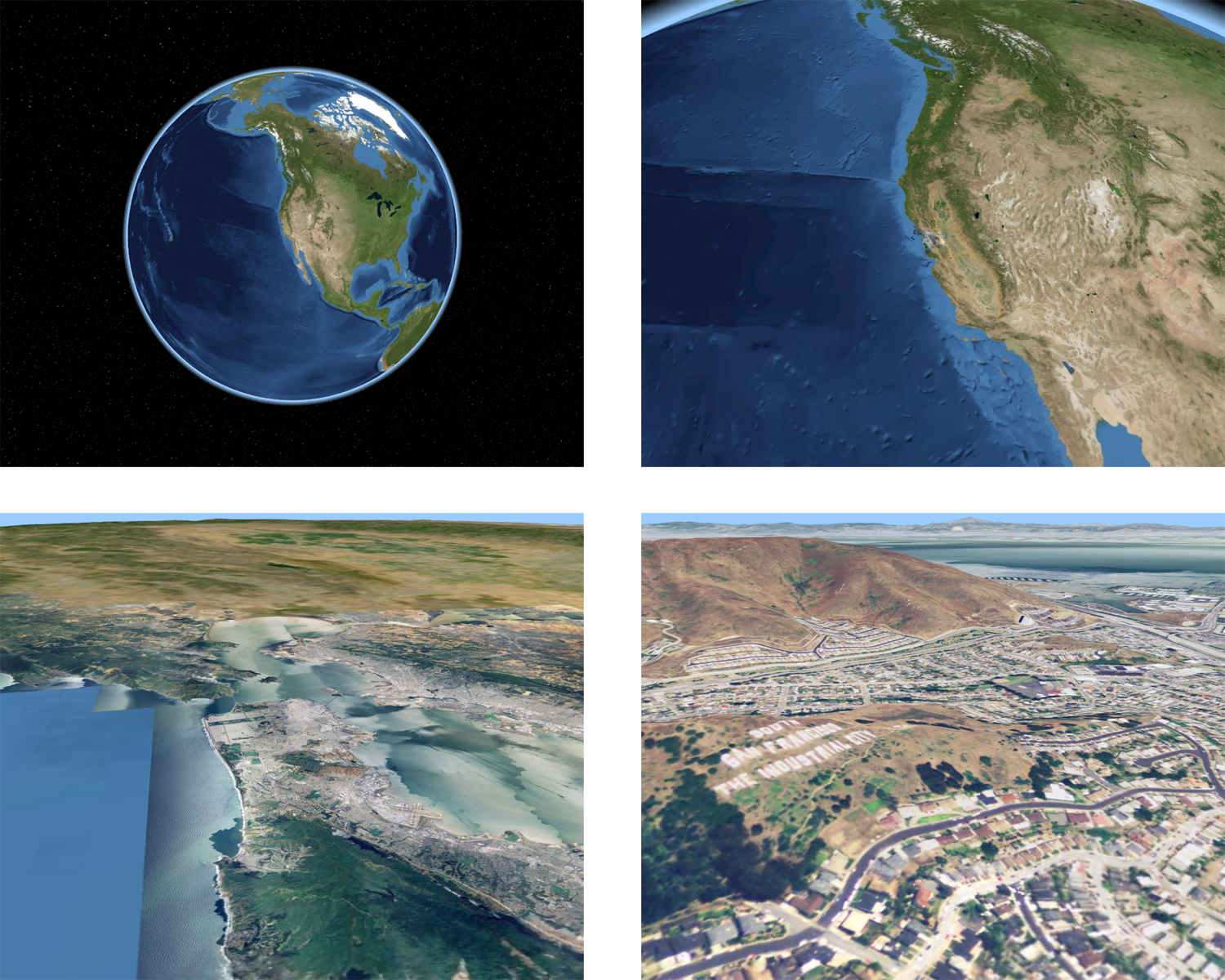

Why use multi-leveled mosaics?

Multi-leveled mosaics allow for multiple imagery resolutions

It is virtually impossible to visualize useful amounts of imagery or elevation data using brute force rendering methods. Because of the increasing availability of satellite imagery and aerial photography with resolutions of less than 1 meter per pixel, the size of the datasets that are typically involved has grown orders of magnitude beyond the amount of memory that is available in typical workstations. The surface area of Earth’s land mass is approximately 150,000,000 km². To cover this area with 1 m resolution imagery would require an image of 150 terapixels. When subdivided into 256x256 pixel tiles, this would require over 2 billion tiles in the image.

Hence, applications need to employ multi-resolution techniques and on-demand loading for the data. This is where multi-level image mosaics are particularly useful.
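
The figures quoted above can be checked with a few lines of integer arithmetic. This is a plain-Java sketch that does not use the LuciadLightspeed API; the class and method names are hypothetical.

```java
/** Plain-Java sketch: how many pixels and tiles a global 1 m land-mass image would need. */
final class TileCount {

    /** Number of pixels needed to cover an area, given the pixels along one side of a km. */
    static long pixelCount(long areaKm2, long pixelsPerKmSide) {
        return areaKm2 * pixelsPerKmSide * pixelsPerKmSide;
    }

    /** Number of square tiles needed to hold the given number of pixels, rounded up. */
    static long tileCount(long pixels, int tileSize) {
        long pixelsPerTile = (long) tileSize * tileSize;
        return (pixels + pixelsPerTile - 1) / pixelsPerTile;
    }

    public static void main(String[] args) {
        // 150,000,000 km² at 1 m resolution means 1000 pixels per km side
        long pixels = pixelCount(150_000_000L, 1000L);
        System.out.println(pixels);                 // prints 150000000000000 (150 terapixels)
        System.out.println(tileCount(pixels, 256)); // prints 2288818360 (over 2 billion tiles)
    }
}
```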

Sparse tile grids allow for optimal data storage

Another important aspect in this context is that image data providers typically do not offer data with a uniform resolution across the globe. Aerial photography, for instance, may be acquired with sub-meter resolution in populated areas, but not over the oceans.

Although it can be tiled internally, an ALcdBasicImage is always completely populated with pixel data of a uniform resolution. An ALcdImageMosaic, on the other hand, can consist of tiles with varying resolution and does not require all tiles to be present. This allows for optimized data storage, in which tiles are omitted in areas where no data is available.

In addition, ALcdMultilevelImageMosaic combines support for sparse data with support for multi-resolution representations. Thus, a multilevel mosaic can describe, for instance, high-resolution insets in low-resolution base data. Popular data sources such as Google Maps, Bing Maps, or OpenStreetMap use hierarchical tile pyramids that follow this principle. They can therefore be modeled as ALcdMultilevelImageMosaic instances.

Determining the right level of detail in a multi-level image

Multi-level images are essential for working efficiently with large sets of image data. You can select the image with the right level of detail by checking the image’s pixel density. The right level of detail depends on the context: when you are visualizing an image, for instance, the most appropriate level of detail is the one that most closely matches the current scale of the view. Users of an image typically perform a discrete sampling of pixel values at a certain sampling rate. If the sampling rate is too high with respect to the resolution of the image, the sampling will exhibit a staircase or pixelation effect, as neighboring samples get the same values. If the sampling rate is too low, it will miss details.

As an example, consider a multi-level image of 1000 m by 1000 m in a UTM grid system. Suppose it contains three simple images: the first one with 100x100 pixels, the second one with 500x500 pixels, and the third one with 1000x1000 pixels. Consequently, those images have pixel densities of 0.01, 0.25, and 1.0 pixels/m², respectively.

Now, suppose that an algorithm wants to sample pixel values at a rate of 1 sample every 10 m (0.1 samples/m) along a line in the given coordinate system. First, the algorithm must select the best image for the job, based on the required pixel density: ideal pixel density = (sampling rate)². In this case, the ideal pixel density would be 0.1² = 0.01 pixels/m². This means that the first image is the perfect choice for the algorithm.

In practice, the image with the ideal pixel density is typically not available. Instead, an available image that approaches the ideal density is selected. This selection is often based on a few criteria such as required quality and the acceptable amount of data to be loaded. Both TLcdGXYImagePainter and TLspRasterStyle provide settings to adjust these criteria. See the reference documentation of these classes for more detail.
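
The level selection described in this section can be sketched as follows. This is a plain-Java illustration, not the selection logic of TLcdGXYImagePainter or TLspRasterStyle: the class and method names are hypothetical, and picking the level whose density is closest to the ideal on a logarithmic scale is an assumption made for this example.

```java
/** Plain-Java sketch: choosing the level of a multi-level image by pixel density. */
final class LevelSelection {

    /** Pixel density in pixels/m² for an image of the given pixel size and ground size. */
    static double pixelDensity(int widthPx, int heightPx, double widthM, double heightM) {
        return ((double) widthPx * heightPx) / (widthM * heightM);
    }

    /** Ideal pixel density is the square of the linear sampling rate (samples/m). */
    static double idealDensity(double samplesPerMeter) {
        return samplesPerMeter * samplesPerMeter;
    }

    /** Assumed criterion: the level whose density has the smallest log-ratio to the ideal. */
    static int closestLevel(double[] densities, double ideal) {
        int best = 0;
        double bestDistance = Double.POSITIVE_INFINITY;
        for (int i = 0; i < densities.length; i++) {
            double distance = Math.abs(Math.log(densities[i] / ideal));
            if (distance < bestDistance) {
                bestDistance = distance;
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // The three levels of the 1000 m x 1000 m example
        double[] densities = {
            pixelDensity(100, 100, 1000, 1000),
            pixelDensity(500, 500, 1000, 1000),
            pixelDensity(1000, 1000, 1000, 1000)
        };
        // 1 sample every 10 m is 0.1 samples/m
        System.out.println(closestLevel(densities, idealDensity(0.1))); // prints 0
    }
}
```

A real application would typically bias this choice towards quality or towards a lower data volume, which is what the painter and style settings mentioned above control.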

The sampling is often performed in a reference system different from the coordinate system of the raster. If this is the case, you must transform the sampling reference system to the coordinate system of the raster. Otherwise you will not obtain comparable values. Since the transformation is not necessarily linear, the transformed sampling rate is generally just a numerical approximation that is only valid around a given location.

Creating and applying image operators

LuciadLightspeed provides various ALcdImageOperator operators that can be applied to images, such as cropping, convolution, compositing of two images, and so on. Operators take a number of inputs, typically one or more images and a number of other parameters, and produce a new ALcdImage as output.

Operators can be chained together to form an ALcdImageOperatorChain. Such operator chains allow you to apply complex sequences of operations to images in one go.

Creating operators

You can apply image operators in two ways:

-

Use the static methods that are available in all concrete ALcdImageOperator classes in the com.luciad.imaging.operator package. Program: Applying a band select operator to an image illustrates this for an operator that creates an image consisting of the first band of a multi-band image. This way of creating operators is the most convenient, and type-safe, when creating operators programmatically.

Program: Applying a band select operator to an image

ALcdImage outputImage = TLcdBandSelectOp.bandSelect(inputImage, new int[]{0});

-

Use the operators' generic apply() methods. The apply() method accepts an ILcdDataObject as input. Through this ILcdDataObject, all input parameters of the operator are supplied. The operator’s getParameterDataType() method returns the TLcdDataType that the apply() method expects. Program: Creating an operator from its data type illustrates how to use the data type to apply an operator to an image. This approach to creating operators allows for introspection of the operator’s parameters, which is useful when operators need to be serialized/de-serialized or created interactively via a GUI.

Program: Creating an operator from its data type

ALcdImageOperator operator = new TLcdBandSelectOp();

ILcdDataObject parameters = operator.getParameterDataType().newInstance();

parameters.setValue(TLcdBandSelectOp.INPUT_BANDS, new int[]{0});

parameters.setValue(TLcdBandSelectOp.INPUT_IMAGE, inputImage);

ALcdImage output = operator.apply(parameters);

Chaining operators

As indicated in Creating and applying image operators, operators can be chained together by providing the output image of one operator as input to the next. The most convenient way to create linear chains of operators is to use the builder provided by ALcdImageOperatorChain. This builder allows you to quickly append a series of image operators to one another. You can then apply such a series of operators to any number of images by passing them to ALcdImageOperatorChain.apply().

To create more complex operator graphs, which work on multiple images for instance, you can create custom implementations of ALcdImageOperatorChain.

Available operators

This section provides more background information about each of the available image operators. For more detailed development information, code examples, and illustrations, see the API reference documentation of the com.luciad.imaging.operator package.

TLcdBandMergeOp: merging the bands of multiple images

You can use TLcdBandMergeOp to take the bands of multiple images, and merge them into a single output image. This operation is typically used to create one multi-band image from several single-band images. For instance, you can take three single-band grayscale images, and merge them into one image with each grayscale band mapped to an RGB color channel.

The operation will sequentially add the image bands in the order in which they occur in the images: the first band of input image 2 is added after the last band of input image 1, and so on. You can merge up to four image bands.

TLcdBandSelectOp: creating an image from image bands

You can use TLcdBandSelectOp to select one or more color bands from an image, and display just the selected bands in an output image.

You can specify the bands you want as an array of integers, and re-order the selected bands in the array. If you select all available color bands, the bands will be mapped to the Red-Green-Blue (RGB) color channels.

TLcdBinaryOp: applying binary operations to two images

Using TLcdBinaryOp, you can perform binary operations on two images: by specifying two perfectly overlapping input images, you can add, subtract, multiply, and divide the values of pixels with an identical position in the two images. Using the MIN and MAX operations, you can also select the pixel with the lowest or highest value, and use that in the output image.

These binary operations are often part of image processing sequences intended to enhance images in some way. If you have an unclear image, for instance, you could try adding a second image, and perform binary operations on the two images to enhance the clarity of the first image.
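
To make the per-pixel nature of these operations concrete, here is a plain-Java sketch that combines two equally sized single-band pixel arrays. It does not use TLcdBinaryOp or any other LuciadLightspeed class; the class and method names are hypothetical.

```java
/** Plain-Java sketch: per-pixel binary operations on two equally sized pixel arrays. */
final class PixelBinaryOps {

    /** Applies the given operation to each pair of pixels at the same position. */
    static double[] combine(double[] first, double[] second,
                            java.util.function.DoubleBinaryOperator operation) {
        if (first.length != second.length) {
            throw new IllegalArgumentException("Images must have identical dimensions");
        }
        double[] result = new double[first.length];
        for (int i = 0; i < first.length; i++) {
            result[i] = operation.applyAsDouble(first[i], second[i]);
        }
        return result;
    }

    public static void main(String[] args) {
        double[] a = {1, 5};
        double[] b = {4, 2};
        System.out.println(java.util.Arrays.toString(combine(a, b, Double::sum))); // prints [5.0, 7.0]
        System.out.println(java.util.Arrays.toString(combine(a, b, Math::max)));   // prints [4.0, 5.0]
    }
}
```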

TLcdColorConvertOp: changing the color model of an image

The colors of an image are specified in a certain way, determined by the color model linked to the image. Such a color model may be a simple indexed color model, a grayscale color model, or an RGB model, for example. To be able to display an image in different environments, you may need to convert the color model linked to the image to another color model.

To transform the color model of an input image to a different color model, use TLcdColorConvertOp. The operation takes care of all the required transformations to convert the indexed color model of an image to the standard RGB color space (sRGB), for example.

For a full list of color space conversion options, see the API reference documentation.

TLcdColorLookupOp: transforming image colors

You can change the colors in your images to other colors. For this purpose, LuciadLightspeed allows you to set up color lookup tables consisting of color mappings for a color transformation. Next, you can transform the colors in your images to their corresponding colors in the lookup table. This transformation operation is called a color lookup.

To perform a color lookup operation on an input image, use TLcdColorLookupOp. It transforms the colors in the input image to the corresponding colors in a three-dimensional ALcdColorLookupTable. The output image displays the transformed colors.

TLcdCompositeOp: combining two images into one

TLcdCompositeOp combines two images into one image, so that the resulting image covers the union of the bounds of the input images. The second image overlaps the first image, and may be re-sampled during the image compositing operation.

You can use this operation to overlay two partially overlapping images, and create an image that covers a larger area.


TLcdConvolveOp: changing an image through convolution

Convolution is a mathematical operation that is frequently applied in image processing to change an image comprehensively. It takes two sets of input values and puts them into linear combinations to produce output values. In LuciadLightspeed image processing, you can combine the pixel values of an input image linearly with a two-dimensional array of numbers, also known as a convolution kernel, to produce an output image.

A TLcdConvolveOp operation can be of use for image sharpening, blurring, edge detection, and so on.
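
The linear combination described above can be sketched in plain Java. This example does not call TLcdConvolveOp; the class and method names are hypothetical, the image is assumed to be a single-band, row-major array, and clamping coordinates at the image border is an assumption made for this sketch.

```java
/** Plain-Java sketch: 2D convolution of a single-band, row-major image with a kernel. */
final class Convolution {

    static double[] convolve(double[] image, int width, int height,
                             double[] kernel, int kernelWidth, int kernelHeight) {
        double[] result = new double[image.length];
        int centerX = kernelWidth / 2;
        int centerY = kernelHeight / 2;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                double sum = 0;
                for (int ky = 0; ky < kernelHeight; ky++) {
                    for (int kx = 0; kx < kernelWidth; kx++) {
                        // Convolution flips the kernel relative to the image offsets;
                        // out-of-range coordinates are clamped to the edge (assumption)
                        int sx = clamp(x - (kx - centerX), 0, width - 1);
                        int sy = clamp(y - (ky - centerY), 0, height - 1);
                        sum += kernel[ky * kernelWidth + kx] * image[sy * width + sx];
                    }
                }
                result[y * width + x] = sum;
            }
        }
        return result;
    }

    private static int clamp(int value, int min, int max) {
        return Math.max(min, Math.min(max, value));
    }

    public static void main(String[] args) {
        // 3x3 sharpening kernel; its weights sum to 1, so flat areas are left unchanged
        double[] sharpen = {-1, -1, -1, -1, 9, -1, -1, -1, -1};
        double[] image = {0, 0, 0, 0, 10, 0, 0, 0, 0};
        double[] result = convolve(image, 3, 3, sharpen, 3, 3);
        System.out.println(result[4]); // prints 90.0: the bright center pixel is emphasized
    }
}
```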

TLcdCropOp: cropping an image

The cropping operation reduces the size of your image by retaining only the selected rectangular portion of an image, and discarding the rest. In a TLcdCropOp, you can define the position and size of the rectangle that needs to be kept.

Keep in mind that after a cropping operation, the spatial bounds of the new image may be different from the original image.

If you want to bring the cropped image back to the size of the original image, use the TLcdExpandOp operation. This can be useful when you are working with an ALcdImageMosaic object. In an ALcdImageMosaic grid, you are not allowed to change the bounds of the ALcdBasicImage objects constituting an image mosaic.

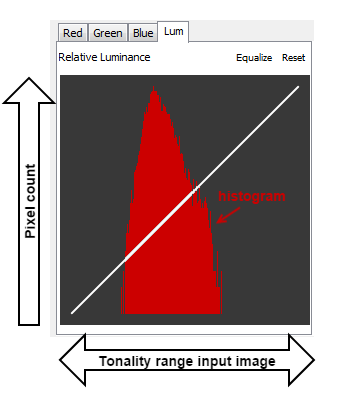

TLcdHistogramOp: creating a histogram of an image

An image histogram is a graph that displays the tonal range of an image on its horizontal axis, and the distribution of tones across that range. Each histogram bin in the graph displays the concentration of pixels for that pixel value, and as such the intensity of that tone in the image.

lightspeed.imaging.multispectral sample

An image’s tonality varies from dark to bright for an entire image, or from high color intensity to low color intensity for a color channel.

To generate such a histogram for an image, use the image as input for a TLcdHistogramOp operation. The result is a one-dimensional ALcdImage of the histogram.
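
The binning idea behind a histogram can be sketched in plain Java. This is not how TLcdHistogramOp is implemented; the class and method names are hypothetical, and the sketch assumes integer pixel values in the range 0 to maxValue.

```java
/** Plain-Java sketch: counting pixel values of a single band into equally wide bins. */
final class Histogram {

    /** Returns the number of pixels per bin for values in the range [0, maxValue]. */
    static int[] histogram(int[] pixels, int binCount, int maxValue) {
        int[] bins = new int[binCount];
        for (int pixel : pixels) {
            if (pixel < 0 || pixel > maxValue) {
                throw new IllegalArgumentException("Pixel value out of range: " + pixel);
            }
            // Map the value range [0, maxValue] onto bin indices [0, binCount - 1]
            int bin = (int) ((long) pixel * binCount / (maxValue + 1L));
            bins[bin]++;
        }
        return bins;
    }

    public static void main(String[] args) {
        // Six 8-bit pixel values sorted into 4 bins of 64 values each
        int[] pixels = {0, 10, 64, 200, 255, 255};
        System.out.println(java.util.Arrays.toString(histogram(pixels, 4, 255))); // prints [2, 1, 0, 3]
    }
}
```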

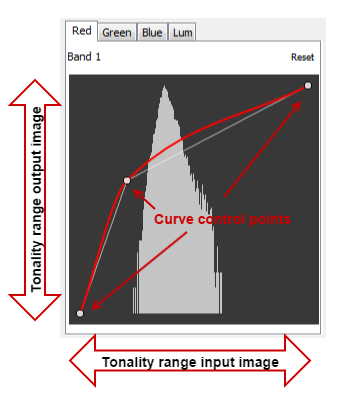

TLcdCurvesOp: using curves to change image color tones or brightness

Changing the distribution of tones across an image or image band can improve its contrast and luminance. Curves provide a mapping for such a re-distribution of tones in an image or image band. They map the tonal distribution of an input image to the tonal distribution of the image resulting from the curves operation.

lightspeed.imaging.multispectral sample

Curves are the graphical representation of the mathematical function that describes the relationship between the tonal range of an input image and the tonal range of an output image. They are drawn on a graph displaying the input tonal range on the horizontal axis and the output tonal range on the vertical axis. To start with, the curve will always be a straight line: the tonal distributions of the input and output image are identical.

The curves are defined by their control points. To re-distribute the tonal range of an image, you can change the control points of the curve so that the curve is adjusted, and the tonal distribution shifts to a different part of the tonal range in the output image. If you adjust the luminance curve for an image, the luminance of the output image changes, and the image will become darker or brighter. If you mapped an image band to a color channel, you can adjust the curve on the color channel, and emphasize or de-emphasize certain colors.

The TLcdCurvesOp operation allows you to specify the position of the control points for the curves, and the curve type, for one or more image bands. You can choose between two curve types, determined by the mathematical function used to draw the curves: piecewise linear or Catmull-Rom.
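
As an illustration of how control points define a tone mapping, here is a plain-Java sketch of a piecewise linear curve. It does not use TLcdCurvesOp; the class and method names are hypothetical, and tonal values are assumed to be normalized to the range 0 to 1.

```java
/** Plain-Java sketch: a piecewise linear tone curve defined by control points. */
final class PiecewiseLinearCurve {

    private final double[] inputs;
    private final double[] outputs;

    /** Control points as (input, output) pairs; inputs must be strictly increasing. */
    PiecewiseLinearCurve(double[] inputs, double[] outputs) {
        if (inputs.length != outputs.length || inputs.length < 2) {
            throw new IllegalArgumentException("Need at least two matching control points");
        }
        for (int i = 1; i < inputs.length; i++) {
            if (inputs[i] <= inputs[i - 1]) {
                throw new IllegalArgumentException("Inputs must be strictly increasing");
            }
        }
        this.inputs = inputs.clone();
        this.outputs = outputs.clone();
    }

    /** Maps an input tone to an output tone by interpolating between control points. */
    double map(double input) {
        int last = inputs.length - 1;
        if (input <= inputs[0]) return outputs[0];
        if (input >= inputs[last]) return outputs[last];
        int i = 1;
        while (input > inputs[i]) i++;
        double t = (input - inputs[i - 1]) / (inputs[i] - inputs[i - 1]);
        return outputs[i - 1] + t * (outputs[i] - outputs[i - 1]);
    }

    public static void main(String[] args) {
        // Raising the middle control point brightens the mid-tones
        PiecewiseLinearCurve brighten = new PiecewiseLinearCurve(
            new double[]{0.0, 0.5, 1.0}, new double[]{0.0, 0.75, 1.0});
        System.out.println(brighten.map(0.25)); // prints 0.375
    }
}
```

A curve with only the control points (0, 0) and (1, 1) is the straight line mentioned above: it maps every input tone to itself.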

TLcdExpandOp: expanding an image

In an expand operation, you make an image larger so that it covers a specified pixel area. To specify the new size of the image, you provide the X and Y coordinates for the new pixel area, as well as its width and height.


TLcdIndexLookupOp: transforming the colors of a single-band image

The TLcdIndexLookupOp operation is similar to TLcdColorLookupOp, but instead of using a three-dimensional color lookup table to transform image colors, you use a one-dimensional lookup table. This is useful to map and transform the colors of images with just one band.

For a demonstration, see Attaching a color table to an image with elevation data.

TLcdMedianOp: applying a median to pixel values

The TLcdMedianOp median operator computes the median for an area around each pixel in an image, and then replaces the center pixel value with the calculated median value. You need to specify the area around the pixel as a square with odd dimensions, for example a window with both a width and height of 3. Median filters are useful to reduce noise in an image.
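
The following plain-Java sketch shows the median computation for a square window. It does not use TLcdMedianOp; the class and method names are hypothetical, and clamping coordinates at the image border is an assumption made for this example.

```java
/** Plain-Java sketch: median filter over a square window with odd dimensions. */
final class MedianFilter {

    static double[] median(double[] image, int width, int height, int window) {
        if (window < 1 || window % 2 == 0) {
            throw new IllegalArgumentException("Window size must be odd");
        }
        int radius = window / 2;
        double[] result = new double[image.length];
        double[] neighborhood = new double[window * window];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int n = 0;
                for (int dy = -radius; dy <= radius; dy++) {
                    for (int dx = -radius; dx <= radius; dx++) {
                        // Out-of-range coordinates are clamped to the edge (assumption)
                        int sx = Math.max(0, Math.min(width - 1, x + dx));
                        int sy = Math.max(0, Math.min(height - 1, y + dy));
                        neighborhood[n++] = image[sy * width + sx];
                    }
                }
                // The median is the middle element of the sorted neighborhood
                java.util.Arrays.sort(neighborhood);
                result[y * width + x] = neighborhood[neighborhood.length / 2];
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // A single noisy pixel in an otherwise uniform 3x3 image
        double[] noisy = {10, 10, 10, 10, 255, 10, 10, 10, 10};
        System.out.println(median(noisy, 3, 3, 3)[4]); // prints 10.0: the spike is removed
    }
}
```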

TLcdPixelReplaceOp: replacing one pixel value with another

The TLcdPixelReplaceOp operation looks for pixels with a given value and replaces them with another value.

For instance, in a color image you could use it to replace all green pixels with red ones.

Typically, this operator is useful when you want to remove meaningless borders around the edges of an image. You can use TLcdPixelReplaceOp to replace the usually black border color with a transparent color.

If the image doesn’t have an alpha band to begin with, you can use […]

TLcdPixelRescaleOp: applying a scale and offset to pixel values

You can perform a TLcdPixelRescaleOp operation on an image to apply a scale and offset to all its pixel values. For instance, if you set a scale of 0.5, a pixel value of 100 will be scaled to a pixel value of 50. As a result of the rescaling operation, the colors or brightness of the entire image shift linearly.

TLcdPixelTransformOp: applying an affine transformation to pixels

The TLcdPixelTransformOp operation is similar to the TLcdPixelRescaleOp operation: you can apply a scale and offsets to all pixel values in an image, but the result will be an affine transformation instead of a linear transformation. Instead of providing a single value to rescale pixel values with, you can provide a matrix of values. The pixel values in the image will be multiplied with the entire matrix, before any additional offsets are applied. This increases the number of transformations you can apply to a pixel.
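
The difference between a per-value rescale and a matrix-based transformation can be sketched in plain Java. This example does not use TLcdPixelRescaleOp or TLcdPixelTransformOp; the class and method names are hypothetical, and a pixel is assumed to be an array with one value per band.

```java
/** Plain-Java sketch: scale-and-offset versus matrix-and-offsets on pixel values. */
final class PixelAffineTransform {

    /** Rescale: a single scale and offset applied to one value. */
    static double rescale(double value, double scale, double offset) {
        return value * scale + offset;
    }

    /** Affine transform: multiply the band values with the matrix, then add the offsets. */
    static double[] transform(double[] pixel, double[][] matrix, double[] offsets) {
        double[] result = new double[matrix.length];
        for (int row = 0; row < matrix.length; row++) {
            double product = 0;
            for (int band = 0; band < pixel.length; band++) {
                product += matrix[row][band] * pixel[band];
            }
            result[row] = product + offsets[row];
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(rescale(100, 0.5, 0)); // prints 50.0

        // A matrix can mix bands, which a single scale cannot: here red and blue are swapped
        double[][] swapRedAndBlue = {{0, 0, 1}, {0, 1, 0}, {1, 0, 0}};
        double[] result = transform(new double[]{10, 20, 30}, swapRedAndBlue, new double[]{0, 0, 0});
        System.out.println(java.util.Arrays.toString(result)); // prints [30.0, 20.0, 10.0]
    }
}
```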

TLcdResizeOp: changing the resolution of an image

To change the resolution of an image, use TLcdResizeOp. Changing the resolution of an image allows you to adapt the image resolution to the zoom level, for instance. You can display lower image resolutions when a user has zoomed out of the map, and higher image resolutions when a user zooms in.

This image operator allows you to specify double values as scales for adjusting the resolution along both the X axis and the Y axis of the image.

Note that the bounds of the image do not change during this operation, and that the output image covers the same area on the map as the input image.

TLcdSemanticsOp: adjusting the semantics of an image

Use TLcdSemanticsOp to re-interpret the semantics of an image band, like its data type for example. You can also use the semantics operation to convert an image’s measurement semantics to color semantics, as demonstrated in Selecting 3 bands from a 7-band LandSat image as RGB.

While you are changing the semantics, you can also scale and translate the semantic values.

For more information about band semantics, see ALcdImage.

TLcdSwipeOp: swiping between two images

The TLcdSwipeOp operation overlays two ALcdBasicImage objects, and adds a line across the resulting image. On the left side of the line, the first image is visible. On the right side of the line, the second image is visible.

You determine the position of the swipe line by specifying a distance in pixels from the left hand side of the image.

You can use this operation to add an image swiping function in your application. It allows a user to find differences between two images captured at the same location, but at a different point in time, for example.
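
The per-pixel selection behind a swipe can be sketched in plain Java. This example does not use TLcdSwipeOp; the class and method names are hypothetical, and both images are assumed to be single-band, row-major arrays of identical size.

```java
/** Plain-Java sketch: showing the first image left of a swipe line and the second right of it. */
final class Swipe {

    /** swipeX is the distance of the swipe line from the left-hand side, in pixels. */
    static int[] swipe(int[] first, int[] second, int width, int height, int swipeX) {
        int[] result = new int[width * height];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int index = y * width + x;
                result[index] = x < swipeX ? first[index] : second[index];
            }
        }
        return result;
    }

    public static void main(String[] args) {
        int[] first = {1, 1, 1, 1};
        int[] second = {2, 2, 2, 2};
        // One row of 4 pixels with the swipe line 2 pixels from the left
        System.out.println(java.util.Arrays.toString(swipe(first, second, 4, 1, 2))); // prints [1, 1, 2, 2]
    }
}
```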

If you are working in a Lightspeed view, and want to set up a swipe operation between two images that reside in different layers or have distinct references, it is recommended to use a […]

Image processing operator examples

This section demonstrates how image operators can be used as building blocks for practical image processing.

Selecting 3 bands from a 7-band LandSat image as RGB

When you are using 7-band LandSat imagery, you typically want to visualize it as near infrared, also known as the standard false color composite. This requires the use of bands 4, 3, and 2, where band 4 corresponds to the near infrared band. In this combination, vegetation appears in shades of red.

This use case is illustrated in Program: Image operator for visualizing a 7-band LandSat image as Near infrared. First you use a band select operator to select the desired bands. Band indices are zero-based, so bands 4, 3, and 2 correspond to the indices 3, 2, and 1. Because the LandSat7 image contains measurement data, the result is an image with 3 measurement bands. To visualize the measurement data, you need to convert it to an image with color data. So in the second step you use a semantics operator to convert the image to an RGB image by simply using one band for each of the color channels.

ALcdImageOperatorChain chain = ALcdImageOperatorChain

.newBuilder()

.bandSelect(new int[]{3, 2, 1})

.semantics(

TLcdBandColorSemanticsBuilder.newBuilder()

.colorModel(ColorModel.getRGBdefault())

.buildSemantics()

.subList(0, 3)

)

.build();

Attaching a color table to an image with elevation data

When you are using elevation data, you typically visualize it by mapping the elevation values to colors using a color table.

This is illustrated in Program: Image operator for visualizing elevation data using a color table. First you create the color map covering the entire range of the image’s data type. Then you use this in a color lookup operator. The operator uses the elevation values to choose a color from the table. The result is an image with color data that can be visualized by the painter.

// Create a color map

Color sea = new Color(0x6AB2C7);

Color grass = new Color(0x5EBC5A);

Color hills = new Color(0x9B6E40);

Color snow = new Color(0xB5A4BA);

TLcdColorMap colorMap = new TLcdColorMap(

new TLcdInterval(Short.MIN_VALUE, Short.MAX_VALUE),

new double[]{Short.MIN_VALUE, 0.0, 100.0, 500.0, 2000.0, Short.MAX_VALUE},

new Color[]{sea, sea, grass, hills, snow, snow}

);

// Create the operator chain

ALcdImageOperatorChain chain = ALcdImageOperatorChain

.newBuilder()

.indexLookup(

TLcdLookupTable.newBuilder().fromColorMap(colorMap).build()

)

.build();

Image processing during visualization

You can apply and update the discussed image operators interactively when you are visualizing image data.

Applying image operators in a Lightspeed view

To apply image operator chains to images in a layer, you can use a TLspImageProcessingStyle in conjunction with TLspRasterLayerBuilder. This is illustrated in Program: Applying a filter as a style on a raster layer.

// Decode the model

TLcdGeoTIFFModelDecoder decoder = new TLcdGeoTIFFModelDecoder();

ILcdModel model = decoder.decode("Data/GeoTIFF/BlueMarble/bluemarble.tif");

// Create the operators and style

ALcdImageOperatorChain ops = ALcdImageOperatorChain.newBuilder()

.convolve(new double[]{-1, -1, -1, -1, 9, -1, -1, -1, -1}, 3, 3)

.build();

TLspImageProcessingStyle style = TLspImageProcessingStyle.newBuilder()

.operatorChain(ops)

.build();

// Create a raster layer for the given model and apply the filter style

TLspRasterStyle rasterStyle = TLspRasterStyle.newBuilder().build();

TLspStyler styler = new TLspStyler(rasterStyle, style);

ILspLayer layer = TLspRasterLayerBuilder.newBuilder()

.model(model)

.styler(REGULAR_BODY, styler)

.build();

Note that you must use TLspImageProcessingStyle in conjunction with TLspRasterStyle. You can use TLspRasterStyle to adjust the brightness, contrast, and opacity of the result of the processed image, for example.

Applying image operators in a GXY view

To visualize processed images in a GXY view, use the TLcdGXYImagePainter. You can use the setImageOperatorChain() method to apply any ALcdImageOperatorChain to the images visualized in the layer.

Offline processing

In some cases, access to the pixel data of an image is required for reasons other than visualization. You may want to query pixel values for data inspection, for example, or you want to decode, process, and encode image data on disk.

Accessing pixel values through ALcdImagingEngine

The main building block of all ALcdImage implementations is ALcdBasicImage.

As discussed in ALcdBasicImage, ALcdBasicImage is an opaque handle to image data.

To access the data, you need an imaging engine.

To create an engine, you can use the createEngine() factory method in ALcdImagingEngine.

Imaging engines implement ILcdDisposable.

Hence, they need to be disposed after use.

This is illustrated in Program: Creation and disposal of an imaging engine using a try-with-resources construct.

try (ALcdImagingEngine engine = ALcdImagingEngine.createEngine()) {

//do something with the engine

}

Multiple implementations of imaging engines can be available, for example if multiple compute devices (CPU, GPU) are found. To list all available imaging engine implementations, use ALcdImagingEngine.getEngineDescriptors. A Descriptor reports whether an engine is hardware-accelerated, and evaluates operators on the GPU. It also reports whether an engine can be used for tasks other than visualization. You can create an engine for a specific descriptor using the ALcdImagingEngine.createEngine(Descriptor) method.

Once an engine has been created, you can query the content of images using the getImageDataReadOnly() method. This is illustrated in Program: Accessing the pixel values of an ALcdBasicImage.

try (ALcdImagingEngine engine = ALcdImagingEngine.createEngine()) {

ALcdBasicImage output = TLcdBinaryOp.binaryOp(inputBasicImage1, inputBasicImage2, ADD);

int width = output.getConfiguration().getWidth();

int height = output.getConfiguration().getHeight();

Raster outputData = engine.getImageDataReadOnly(output, new Rectangle(0, 0, width, height));

float[] pixel = new float[1]; // Assuming a single band image with float pixel data

for (int j = 0; j < height; j++) {

for (int i = 0; i < width; i++) {

outputData.getPixel(i, j, pixel);

System.out.println("Pixel at " + i + "," + j + ":" + pixel[0]);

}

}

}

Imaging engines are safe for use on multiple threads.

Encoding processed images to disk

Processed ALcdBasicImage instances and ALcdMultilevelImage instances can be encoded back to disk with TLcdGeoTIFFModelEncoder. Program: Decoding, processing, and encoding the result illustrates this for an operator that applies a convolution kernel to a decoded GeoTIFF image.

// Decode the GeoTIFF file

TLcdGeoTIFFModelDecoder decoder = new TLcdGeoTIFFModelDecoder();

try (ILcdModel model = decoder.decode("Data/GeoTIFF/BlueMarble/bluemarble.tif")) {

// Convolve the first element

ILcdModel processedModel = new TLcdVectorModel(model.getModelReference(), new TLcdImageModelDescriptor());

Enumeration e = model.elements();

if (e.hasMoreElements()) {

Object o = e.nextElement();

ALcdImage image = ALcdImage.fromDomainObject(o);

if (image != null) {

// Convolve the image using a 3x3 kernel

ALcdImage convolved = TLcdConvolveOp.convolve(

image,

new double[]{-1, -1, -1, -1, 9, -1, -1, -1, -1},

3,

3

);

processedModel.addElement(convolved, ILcdModel.NO_EVENT);

}

}

// Create an imaging engine and encode the filtered result

try (ALcdImagingEngine engine = ALcdImagingEngine.createEngine()) {

TLcdGeoTIFFModelEncoder encoder = new TLcdGeoTIFFModelEncoder(engine);

encoder.export(processedModel, outputTiffFile);

}

}