Multi-node scenarios

Based on the main use cases described in the LuciadFusion multi-node introduction, we discuss how to build upon the basic setup example with a reverse proxy to realize three multi-node scenarios:

- A Failover scenario
- A Designated Studio and pre-processing node scenario
- A Load balancing of services scenario

When we discuss the setup for each scenario, we take all guidelines and limitations into account.

We keep the setups in these scenarios simple, but you are free to mix and match them according to your requirements.

We finish by showing you how you could combine the configurations of the three discussed scenarios in a production setup.

Using an Apache server as the reverse proxy

We build upon our example setup by adding an Apache HTTP server, which we use as the reverse proxy. We install the Apache HTTP server on the same machine as the LuciadFusion instances to keep things simple.

You need to make sure that you can enable modules, include configurations, and restart Apache to follow along with the example setup. The instructions for this depend upon your system, which is why we didn’t include them. See the documentation at https://httpd.apache.org/docs/2.4/, or search online if you need help with Apache HTTP Server.

Remember that you can mix and expand upon configurations if you need to. See Apache references for a list of Apache documentation that you can use as a reference for more advanced configurations.

If you want an introduction on how to set up an Apache reverse proxy for a single instance of LuciadFusion and how to add

LuciadFusion endpoints available for configuration

Before we get started with the scenarios, make sure you are familiar with the endpoints available in LuciadFusion. We use those to configure the reverse proxy. You can find the list of available endpoints here.

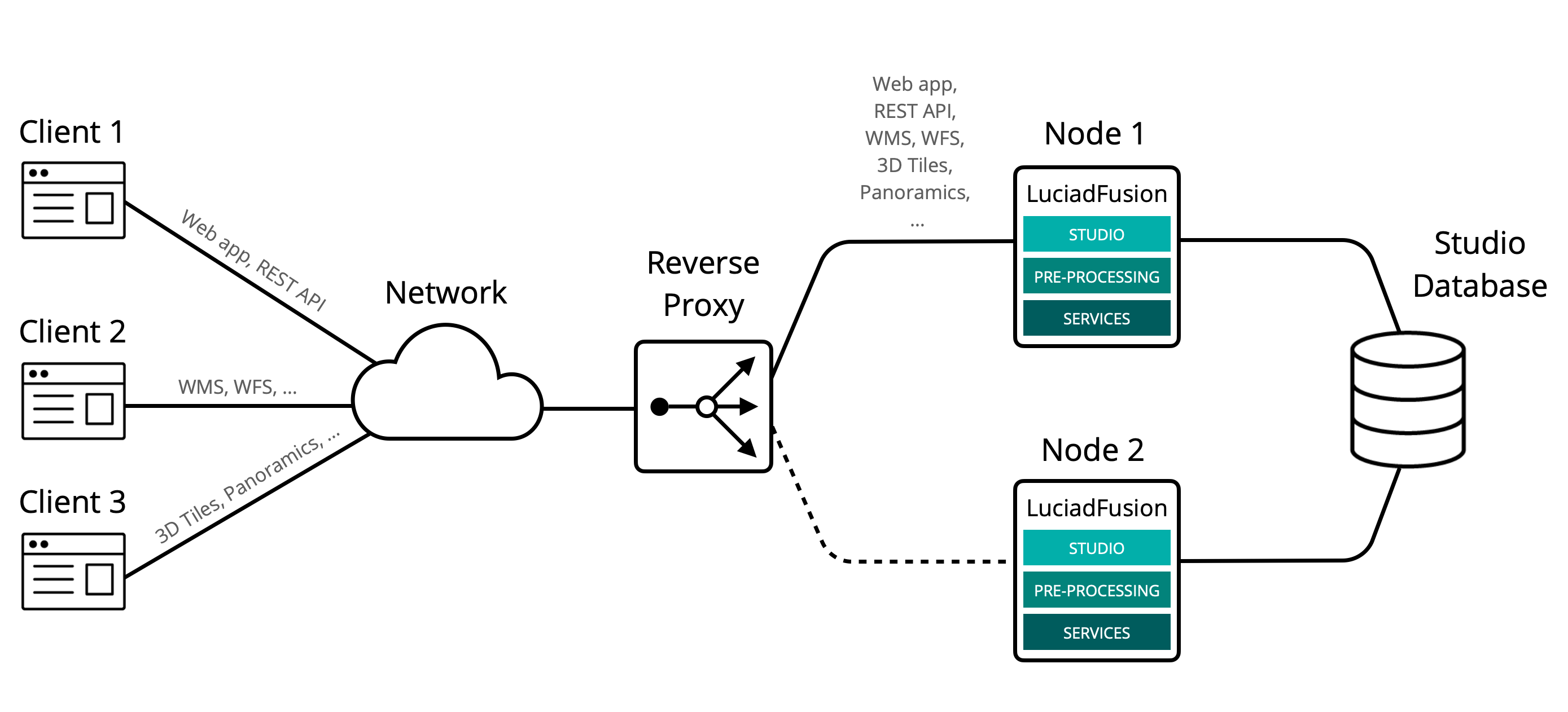

Failover

For this scenario, we use one of the LuciadFusion instances from our example setup as a standby instance. It takes over if the other instance experiences an error from which it can’t immediately recover, like a server, system, hardware, or network error.

To achieve this, we create a group consisting of our two instances. We mark the instance node2, which is running locally on port 8082, as the standby instance.

The reverse proxy forwards all traffic to node1, unless it becomes unavailable due to an error. In that case, it sends all traffic to node2.

Remember from the limitations discussed in guideline 1 that you must log on to the Studio web application again when the reverse proxy switches nodes.

This failover configuration shows how you can configure an Apache HTTP Server to add failover support.

Make sure to enable the mod_proxy, mod_proxy_balancer, and mod_proxy_wstunnel modules when you include this configuration.
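As a rough illustration of what enabling these modules can look like, the sketch below uses plain LoadModule directives. The modules/ paths are an assumption that depends on your installation, and some distributions use helper commands instead. The sketch also lists mod_proxy_http, mod_slotmem_shm, and mod_lbmethod_byrequests, on the assumption that the balancer forwards to http:// backends and uses the byrequests method.

```apache
# Sketch only: module file paths vary per installation.
LoadModule proxy_module modules/mod_proxy.so
# Assumed to be needed for the http:// balancer members
LoadModule proxy_http_module modules/mod_proxy_http.so
LoadModule proxy_balancer_module modules/mod_proxy_balancer.so
# Needed for the ws:// members used for Studio notifications
LoadModule proxy_wstunnel_module modules/mod_proxy_wstunnel.so
# Supporting modules that mod_proxy_balancer relies on
LoadModule slotmem_shm_module modules/mod_slotmem_shm.so
LoadModule lbmethod_byrequests_module modules/mod_lbmethod_byrequests.so
```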

The configuration defines a load balancing group, and forwards all requests for services and Studio to that group.

The group includes two members, with node2 marked as a standby through the status=+H parameter. Note that we also defined a second load balancing group with the same members to make sure that notifications keep working in the Studio web application.

After a restart of Apache, you can access the Studio web application at http://localhost:80.

Connect to the WMS service that we created earlier to test the example multi-node setup. It’s available at http://localhost:80/ogc/wms/example_wms.

In a WMS client, test that when you stop node1, the WMS service keeps working.

<Proxy balancer://fusionset>

BalancerMember http://localhost:8081

BalancerMember http://localhost:8082 status=+H

</Proxy>

<Proxy "balancer://fusionnotificationsset">

BalancerMember ws://localhost:8081

BalancerMember ws://localhost:8082 status=+H

</Proxy>

ProxyPass /lts balancer://fusionset/lts

ProxyPassReverse /lts balancer://fusionset/lts

ProxyPass /file balancer://fusionset/file

ProxyPassReverse /file balancer://fusionset/file

ProxyPass /ogc balancer://fusionset/ogc

ProxyPassReverse /ogc balancer://fusionset/ogc

ProxyPass /panoramic balancer://fusionset/panoramic

ProxyPassReverse /panoramic balancer://fusionset/panoramic

ProxyPass /hspc balancer://fusionset/hspc

ProxyPassReverse /hspc balancer://fusionset/hspc

ProxyPass /mbtiles balancer://fusionset/mbtiles

ProxyPassReverse /mbtiles balancer://fusionset/mbtiles

ProxyPass /schemas balancer://fusionset/schemas

ProxyPassReverse /schemas balancer://fusionset/schemas

ProxyPass /studio/service/notifications balancer://fusionnotificationsset/studio/service/notifications

ProxyPassReverse /studio/service/notifications balancer://fusionnotificationsset/studio/service/notifications

ProxyPass /studio balancer://fusionset/studio

ProxyPassReverse /studio balancer://fusionset/studio

ProxyPass /actuators balancer://fusionset/actuators

ProxyPassReverse /actuators balancer://fusionset/actuators

ProxyPass /login balancer://fusionset/login

ProxyPassReverse /login balancer://fusionset/login

ProxyPass /api balancer://fusionset/api

ProxyPassReverse /api balancer://fusionset/api

ProxyPass / balancer://fusionset/studio

ProxyPassReverse / balancer://fusionset/studio

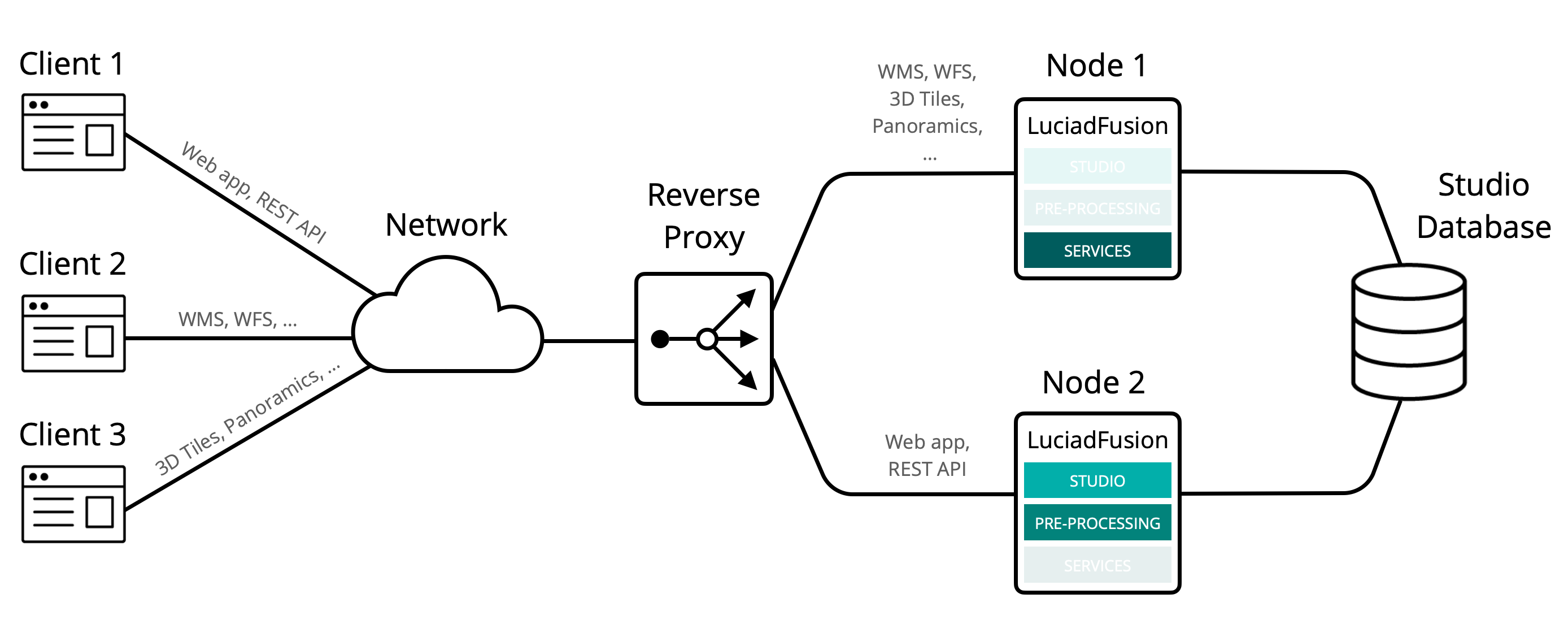

Designated Studio and pre-processing node

In this scenario, we dedicate one LuciadFusion instance to handle all Studio-related requests. Those consist of handling requests from the web application and the REST API, and executing all crawl and pre-processing jobs. We dedicate another instance to handle all requests from services such as WMS, WFS, and 3D Tiles.

To that end, we route all incoming requests for services in our example setup to the first LuciadFusion instance node1. We route all Studio requests to the second instance node2.

This designated node configuration shows the rules used to configure an Apache HTTP server for this setup.

The benefit of this setup is that it offers a way to separate the demanding load of pre-processing, crawling, and data management from the load of clients consuming the data from services.

If you plan to build on this setup, consider guideline 1. All Studio-related tasks must run on the same node.

It’s impossible, for example, to add an extra node that only runs jobs, or only provides access to the REST API. You must handle all those tasks on the same instance.

You can distribute the services requests further to distinct nodes, though.

If you have a lot of WMS requests coming from clients, for example, you could add another LuciadFusion instance, and add a proxy rule that sends all /ogc/wms/ traffic to that node.
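A minimal sketch of such a rule is shown here. It assumes a hypothetical third instance listening on port 8083, which isn't part of the example setup. Apache checks ProxyPass rules in configuration order and uses the first match, so the more specific /ogc/wms rule has to come before the general /ogc rule.

```apache
# Hypothetical third instance on port 8083, dedicated to WMS traffic.
# Keep these rules above the general /ogc rules: the first matching
# ProxyPass rule wins.
ProxyPass /ogc/wms http://localhost:8083/ogc/wms
ProxyPassReverse /ogc/wms http://localhost:8083/ogc/wms

# All other OGC services stay on node1.
ProxyPass /ogc http://localhost:8081/ogc
ProxyPassReverse /ogc http://localhost:8081/ogc
```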

ProxyPass /lts http://localhost:8081/lts

ProxyPassReverse /lts http://localhost:8081/lts

ProxyPass /file http://localhost:8081/file

ProxyPassReverse /file http://localhost:8081/file

ProxyPass /ogc http://localhost:8081/ogc

ProxyPassReverse /ogc http://localhost:8081/ogc

ProxyPass /panoramic http://localhost:8081/panoramic

ProxyPassReverse /panoramic http://localhost:8081/panoramic

ProxyPass /hspc http://localhost:8081/hspc

ProxyPassReverse /hspc http://localhost:8081/hspc

ProxyPass /mbtiles http://localhost:8081/mbtiles

ProxyPassReverse /mbtiles http://localhost:8081/mbtiles

ProxyPass /schemas http://localhost:8081/schemas

ProxyPassReverse /schemas http://localhost:8081/schemas

ProxyPass /actuators http://localhost:8082/actuators

ProxyPassReverse /actuators http://localhost:8082/actuators

ProxyPass /login http://localhost:8082/login

ProxyPassReverse /login http://localhost:8082/login

ProxyPass /api http://localhost:8082/api

ProxyPassReverse /api http://localhost:8082/api

ProxyPass /studio/service/notifications ws://localhost:8082/studio/service/notifications

ProxyPassReverse /studio/service/notifications ws://localhost:8082/studio/service/notifications

ProxyPass /studio http://localhost:8082/studio

ProxyPassReverse /studio http://localhost:8082/studio

ProxyPass / http://localhost:8082/studio

ProxyPassReverse / http://localhost:8082/studio

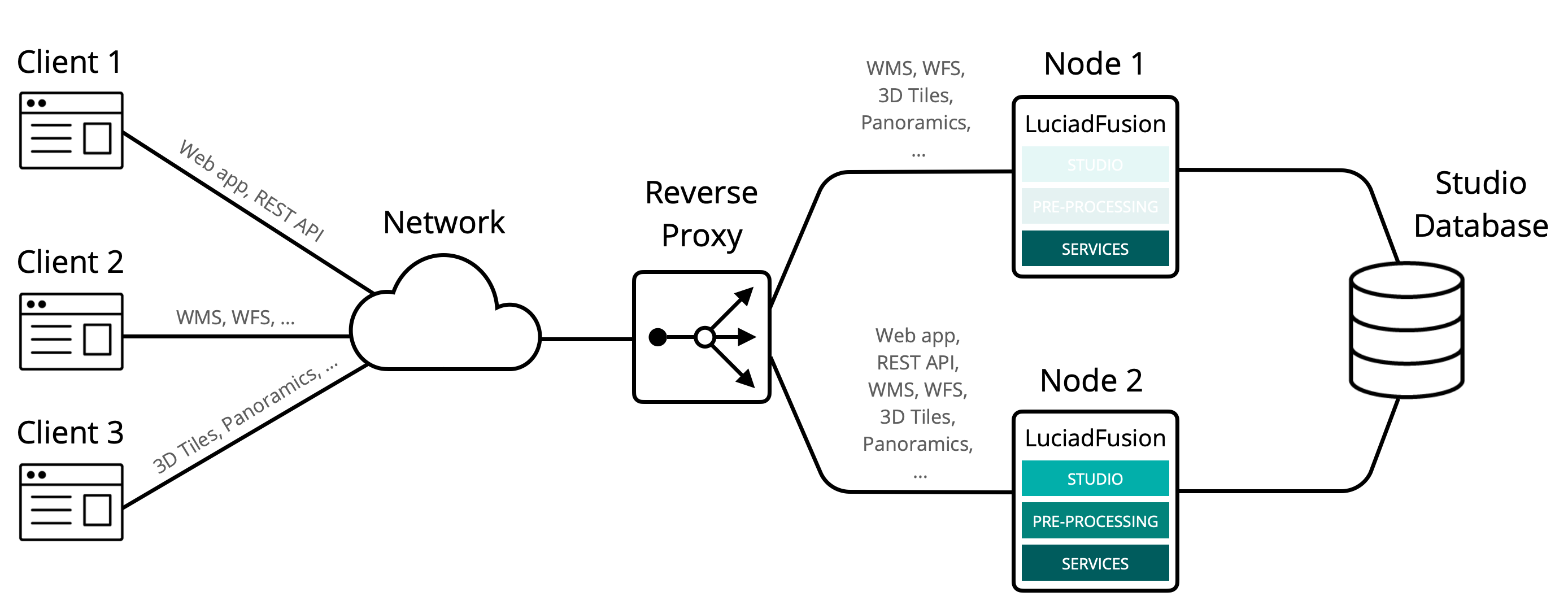

Load balance services

To support LuciadFusion performance under heavy loads, you can load balance the LuciadFusion services.

In this scenario, we configure the reverse proxy to balance between the two instances of our example setup for services requests.

We create a group like in the failover scenario, but instead of marking one node as a standby node, we instruct the reverse proxy to use both nodes at the same time and balance requests between them.

This load balance configuration shows how you can configure the Apache HTTP Server for load balancing services in the example setup.

We chose to balance loads between the two instances based on the number of requests, but Apache also offers other methods.

See https://httpd.apache.org/docs/2.4/mod/mod_proxy_balancer.html for more information. Note that each method is a module that you must enable in the Apache configuration, for example the module mod_lbmethod_byrequests for the byrequests method.
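To illustrate switching methods, the sketch below selects the bybusyness method, which prefers the member with the fewest active requests. The choice of method and the modules/ path are assumptions for illustration only. The example setup in this article uses byrequests.

```apache
# Sketch only: enable the module that provides the chosen method.
LoadModule lbmethod_bybusyness_module modules/mod_lbmethod_bybusyness.so

<Proxy balancer://fusionset>
    BalancerMember http://localhost:8081
    BalancerMember http://localhost:8082
    # Select the balancing method for this group
    ProxySet lbmethod=bybusyness
</Proxy>
```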

Also note that we only balance services requests, because we must handle all Studio traffic on a single node. See guideline 1.

After including this configuration and restarting Apache, you can load the example_wms service in a WMS client. The requests will be balanced between node1 and node2.

If one of these two nodes goes down, the reverse proxy will send all traffic to the remaining node.
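How quickly a recovered node rejoins the group depends on the retry parameter of each member. The sketch below sets it to 30 seconds as an illustrative value, not a recommendation. If you omit the parameter, Apache waits 60 seconds before it tries a failed member again.

```apache
<Proxy balancer://fusionset>
    # retry=30 is an illustrative value: after a failure, Apache waits
    # 30 seconds before it sends requests to that member again.
    BalancerMember http://localhost:8081 retry=30
    BalancerMember http://localhost:8082 retry=30
    ProxySet lbmethod=byrequests
</Proxy>
```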

<Proxy balancer://fusionset>

BalancerMember http://localhost:8081

BalancerMember http://localhost:8082

ProxySet lbmethod=byrequests

</Proxy>

ProxyPass /lts balancer://fusionset/lts

ProxyPassReverse /lts balancer://fusionset/lts

ProxyPass /file balancer://fusionset/file

ProxyPassReverse /file balancer://fusionset/file

ProxyPass /ogc balancer://fusionset/ogc

ProxyPassReverse /ogc balancer://fusionset/ogc

ProxyPass /panoramic balancer://fusionset/panoramic

ProxyPassReverse /panoramic balancer://fusionset/panoramic

ProxyPass /hspc balancer://fusionset/hspc

ProxyPassReverse /hspc balancer://fusionset/hspc

ProxyPass /mbtiles balancer://fusionset/mbtiles

ProxyPassReverse /mbtiles balancer://fusionset/mbtiles

ProxyPass /schemas balancer://fusionset/schemas

ProxyPassReverse /schemas balancer://fusionset/schemas

ProxyPass /actuators http://localhost:8082/actuators

ProxyPassReverse /actuators http://localhost:8082/actuators

ProxyPass /login http://localhost:8082/login

ProxyPassReverse /login http://localhost:8082/login

ProxyPass /api http://localhost:8082/api

ProxyPassReverse /api http://localhost:8082/api

ProxyPass /studio/service/notifications ws://localhost:8082/studio/service/notifications

ProxyPassReverse /studio/service/notifications ws://localhost:8082/studio/service/notifications

ProxyPass /studio http://localhost:8082/studio

ProxyPassReverse /studio http://localhost:8082/studio

ProxyPass / http://localhost:8082/studio

ProxyPassReverse / http://localhost:8082/studio

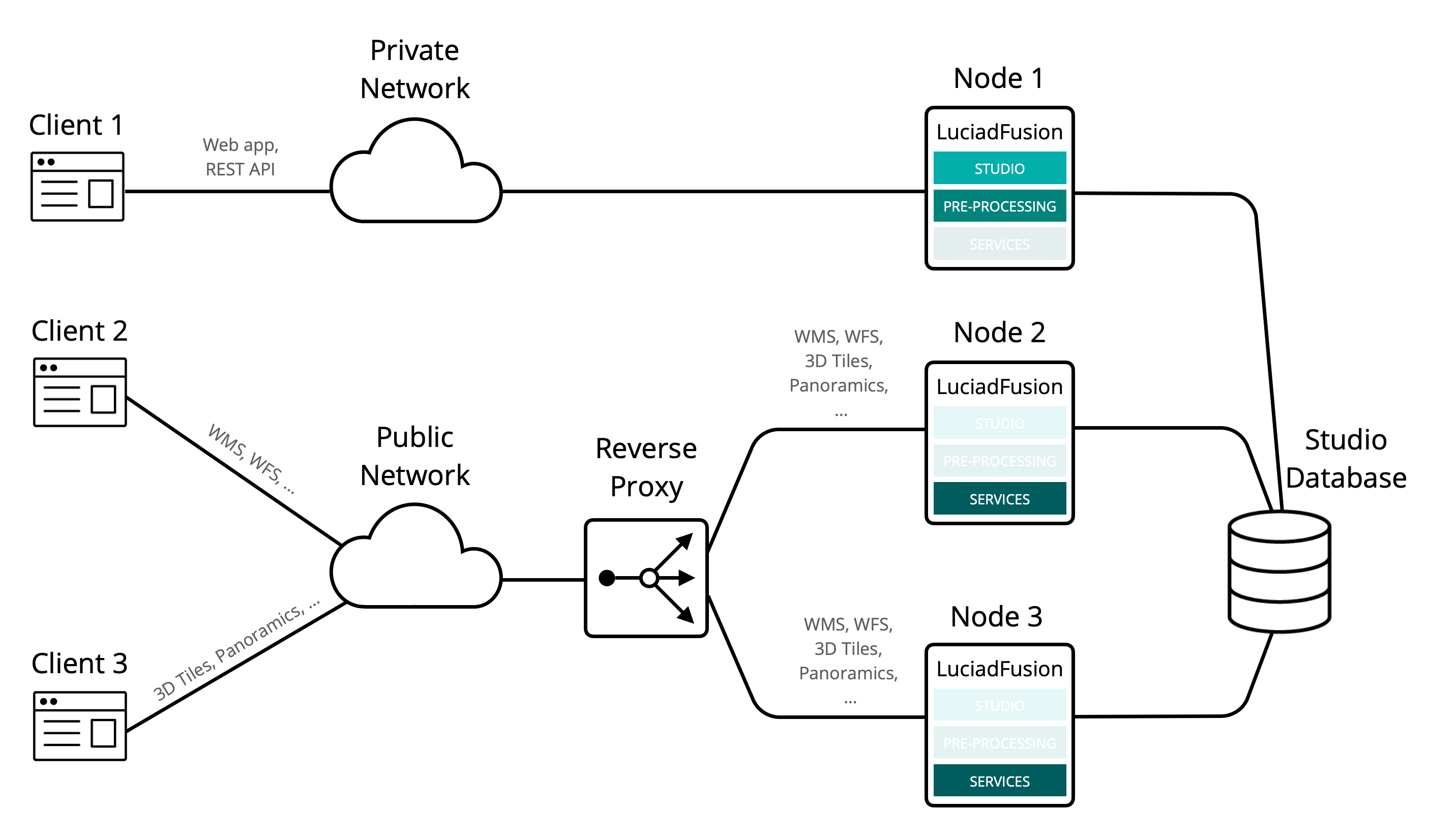

Combining the scenarios in production

Figure 4, “Example of a LuciadFusion multi-node production setup” shows how you could combine the configurations of the three discussed scenarios in a production setup.

Node 1 is accessible to the data managers or integrated applications that use the REST API. Regular clients that just need to consume services can’t access this node.

We placed the two other nodes, Node 2 and Node 3, behind a reverse proxy that balances services requests between the nodes. Anyone can access the reverse proxy.

You can extend this setup by adding failover nodes or more services nodes.
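As a rough sketch of the public reverse proxy in such a setup, the configuration below balances services requests between Node 2 and Node 3 and adds an optional standby node. All host names, the port, and the fourth node are hypothetical. The sketch defines no rules for /studio, /api, or /login, so this proxy doesn't forward Studio traffic, and Node 1 is reached separately by data managers.

```apache
# Sketch only: host names and ports are hypothetical.
<Proxy balancer://servicesset>
    BalancerMember http://node2.example.internal:8081
    BalancerMember http://node3.example.internal:8081
    # Optional standby node, used only when the other members are unavailable
    BalancerMember http://node4.example.internal:8081 status=+H
    ProxySet lbmethod=byrequests
</Proxy>

# Forward service endpoints only.
ProxyPass /ogc balancer://servicesset/ogc
ProxyPassReverse /ogc balancer://servicesset/ogc
ProxyPass /lts balancer://servicesset/lts
ProxyPassReverse /lts balancer://servicesset/lts
ProxyPass /file balancer://servicesset/file
ProxyPassReverse /file balancer://servicesset/file
```

You would add the remaining service endpoints, such as /panoramic, /hspc, /mbtiles, and /schemas, in the same way.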