The section Introducing a new shape: the hippodrome introduces a new shape, the hippodrome, and a set of requirements on how the shape should be handled. The sections The ILcdGXYPainter interface explained through Snapping explained focus on the interfaces ILcdGXYPainter and ILcdGXYEditor. Finally, the article demonstrates how the requirements are met by implementing the interfaces ILcdGXYPainter and ILcdGXYEditor for the hippodrome shape.

Note that the hippodrome can be modeled using predefined shapes, such as an

Introducing a new shape: the hippodrome

IHippodrome, definition of a new shape

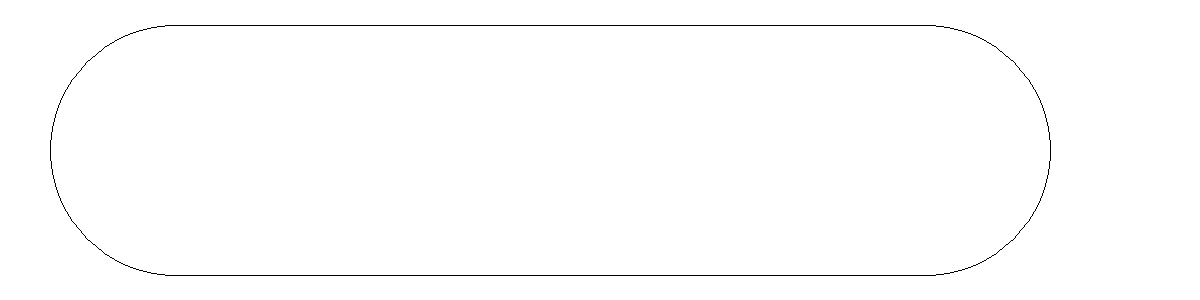

Suppose a new shape should be displayed on the map with the following requirement: it consists of two half circles connected by two lines. In other words, it should look like Figure 1, “Hippodrome graphical specification”.

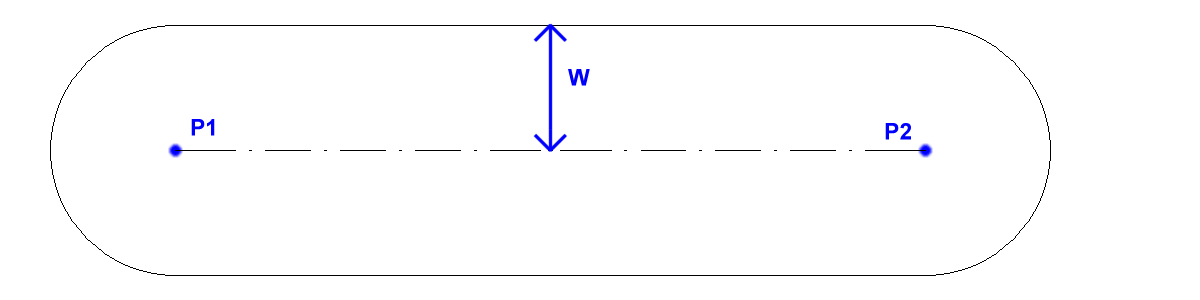

Three basic elements of the shape can be derived: the two points around which the path turns and the radius of the turn around those points. Since the radius of the arcs is equal to the width of the hippodrome, it is referred to as the width.

LonLatHippodrome and XYHippodrome demonstrates that this is not necessarily true.

The IHippodrome thus contains three methods which define the shape:

- getWidth returns the width of the hippodrome, W in Figure 2, “Defining elements of an hippodrome”
- getStartPoint returns one of the turning points, P1 in Figure 2, “Defining elements of an hippodrome”
- getEndPoint returns the other turning point, P2 in Figure 2, “Defining elements of an hippodrome”

IHippodrome extends ILcdShape. While this is not required, it allows you to take full advantage of the API. For example, an IHippodrome can then be stored in a TLcd2DBoundsIndexedModel instead of in a TLcdVectorModel.

ILcdShape contains methods to return the bounds of an object and to check if a point or a location is contained in the object. Since the hippodrome has quite a simple geometry, implementing these methods is straightforward.
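Because the geometry is this simple, both computations take only a few lines in the Cartesian case. The sketch below assumes a hippodrome on a plane, as for an XYHippodrome, and uses a hypothetical class SimpleXYHippodrome with java.awt.geom types rather than the actual LuciadLightspeed ILcdShape signatures. A point lies inside when its distance to the axis segment from P1 to P2 is at most the arc radius W, and the bounds are the bounds of that segment grown by W on every side.

```java
import java.awt.geom.Line2D;
import java.awt.geom.Rectangle2D;

/** Hypothetical Cartesian hippodrome: axis from (x1, y1) to (x2, y2), arc radius "width". */
class SimpleXYHippodrome {
  final double x1, y1, x2, y2, width;

  SimpleXYHippodrome(double x1, double y1, double x2, double y2, double width) {
    this.x1 = x1; this.y1 = y1; this.x2 = x2; this.y2 = y2; this.width = width;
  }

  /** Exact axis-aligned bounds: the bounds of the axis, grown by the radius on every side. */
  Rectangle2D bounds() {
    double minX = Math.min(x1, x2) - width;
    double minY = Math.min(y1, y2) - width;
    double maxX = Math.max(x1, x2) + width;
    double maxY = Math.max(y1, y2) + width;
    return new Rectangle2D.Double(minX, minY, maxX - minX, maxY - minY);
  }

  /** A point is inside when it lies within "width" of the axis segment. */
  boolean contains2D(double x, double y) {
    return Line2D.ptSegDist(x1, y1, x2, y2, x, y) <= width;
  }
}
```

For a hippodrome from (0, 0) to (10, 0) with width 2, the bounds run from (-2, -2) to (12, 2); the point (11, 1.5) is inside (it lies within the end arc), while (11.5, 1.5) is not.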

LonLatHippodrome and XYHippodrome

IHippodrome does not define how the points and the width should be interpreted. This is defined by the implementations: LonLatHippodrome for geodetic reference systems and XYHippodrome for grid reference systems.

The major difference is the path between the start point and the end point. For an XYHippodrome, the path between the start point and the end point is a straight line on a Cartesian plane. For a LonLatHippodrome, the path between the start point and the end point lies on an ellipsoid and is therefore a geodesic line. The consequences are:

- For a LonLatHippodrome, the angle from the start point to the end point and the angle from the end point to the start point are not simply opposite: in general, they do not differ by exactly 180 degrees.
- Since the angles are different, calculating the location of the points at the ends of the arcs requires different computations for LonLatHippodrome and XYHippodrome.
- A LonLatHippodrome is situated on the ellipsoid. When the arcs are connected with geodesic lines on the ellipsoid, the width of the IHippodrome will not be constant along these lines, and the connection of the arc with the line is not C1 continuous.
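The first consequence can be checked numerically. As a simplification, this sketch uses the spherical initial-bearing formula instead of the ellipsoidal geodesic computations a LonLatHippodrome would rely on; the class Azimuths is hypothetical. Azimuths are in degrees, clockwise from north. On the plane the two azimuths always differ by exactly 180 degrees, whereas on the sphere they generally do not.

```java
/** Azimuths in degrees, measured clockwise from north, normalized to [0, 360). */
class Azimuths {
  /** Cartesian azimuth from (x1, y1) to (x2, y2), with +y pointing north. */
  static double planar(double x1, double y1, double x2, double y2) {
    double deg = Math.toDegrees(Math.atan2(x2 - x1, y2 - y1));
    return (deg + 360.0) % 360.0;
  }

  /** Initial great-circle bearing from (lon1, lat1) to (lon2, lat2) on a sphere. */
  static double spherical(double lon1, double lat1, double lon2, double lat2) {
    double phi1 = Math.toRadians(lat1), phi2 = Math.toRadians(lat2);
    double dLambda = Math.toRadians(lon2 - lon1);
    double y = Math.sin(dLambda) * Math.cos(phi2);
    double x = Math.cos(phi1) * Math.sin(phi2)
        - Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLambda);
    return (Math.toDegrees(Math.atan2(y, x)) + 360.0) % 360.0;
  }
}
```

For two points at 50 degrees north that are 10 degrees of longitude apart, the forward azimuth is roughly 86 degrees and the backward azimuth roughly 274 degrees: a difference of about 187.7 degrees instead of 180.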

The classes described here are only provided as a sample. If you need a hippodrome implementation that does not have the limitations described above, see

Including the methods to retrieve the angles and the contour points in the interface hides these differences and reduces the complexity of the ILcdGXYPainter and ILcdGXYEditor. The interface IHippodrome therefore also has the following methods:

- getContourPoint returns the point at the start or the end of an arc.
- getStartEndAzimuth returns the azimuth, or angle of the line from the start point to the end point, at the start point.
- getEndStartAzimuth returns the angle of the line from the end point to the start point, at the end point.

These methods return elements which can be derived from the three defining elements. Making them available in the interface hides the complexity of computing their values. Adding these methods also facilitates caching these values, which only need to be recomputed when one of the three defining elements changes.
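A minimal Cartesian sketch of that caching idea, using a hypothetical class CachingXYHippodrome. It assumes that the "upper" contour points lie to the left of the axis when looking from the start point to the end point; this convention belongs to the example and is not necessarily the one IHippodrome uses. The contour points are computed lazily, every change to a defining element clears the cache, and the axis is assumed to have a non-zero length.

```java
/** Hypothetical Cartesian hippodrome that caches its derived contour points. */
class CachingXYHippodrome {
  private double x1, y1, x2, y2, width;
  // {startUpperX, startUpperY, endUpperX, endUpperY, endLowerX, endLowerY, startLowerX, startLowerY},
  // or null when the cache is invalid.
  private double[] cachedContour;
  int recomputations; // counts cache misses, for illustration only

  CachingXYHippodrome(double x1, double y1, double x2, double y2, double width) {
    this.x1 = x1; this.y1 = y1; this.x2 = x2; this.y2 = y2; this.width = width;
  }

  // Changing any defining element invalidates the derived values.
  void moveStartPoint(double x, double y) { x1 = x; y1 = y; cachedContour = null; }
  void moveEndPoint(double x, double y)   { x2 = x; y2 = y; cachedContour = null; }
  void setWidth(double w)                 { width = w; cachedContour = null; }

  /** Returns {x, y} of contour point i: 0 start-upper, 1 end-upper, 2 end-lower, 3 start-lower. */
  double[] contourPoint(int i) {
    if (cachedContour == null) {
      recomputations++;
      double dx = x2 - x1, dy = y2 - y1, len = Math.hypot(dx, dy);
      // Unit normal pointing to the left of the axis, scaled by the arc radius.
      double nx = -dy / len * width, ny = dx / len * width;
      cachedContour = new double[] {
          x1 + nx, y1 + ny, x2 + nx, y2 + ny, x2 - nx, y2 - ny, x1 - nx, y1 - ny};
    }
    return new double[] {cachedContour[2 * i], cachedContour[2 * i + 1]};
  }
}
```

Repeated calls to contourPoint reuse the cached array; only a call to moveStartPoint, moveEndPoint or setWidth triggers a new computation.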

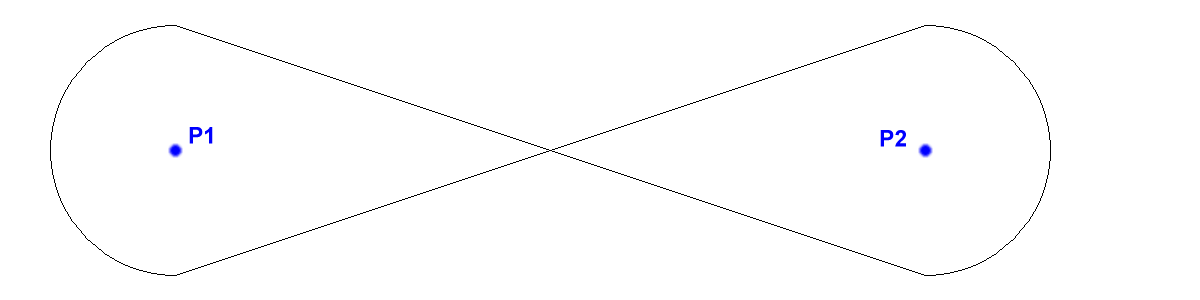

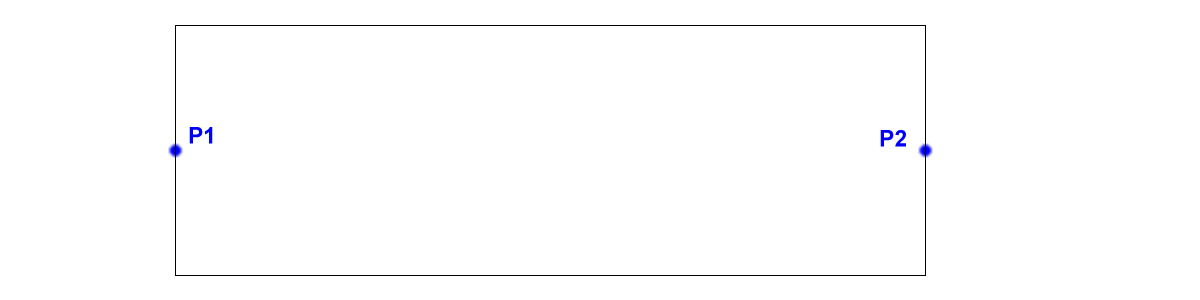

Representations of shapes

Although the requirements above lead to the three elements constituting the IHippodrome shape, these elements could also be rendered differently, for example, see Figure 3, “Alternative rendering for an IHippodrome” and Figure 4, “Alternative rendering for an IHippodrome”. The implementation of the ILcdGXYPainter for the IHippodrome defines how the object is rendered.

Creating an IHippodrome

Besides visualizing an IHippodrome, it should also be possible to create an IHippodrome graphically. The IHippodrome should be created in three steps, which determine:

- the location of the first point
- the location of the second point
- the width

After the location of the first point has been specified, for example by a mouse click or by touch input, a line should be drawn between the first point and the location of the input pointer, such as the mouse pointer. The line indicates where the axis of the IHippodrome is located. Once the second point has been specified, an IHippodrome should be displayed. Its width is such that the outline of the hippodrome touches the input pointer.

To allow setting the location of the points and setting the width of the IHippodrome, the interface has two methods:

- moveReferencePoint moves one of the turning points
- setWidth changes the width of the hippodrome
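In the Cartesian case, the width for which the hippodrome touches the input pointer is simply the distance from the pointer to the axis segment. The sketch below models the three creation steps with a hypothetical HippodromeCreation class; in LuciadLightspeed this work is divided between the controller, the painter (for the preview) and the editor (for the actual change), so the class only illustrates the sequence.

```java
import java.awt.geom.Line2D;

/** Hypothetical three-step creation of a Cartesian hippodrome from input points. */
class HippodromeCreation {
  double x1, y1, x2, y2, width;
  int step; // number of completed steps: 0, 1, 2 or 3

  /** Handles a click (or touch) at (x, y); returns true once the shape is complete. */
  boolean click(double x, double y) {
    switch (step) {
      case 0: x1 = x; y1 = y; x2 = x; y2 = y; break; // first turning point
      case 1: x2 = x; y2 = y; break;                 // second turning point
      case 2: width = widthTouching(x, y); break;    // width
      default: throw new IllegalStateException("already created");
    }
    step++;
    return step == 3;
  }

  /** Preview while the pointer moves: the axis end point, then the width, follow the pointer. */
  void pointerMoved(double x, double y) {
    if (step == 1) {
      x2 = x; y2 = y;
    } else if (step == 2) {
      width = widthTouching(x, y);
    }
  }

  /** The width for which the outline passes through (x, y). */
  double widthTouching(double x, double y) {
    return Line2D.ptSegDist(x1, y1, x2, y2, x, y);
  }
}
```

Clicking (0, 0) and (10, 0) fixes the axis; moving the pointer to (4, 3) previews a width of 3, and a final click at (12, 0) fixes the width at 2 because that point lies on the end arc.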

Editing an IHippodrome

It should be possible to edit an IHippodrome, that is, to change the values of one or more of its defining elements. Fulfilling the creation requirements already provides methods to do this. On top of that, it should be possible to move the IHippodrome as a whole. This requirement is covered by making IHippodrome extend ILcd2DEditableShape.

Snapping to/of an IHippodrome

Creating or editing an IHippodrome graphically is not very accurate, since the accuracy is limited by the distance on the map that corresponds to one pixel on the screen. Although that distance can be calculated very accurately, the location of, for example, the first point of the IHippodrome can only be changed in steps of that distance. Therefore two requirements are posed:

- It should be possible to create or edit an IHippodrome so that its start point or end point coincides with a point of another shape on the map.
- Conversely, it should be possible for another shape, when it is being edited or created, to have one or more of its points coincide with the start point or the end point of the IHippodrome.

The process of making the points coincide is called snapping. No methods need to be added to the IHippodrome to comply with this requirement, since moving the points of the IHippodrome is already possible. It is up to the actor who controls the move to ensure that the move results in coincident points.
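Snapping therefore lives entirely in the code that controls the move. A minimal sketch of the core idea, with a hypothetical Snapper class and a tolerance chosen by the caller: find the candidate point closest to the input location and, if it lies within the tolerance, use its exact coordinates instead of the coordinates derived from the pixel position. For brevity the sketch uses a single coordinate space; in a view, the tolerance would be checked in view coordinates while the exact model coordinates of the target are copied.

```java
import java.awt.geom.Point2D;
import java.util.List;

/** Hypothetical snapping helper: picks the closest candidate within a tolerance. */
class Snapper {
  private final double tolerance;

  Snapper(double tolerance) { this.tolerance = tolerance; }

  /** Returns the candidate closest to the input point within the tolerance, or null. */
  Point2D findSnapTarget(Point2D input, List<Point2D> candidates) {
    Point2D best = null;
    double bestDistance = tolerance;
    for (Point2D candidate : candidates) {
      double d = candidate.distance(input);
      if (d <= bestDistance) {
        best = candidate;
        bestDistance = d;
      }
    }
    return best;
  }
}
```

The caller then moves the point being edited to the returned target's coordinates, so both shapes hold exactly the same location while remaining separate objects.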

The ILcdGXYPainter interface explained

Painting and editing domain objects in a GXY view explains how a layer is rendered by selecting the objects that need to be painted, retrieving the painter for those objects one by one, and instructing the painter to render the object in the view. The painter may be instructed to paint the object differently depending on the circumstances: for example, an object may have been selected, or it may be ready to be edited. These circumstances are expressed in the painter modes. These modes pertain to all ILcdGXYPainter implementations and should not be confused with style modes, which pertain to single ILcdGXYPainter implementations, like TLcdGXYPointListPainter.POLYGON.

ILcdGXYPainter modes

The interface ILcdGXYPainter defines eight painter modes which can be divided into three groups depending on their semantics:

Status of the object in the view

- BODY: the basic mode, indicates that the body of the object should be painted.
- HANDLES: indicates that the handles of the object should be painted. The handles are marker points on the representation of an object. They can be used for editing.
- SNAPS: indicates that targets for snapping should be highlighted. Snapping is a mechanism to ensure that multiple shapes have points at the exact same location. This mechanism is further explained in Snapping explained.

Selection status of the object in a layer

Depending on the selection status of an object in a layer it is rendered differently.

- DEFAULT: the default, not selected state.
- SELECTED: indicates that the object should be rendered as selected. This usually implies some kind of highlighting.

User interaction

Through the TLcdGXYNewController2 and the TLcdGXYEditController2, the user can interact with the objects in a model. The former creates a new object, the latter edits an existing one.

A TLcdGXYEditController2 calls an ILcdGXYPainter with the following modes while in the process of editing a shape, so that the user gets visual feedback about the execution of the editing operation:

- TRANSLATING: the first editing mode. This mode is typically used for repositioning a shape as a whole.
- RESHAPING: the second editing mode. This mode is typically used when a part of the shape is being adapted.

While the names of the editing modes, TRANSLATING and RESHAPING, indicate what action could be undertaken on the object being edited, they should not be interpreted as exact definitions but rather as guidelines on how the object should be edited. An example can clarify this: when translating one ILcdPoint in an ILcdPointList, the TLcdGXYEditController2 calls the TLcdGXYPointListPainter with the TRANSLATING mode. However, one could also argue that the shape as a whole is being reshaped.

The TLcdGXYNewController2 calls an ILcdGXYPainter with the CREATING mode when a shape is in the process of being created:

- CREATING: creation mode. While creating a new shape, the user should see the current status of the shape.
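The three groups of modes describe independent aspects, so a single paint call can concern, for example, the body of a selected object that is being translated. Assuming the modes are passed as one int in which several flags are combined, a painter can test each aspect with a bitwise AND. The constant values in this sketch are hypothetical; real code should always use the ILcdGXYPainter constants rather than literal numbers.

```java
/** Hypothetical bit flags mirroring the eight ILcdGXYPainter modes (values are illustrative only). */
final class PainterModes {
  static final int BODY = 1, HANDLES = 2, SNAPS = 4;                  // status in the view
  static final int DEFAULT = 8, SELECTED = 16;                         // selection status
  static final int TRANSLATING = 32, RESHAPING = 64, CREATING = 128;  // user interaction

  private static final String[] NAMES = {
      "BODY", "HANDLES", "SNAPS", "DEFAULT", "SELECTED", "TRANSLATING", "RESHAPING", "CREATING"};

  private PainterModes() {}

  /** True when the given flag is part of the combined mode. */
  static boolean has(int mode, int flag) {
    return (mode & flag) != 0;
  }

  /** Describes a combined mode, for example "BODY | SELECTED | TRANSLATING". */
  static String describe(int mode) {
    StringBuilder sb = new StringBuilder();
    for (int i = 0; i < NAMES.length; i++) {
      if (has(mode, 1 << i)) { // NAMES[i] corresponds to the flag value 1 << i
        if (sb.length() > 0) sb.append(" | ");
        sb.append(NAMES[i]);
      }
    }
    return sb.toString();
  }
}
```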

ILcdGXYPainter methods

The ILcdGXYContext parameter

ILcdGXYContext is a utility interface that holds all information necessary to render an object. It is passed to methods that require this information, such as paint, boundsSFCT and isTouched. It is also passed to some ILcdGXYEditor methods.

It contains:

- The ILcdGXYView where the drawing or editing occurs. This can be helpful, for example, to retrieve the scale: some objects might be painted differently depending on the scale.
- The ILcdGXYLayer, and therefore the ILcdModel, that the object to be painted belongs to. From the ILcdModel, you can retrieve the model’s reference, which might be useful when not all operations can be abstracted into the shape’s interface. Calculating the distance (in meters) of a point to the axis of an IHippodrome is an example of such an operation: it depends on the model reference the IHippodrome is defined in, though it can hardly be regarded as functionality that should be part of IHippodrome as a shape.
- The Graphics on which to perform the rendering.
- The ILcdModelXYWorldTransformation and the ILcdGXYViewXYWorldTransformation. Both transformations are required to determine the exact location of the mouse in model coordinates, or to define the location in the ILcdGXYView component where an object should be drawn. For a detailed explanation, refer to Transformations between coordinate systems.
- The current location of the mouse and its translation (for example, a mouse drag) in view coordinates, when this information is required. This is useful to interpret the user’s last interaction.
- Snapping information: the snap target and the layer the snap target belongs to. This is explained in Snapping explained.
- An ILcdGXYPen to support drawing and editing on a Graphics. The pen provides an abstraction of low-level rendering operations, which hides some of the complexities that depend on model references.

TLcdGXYContext, the main implementation of ILcdGXYContext, provides support for multiple input points and multiple snapping targets. This is used, for example, when handling multi-touch input.

paint

This method is responsible for rendering the object in the view in all possible modes. This includes painting the object while the user is interacting with it using controllers; in that case, controllers typically use the ILcdGXYPainter to render a preview of what the result of the interaction would be. Only at the end of the user interaction (usually when the mouse is released, or when a touch point is removed) is the ILcdGXYEditor called to perform the actual editing on the object. This usually implies a tight coupling with the ILcdGXYEditor, which is the main reason why most ILcdGXYPainter implementations in LuciadLightspeed also implement ILcdGXYEditor.

boundsSFCT

boundsSFCT adapts a given bounds object so that it reflects the bounds of the shape when it would be rendered given the ILcdGXYContext passed. This is called the view bounds of the object. The view bounds' coordinates are view coordinates, expressed in pixels.

boundsSFCT can be called when checking if an object should be painted. For performance reasons, an ILcdGXYView paints as few objects as possible. Depending on the model contained in a layer, two mechanisms exist to select the objects that should be painted:

- When an ILcdGXYLayer has an ILcd2DBoundsIndexedModel, its objects are required to be ILcdBounded. By transforming the ILcdGXYView boundary, the view bounds, to model coordinates, it is straightforward to check whether an object will be visible in the ILcdGXYView or not.
- When the ILcdGXYLayer contains another type of ILcdModel, this mechanism cannot be applied. The ILcdGXYView then relies on the ILcdGXYPainter for an object to provide reliable view bounds of the object. These bounds are then compared to the view’s view bounds, which amounts to comparing them to the view’s size.

The latter mechanism implies that this method could be called frequently, for every paint for every object in the

ILcdModel, and that its implementation should be balanced between accuracy and speed. An accurate implementation ensures that the object

will not be painted if it is outside the ILcdGXYView boundary. If the shape is complex, however, it might be costly to compute the object’s view bounds accurately and it may

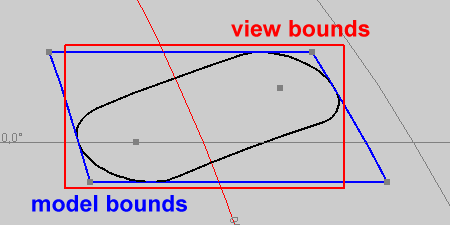

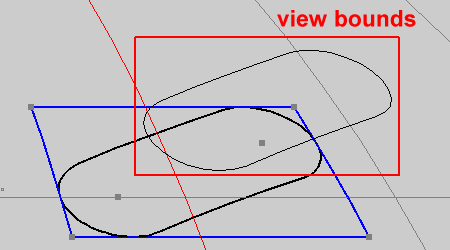

be more economic to have a rough estimate of the object’s view bounds. Figure 5, “Model bounds and view bounds of an IHippodrome” illustrates a view bounds and the model bounds of an IHippodrome.

The object’s view bounds also depend on the ILcdGXYPainter mode passed: boundsSFCT should, for example, take into account user interaction with the shape. Figure 6, “Model bounds and view bounds of a translated IHippodrome” illustrates how the view bounds change when the shape is being moved. Remember that the ILcdGXYPainter only depicts a preview of the result of the user interaction. The view bounds returned by the ILcdGXYPainter are the bounds of the object rendered as if the user interaction had already taken place. The object’s model bounds do not change, as the object itself is not changed.
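Both ideas can be sketched with java.awt.geom: the view bounds are the bounding rectangle of the shape's outline in pixel coordinates, the visibility test compares them with the view's size, and while a translation is being previewed the bounds are shifted by the current pointer displacement. The class ViewBounds and its methods are hypothetical; an actual boundsSFCT implementation writes its result into the ILcd2DEditableBounds it receives.

```java
import java.awt.Rectangle;
import java.awt.Shape;
import java.awt.geom.AffineTransform;

/** Hypothetical helpers around the view bounds of an outline given in view coordinates. */
class ViewBounds {
  /** View bounds of the outline, shifted by (dx, dy) pixels when a translation is being previewed. */
  static Rectangle viewBounds(Shape outlineInView, boolean translating, int dx, int dy) {
    Shape previewed = translating
        ? AffineTransform.getTranslateInstance(dx, dy).createTransformedShape(outlineInView)
        : outlineInView;
    return previewed.getBounds();
  }

  /** An object only needs to be painted when its view bounds intersect the view area. */
  static boolean isVisible(Rectangle viewBounds, int viewWidth, int viewHeight) {
    return viewBounds.intersects(new Rectangle(0, 0, viewWidth, viewHeight));
  }
}
```

As in Figure 6, only the preview bounds move: the object itself, and therefore its model bounds, stay where they are until the editor applies the change.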

isTouched

This method checks if the object’s representation is touched by the input. The input location and displacement typically need to be taken into account; they can be retrieved from the ILcdGXYContext passed. User interaction often needs to be interpreted locally, that is, on the object that is touched by the mouse, so this method is generally implemented with greater accuracy than the boundsSFCT method. For performance reasons, it may start with a call to the boundsSFCT method: if the input point is not within the bounds of the object’s representation, it is certainly not touching the object. Though this method does not specify which part of the shape is being touched, that information may be made public in implementation-specific methods.
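The two-stage test described above can be sketched in plain Java 2D, assuming the representation is a closed polyline already expressed in view coordinates and that touching means being within a pixel tolerance of the outline. A cheap rejection based on the bounds grown by the tolerance comes first, followed by an exact distance check against each edge. The class TouchTest is hypothetical; a filled rendering might also want to accept points inside the polygon.

```java
import java.awt.Polygon;
import java.awt.Rectangle;
import java.awt.geom.Line2D;

/** Hypothetical touch test for a closed polyline given in view (pixel) coordinates. */
class TouchTest {
  static boolean isTouched(int[] xs, int[] ys, int px, int py, int tolerance) {
    // Stage 1: cheap rejection using the bounds grown by the tolerance.
    Rectangle bounds = new Polygon(xs, ys, xs.length).getBounds();
    bounds.grow(tolerance, tolerance);
    if (!bounds.contains(px, py)) {
      return false;
    }
    // Stage 2: accurate check, distance from the input point to every edge.
    for (int i = 0; i < xs.length; i++) {
      int j = (i + 1) % xs.length; // wraps around to close the outline
      if (Line2D.ptSegDist(xs[i], ys[i], xs[j], ys[j], px, py) <= tolerance) {
        return true;
      }
    }
    return false;
  }
}
```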

The ILcdGXYEditor interface explained

The ILcdGXYEditor executes in the model space what the ILcdGXYPainter displays in the view space. As the ILcdGXYEditor is called by the TLcdGXYEditController2 and the TLcdGXYNewController2 to carry out the user interaction on the shape, rather than to preview it, it is less complex than the ILcdGXYPainter interface.

ILcdGXYEditor modes

Analogously to the ILcdGXYPainter, the ILcdGXYEditor defines a number of modes. Depending on the mode, the ILcdGXYEditor should react differently to user interaction. Since the ILcdGXYPainter provides a preview of what the ILcdGXYEditor will do, the modes are closely linked to the ILcdGXYPainter modes.

Creation modes

Since the creation process can be complex and additional interaction may be required at the beginning or at the end of the creation process, three creation modes are provided:

- START_CREATION
- CREATING
- END_CREATION

Editing modes

Two editing modes are provided, the counterparts of the ILcdGXYPainter modes RESHAPING and TRANSLATING:

- RESHAPED
- TRANSLATED

The names of the ILcdGXYEditor modes indicate that the ILcdGXYEditor is responsible for processing the result of the user interaction, whereas the ILcdGXYPainter is responsible while the user interaction is taking place.

ILcdGXYEditor methods

edit

This method carries out the change on the shape requested by the user. It retrieves the information about the user interaction (the input position and displacement) from the ILcdGXYContext, and the intention of the change by interpreting the mode. To ensure an intuitive user experience, the result of the change should be a shape that resembles the one rendered by the corresponding ILcdGXYPainter during the user interaction.
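For the hippodrome, edit could interpret the two editing modes as follows: in TRANSLATED mode the whole shape moves by the input displacement, as translating an ILcd2DEditableShape would, and in RESHAPED mode only the turning point nearest to where the interaction started moves. This is a minimal Cartesian sketch with hypothetical names; a real editor reads the location and displacement from the ILcdGXYContext and works in model coordinates.

```java
/** Hypothetical editor logic for a Cartesian hippodrome. */
class HippodromeEditSketch {
  enum EditMode { TRANSLATED, RESHAPED }

  double x1, y1, x2, y2, width;

  HippodromeEditSketch(double x1, double y1, double x2, double y2, double width) {
    this.x1 = x1; this.y1 = y1; this.x2 = x2; this.y2 = y2; this.width = width;
  }

  /**
   * Applies a finished interaction: the input started at (fromX, fromY)
   * and was displaced by (dx, dy). Returns true if the shape changed.
   */
  boolean edit(EditMode mode, double fromX, double fromY, double dx, double dy) {
    switch (mode) {
      case TRANSLATED:
        // Move both turning points; the width is unaffected.
        x1 += dx; y1 += dy; x2 += dx; y2 += dy;
        return true;
      case RESHAPED:
        // Move the turning point closest to where the interaction started.
        if (Math.hypot(fromX - x1, fromY - y1) <= Math.hypot(fromX - x2, fromY - y2)) {
          x1 += dx; y1 += dy;
        } else {
          x2 += dx; y2 += dy;
        }
        return true;
      default:
        return false;
    }
  }
}
```

To keep painter and editor consistent, the painter's preview in TRANSLATING or RESHAPING mode would apply the same rules to a temporary copy of the points.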

getCreationClickCount

The creation mode passed to the ILcdGXYEditor depends on the value returned by its getCreationClickCount method. Controllers may keep track of the number of clicks and compare it to the creation click count.

Common methods in ILcdGXYPainter and ILcdGXYEditor

Both interfaces share a number of methods, which are mainly utility methods.

- setObject and getObject set and return the object the ILcdGXYPainter (respectively ILcdGXYEditor) should be painting (respectively editing).
- addPropertyChangeListener and removePropertyChangeListener enable other classes to be notified of changes in a number of properties of the ILcdGXYPainter or ILcdGXYEditor. A typical example is a map legend implementation which is notified of changes in the color a painter uses to paint an object.
- getDisplayName provides a textual representation of the ILcdGXYPainter or the ILcdGXYEditor.

Snapping explained

Snapping is an interaction process with three actors: an ILcdGXYPainter, an ILcdGXYEditor, and either the TLcdGXYEditController2 or the TLcdGXYNewController2. The objective is to visually edit or create a shape so that part of it is at the exact same location as part of another shape. The shapes do not share that part, so that subsequent editing of either shape does not influence the other.

Snapping methods in ILcdGXYPainter

supportSnap

supportSnap returns whether it is possible to snap to (a part of) the shape that is rendered by this ILcdGXYPainter. If snapping is supported, the paint method should give the user a visual indication whenever a snap target is available.

snapTarget

The snap target is the part of the shape that is made available for snapping. This may change depending on the context passed.

Most implementations return only the part of the shape that is being touched given the ILcdGXYContext passed to this method. For example, the TLcdGXYPointListPainter returns the ILcdPoint of the ILcdPointList that is touched by the mouse pointer.

Snapping methods in ILcdGXYEditor

acceptSnapTarget

The user may not want the shape being edited or created to snap to every target provided by a painter. acceptSnapTarget enables filtering on the objects returned as snap target. Note that the snap target returned by an ILcdGXYPainter is not necessarily an object contained in a model: for example, it might be just one ILcdPoint of an ILcdPointList. As such, it might not be possible, for example, to check the features of the object that contains the snap target.

Use of snapping methods

The snapping methods are called by the TLcdGXYNewController2 and the TLcdGXYEditController2. Both controllers contain an ILcdGXYLayerSubsetList, a list of objects sorted per ILcdGXYLayer, called the snappables. Only objects in the snappables are passed to the ILcdGXYEditor.

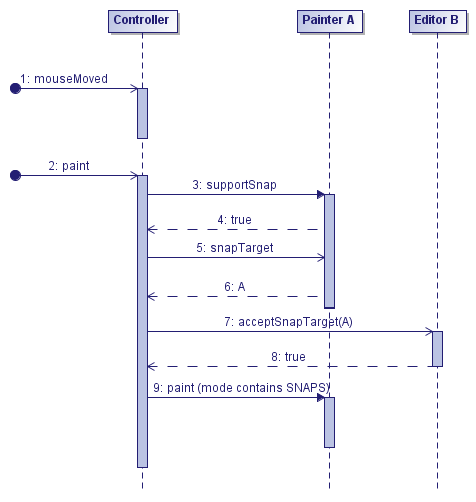

What are the steps in the object snapping process?

Figure 7, “Snapping: sequence of calls when moving the mouse” shows the sequence of method calls in an ILcdGXYView where an object B is in the process of being edited or created and the mouse is moving near an object A. The parties involved are:

- the TLcdGXYNewController2 or TLcdGXYEditController2 active on the view, referred to as Controller. It contains the object A in its snappables.
- the ILcdGXYPainter for the object A, an object beneath the current input point
- the ILcdGXYEditor for the object B, an object which is in the process of being created or edited

When the input point(s) move(s) (as illustrated by the mouseMoved call in Figure 7, “Snapping: sequence of calls when moving the mouse”), the Controller interprets the move(s) and then requests the ILcdGXYView it is active on to repaint itself. At the end of the paint cycle, after all ILcdGXYLayer objects have been rendered, the method paint of Controller is called.

The Controller traverses its snappables and checks:

- if the ILcdGXYPainter supports snapping for that object in that layer
- if the ILcdGXYPainter returns a snap target in the given context

until a snap target, in this case A, is returned. If the snap target is accepted by the editor of object B, the object A is painted with the SNAPS mode, indicating that it is available for snapping.
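The traversal can be summarized in code. In this sketch, SnapPainter and SnapEditor are hypothetical, reduced versions of the snapping methods of ILcdGXYPainter and ILcdGXYEditor (the real methods also receive the graphics, the layer and an ILcdGXYContext). Following the description above, the loop stops at the first returned snap target and then asks the editor of the edited object whether it accepts it.

```java
import java.util.List;

/** Hypothetical, reduced version of the snapping methods of a painter. */
interface SnapPainter {
  boolean supportSnap(Object object);
  Object snapTarget(Object object, double inputX, double inputY); // null if none
}

/** Hypothetical, reduced version of the snapping method of an editor. */
interface SnapEditor {
  boolean acceptSnapTarget(Object target);
}

class SnapLookup {
  /** One snappable: an object together with the painter used for it in its layer. */
  static final class Snappable {
    final Object object;
    final SnapPainter painter;

    Snappable(Object object, SnapPainter painter) {
      this.object = object;
      this.painter = painter;
    }
  }

  /** Returns the snap target to highlight in SNAPS mode, or null when there is none. */
  static Object findSnapTarget(List<Snappable> snappables, SnapEditor editorOfB,
                               double inputX, double inputY) {
    for (Snappable s : snappables) {
      if (!s.painter.supportSnap(s.object)) {
        continue;
      }
      Object target = s.painter.snapTarget(s.object, inputX, inputY);
      if (target != null) {
        // The first target found is offered to the editor of the edited object.
        return editorOfB.acceptSnapTarget(target) ? target : null;
      }
    }
    return null;
  }
}
```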

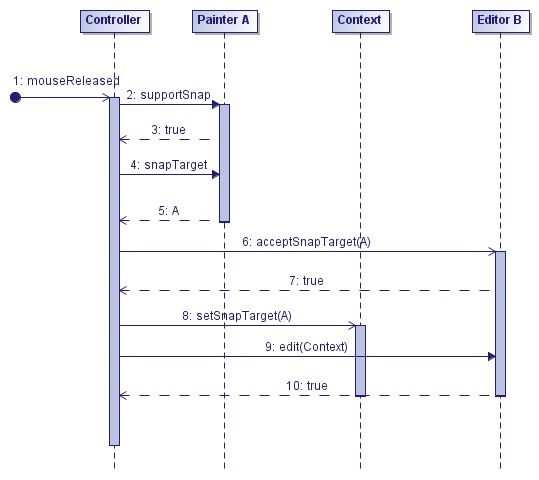

Figure 8, “Snapping: sequence of calls when releasing the mouse” shows the sequence of method calls when object B is edited by snapping to object A. The process is initiated by a mouseReleased call on the Controller. The Controller performs the same checks as above, but when the snap target is accepted by the ILcdGXYEditor of object B, it sets the snap target (and its layer, not shown here) on a Context, which is then passed to the ILcdGXYEditor for editing.

Supporting multiple model references

Two shapes that are at the exact same location do not necessarily have their coordinates expressed in the same model reference. This should be taken into account when snapping to a shape: appropriate transformations should be applied to compute the new coordinates of the point in the snapping shape’s reference. The information required to set up the transformations is contained in the ILcdGXYContext passed to the edit method.

Painting an IHippodrome

This section covers rendering the body of an IHippodrome in a basic state, that is, not being edited or created.

Constructing an ILcdAWTPath with an ILcdGXYPen

Objects to be rendered are expressed in model coordinates, while rendering on the Graphics requires view coordinates. The interface ILcdGXYPen provides basic rendering operations (for example, drawing lines and arcs) using model coordinates, while hiding the complexity of coordinate transformations.

An ILcdAWTPath defines a path in AWT coordinates. It has useful methods for implementing an ILcdGXYPainter. An ILcdAWTPath is capable of:

- rendering itself as a polyline or a polygon on a Graphics
- calculating the enclosing rectangle, in view coordinates
- detecting if it touches a given point, given in view coordinates

Many ILcdGXYPainter implementations construct an ILcdAWTPath using an ILcdGXYPen and then instruct the AWT path to render itself on the Graphics.
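For readers more familiar with plain Java 2D, the three capabilities listed above correspond roughly to what java.awt.geom.Path2D offers for a path in view coordinates. The following sketch is an analogy, not the ILcdAWTPath API: the class AwtPathAnalogy is hypothetical, and "touching" is approximated by testing whether the point falls inside a band around the outline built with BasicStroke.

```java
import java.awt.BasicStroke;
import java.awt.Graphics2D;
import java.awt.Rectangle;
import java.awt.Shape;
import java.awt.geom.Path2D;

/** Java 2D analogy for the three ILcdAWTPath capabilities listed above. */
class AwtPathAnalogy {
  /** A small closed path in view coordinates. */
  static Path2D triangle() {
    Path2D path = new Path2D.Double();
    path.moveTo(10, 10);
    path.lineTo(60, 10);
    path.lineTo(35, 50);
    path.closePath();
    return path;
  }

  /** 1. Render the path as a polygon (filled) or as a polyline (outline). */
  static void render(Path2D path, Graphics2D g, boolean filled) {
    if (filled) {
      g.fill(path);
    } else {
      g.draw(path);
    }
  }

  /** 2. The enclosing rectangle, in view coordinates. */
  static Rectangle viewBounds(Path2D path) {
    return path.getBounds();
  }

  /** 3. Does the outline pass within halfWidth pixels of (x, y)? */
  static boolean touches(Path2D path, double x, double y, float halfWidth) {
    Shape band = new BasicStroke(2 * halfWidth).createStrokedShape(path);
    return band.contains(x, y);
  }
}
```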

An ILcdAWTPath for an IHippodrome

Program: Constructing the ILcdAWTPath for an IHippodrome shows how the ILcdAWTPath of an IHippodrome is constructed. The ILcdAWTPath is cleared using the reset method, and is then constructed in four steps:

- the line between the start-upper and end-upper point is added to the ILcdAWTPath
- the arc around the end point is added to the ILcdAWTPath
- the line between the end-lower and start-lower point is added to the ILcdAWTPath
- the arc around the start point is added to the ILcdAWTPath

Every step checks if the previous step caused a TLcdOutOfBoundsException. If so, the ILcdGXYPen moves to the next point that would not cause a TLcdOutOfBoundsException and resumes constructing the ILcdAWTPath. Note that by using the ILcdGXYPen, adding a line or an arc, expressed in model coordinates, to the ILcdAWTPath is very simple.
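The same four steps can be tried out with plain Java 2D when the hippodrome is already expressed in view coordinates. This sidesteps the model-to-view transformations and the TLcdOutOfBoundsException handling that the ILcdGXYPen takes care of. In this simplified, hypothetical sketch the axis is horizontal and screen y points down; Arc2D angles are in degrees, counterclockwise on screen from the positive x axis, and each arc is appended with connect set to true so that the path remains one closed outline.

```java
import java.awt.geom.Arc2D;
import java.awt.geom.Path2D;

/** Hypothetical: outline of a hippodrome with a horizontal axis, in view coordinates. */
class ViewHippodromeOutline {
  /** Start point (sx, y), end point (ex, y) with sx < ex, arc radius r; screen y points down. */
  static Path2D build(double sx, double ex, double y, double r) {
    Path2D path = new Path2D.Double();
    // Step 1: line from the start-upper point to the end-upper point.
    path.moveTo(sx, y - r);
    path.lineTo(ex, y - r);
    // Step 2: half circle around the end point, from its top (90) via its right side to its bottom.
    path.append(new Arc2D.Double(ex - r, y - r, 2 * r, 2 * r, 90, -180, Arc2D.OPEN), true);
    // Step 3: line from the end-lower point to the start-lower point.
    path.lineTo(sx, y + r);
    // Step 4: half circle around the start point, from its bottom (270) via its left side to its top.
    path.append(new Arc2D.Double(sx - r, y - r, 2 * r, 2 * r, 270, -180, Arc2D.OPEN), true);
    path.closePath();
    return path;
  }
}
```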

Program: Constructing the ILcdAWTPath for an IHippodrome (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private void calculateContourAWTPathSFCT(IHippodrome aHippodrome,
                                         ILcdGXYContext aGXYContext,
                                         ILcdAWTPath aAWTPathSFCT) {
  ILcdGXYPen pen = aGXYContext.getGXYPen();
  ILcdModelXYWorldTransformation mwt = aGXYContext.getModelXYWorldTransformation();
  ILcdGXYViewXYWorldTransformation vwt = aGXYContext.getGXYViewXYWorldTransformation();

  // Reset the current position of the pen.
  pen.resetPosition();
  // Reset the AWT path.
  aAWTPathSFCT.reset();

  // A boolean to check if a part of the hippodrome is not visible in the current world reference.
  // We try to paint as much as possible.
  boolean out_of_bounds = false;

  try {
    // Move the pen to the start-upper point of the hippodrome.
    pen.moveTo(aHippodrome.getContourPoint(IHippodrome.START_UPPER_POINT), mwt, vwt);
    // Append a line to the end-upper point.
    pen.appendLineTo(aHippodrome.getContourPoint(IHippodrome.END_UPPER_POINT),
                     mwt, vwt, aAWTPathSFCT);
  } catch (TLcdOutOfBoundsException ex) {
    out_of_bounds = true;
  }

  try {
    if (out_of_bounds) {
      pen.moveTo(aHippodrome.getContourPoint(IHippodrome.END_UPPER_POINT), mwt, vwt);
      out_of_bounds = false;
    }
    // Append an arc using the end point as center, taking into account the backward
    // azimuth of the hippodrome.
    pen.appendArc(aHippodrome.getEndPoint(), aHippodrome.getWidth(),
                  aHippodrome.getWidth(), 270 - aHippodrome.getEndStartAzimuth(),
                  -aHippodrome.getEndStartAzimuth(), -180.0, mwt, vwt, aAWTPathSFCT);
  } catch (TLcdOutOfBoundsException e) {
    out_of_bounds = true;
  }

  try {
    if (out_of_bounds) {
      pen.moveTo(aHippodrome.getContourPoint(IHippodrome.END_LOWER_POINT), mwt, vwt);
      out_of_bounds = false;
    }
    // Append a line to the start-lower point.
    pen.appendLineTo(aHippodrome.getContourPoint(IHippodrome.START_LOWER_POINT),
                     mwt, vwt, aAWTPathSFCT);
  } catch (TLcdOutOfBoundsException e) {
    out_of_bounds = true;
  }

  try {
    if (out_of_bounds) {
      pen.moveTo(aHippodrome.getContourPoint(IHippodrome.START_LOWER_POINT), mwt, vwt);
    }
    // Append an arc using the start point as center, taking into account the forward
    // azimuth of the hippodrome.
    pen.appendArc(aHippodrome.getStartPoint(), aHippodrome.getWidth(),
                  aHippodrome.getWidth(), 90.0 - aHippodrome.getStartEndAzimuth(),
                  -aHippodrome.getStartEndAzimuth(), -180.0, mwt, vwt, aAWTPathSFCT);
  } catch (TLcdOutOfBoundsException e) {
    // We don't draw the last arc.
  }
}
```

Improving performance: ILcdGeneralPath and ILcdCache

LuciadLightspeed coordinate reference systems shows that transforming model coordinates to view coordinates requires two transformations:

- an ILcdModelXYWorldTransformation to convert model coordinates to world coordinates
- an ILcdGXYViewXYWorldTransformation to convert world coordinates to view coordinates

While the first step can be quite a complex calculation, taking into account an ellipsoid and a projection, the second step consists of a scaling, a rotation and a translation. All these operations can be executed in a small number of additions and multiplications. Since the elements that make up the complexity of the first transformation (the model reference of the object and the world reference of the ILcdGXYView) do not change often, it is advantageous to cache the world coordinates, thus eliminating the costly first step. To this end, two interfaces are available: ILcdGeneralPath to collect the world coordinates and ILcdCache to cache an ILcdGeneralPath.

ILcdGeneralPath is the world equivalent of ILcdAWTPath in the sense that it stores world coordinates. An ILcdGeneralPath is constructed using an ILcdGXYPen, analogous to constructing an ILcdAWTPath. An ILcdAWTPath can be derived from an ILcdGeneralPath using the method appendGeneralPath of ILcdGXYPen.

ILcdCache enables storing key-value pairs for caching purposes. The combination of the key and the stored information should allow you to determine whether the cached value is still valid.
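A minimal sketch of such a cache in plain Java, assuming a map-based store: the key identifies who owns the cached value (a painter, for example), and the stored entry records the world reference it was computed for, so that a later lookup can tell whether it is still valid. SimpleCache and CachedPath are hypothetical names that only mimic the idea behind ILcdCache; world references are represented by arbitrary objects compared with equals.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical key-value cache in the spirit of ILcdCache. */
class SimpleCache {
  private final Map<Object, Object> entries = new HashMap<>();

  Object getCachedObject(Object key) { return entries.get(key); }

  void insertIntoCache(Object key, Object value) { entries.put(key, value); }
}

/** A cached value together with the world reference it is valid for. */
final class CachedPath {
  final Object worldReference;
  final double[] worldCoordinates;

  CachedPath(Object worldReference, double[] worldCoordinates) {
    this.worldReference = worldReference;
    this.worldCoordinates = worldCoordinates;
  }

  boolean isValid(Object currentWorldReference) {
    return worldReference.equals(currentWorldReference);
  }

  /** Returns the cached coordinates for (owner, reference), recomputing them only when needed. */
  static double[] retrieve(SimpleCache cache, Object owner, Object reference,
                           Supplier<double[]> compute) {
    CachedPath cached = (CachedPath) cache.getCachedObject(owner);
    if (cached == null || !cached.isValid(reference)) {
      cached = new CachedPath(reference, compute.get());
      cache.insertIntoCache(owner, cached);
    }
    return cached.worldCoordinates;
  }
}
```

Only a change of world reference, or a new owner, causes the expensive model-to-world computation to run again.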

Program: Retrieving an ILcdAWTPath using an ILcdCache (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private void retrieveAWTPathSFCT(IHippodrome aHippodrome, ILcdGXYContext aGXYContext,
                                 ILcdAWTPath aAWTPathSFCT) {
  // Do we have a cache and may it be used (defined by fPaintCache)?
  if (fPaintCache && aHippodrome instanceof ILcdCache) {
    // Get the general path through the cache.
    ILcdGeneralPath general_path = retrieveGeneralPathThroughCache(aGXYContext, aHippodrome);
    // Convert it into an AWT path.
    fTempAWTPath.reset();
    fTempAWTPath.ensureCapacity(general_path.subPathLength(0));
    // Append the path general_path (in world coordinates) to the path aAWTPathSFCT
    // (in AWT view coordinates), applying the ILcdGXYViewXYWorldTransformation.
    aGXYContext.getGXYPen().appendGeneralPath(general_path,
        aGXYContext.getGXYViewXYWorldTransformation(), aAWTPathSFCT);
  } else {
    // We don't have a cache: compute the AWT path directly.
    calculateContourAWTPathSFCT(aHippodrome, aGXYContext, aAWTPathSFCT);
  }
}
```

Program: Caching an ILcdGeneralPath (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private ILcdGeneralPath retrieveGeneralPathThroughCache(ILcdGXYContext aGXYContext,
                                                        IHippodrome aHippodrome) {
  // Get the cache. The painter itself (this) is used as the cache key.
  ILcdCache cacher = (ILcdCache) aHippodrome;
  HippodromeCache cache = (HippodromeCache) cacher.getCachedObject(this);

  // Make sure we have a path to reuse or to fill.
  TLcdGeneralPath general_path = cache == null ? new TLcdGeneralPath() : cache.fGeneralPath;
  ILcdXYWorldReference current_world_reference = aGXYContext.getGXYView().getXYWorldReference();

  // Is the path valid?
  if (cache == null || !cache.isValid(current_world_reference)) {
    // Cached path not available or not valid: update the path and cache it.
    calculateContourGeneralPath(aHippodrome, aGXYContext, general_path);
    // Trim it to take up as little space as possible.
    general_path.trimToSize();
    // Put it in the cache.
    cache = new HippodromeCache(current_world_reference, general_path);
    cacher.insertIntoCache(this, cache);
  }
  return general_path;
}
```

Program: Retrieving an ILcdAWTPath using an ILcdCache and Program: Caching an ILcdGeneralPath show how an ILcdGeneralPath is often retrieved from the ILcdCache.

Program: Retrieving an ILcdAWTPath using an ILcdCache checks if a valid ILcdGeneralPath is available from the ILcdCache and transforms it into an ILcdAWTPath. Program: Caching an ILcdGeneralPath details how the ILcdCache is kept up to date. The cached entry contains the world reference of the view and the ILcdGeneralPath. This is sufficient to check if the ILcdGeneralPath is valid, since the model reference of the IHippodrome will not change. Note that the painter is used as the key: in another layer, the IHippodrome may be rendered using another ILcdGXYPainter, for which the ILcdGeneralPath may be different (see for example Representations of shapes).

Rendering the ILcdAWTPath

Since ILcdAWTPath supports rendering itself as a polyline or a polygon on a Graphics, it is straightforward to extend the hippodrome painter to fill the hippodrome. Program: Rendering an ILcdAWTPath on a Graphics demonstrates that, once the ILcdAWTPath is constructed, rendering it in the view is simple. The method drawAWTPath offers two additional features:

- A parameter indicates whether the axis or the outline of the IHippodrome should be rendered.
- Depending on whether the IHippodrome should be painted filled or not, a fill style, a line style, or both are applied to the Graphics.
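The same dispatch can be sketched with the standard Java 2D API, using a `java.awt.geom.Path2D` in place of the ILcdAWTPath and `Graphics2D.fill` and `Graphics2D.draw` in place of fillPolygon and drawPolyline. The `PaintMode` enum and the two-color styling are hypothetical simplifications of the sample's paint modes and of its setupGraphicsForFill and setupGraphicsForLine methods.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.geom.Path2D;
import java.awt.image.BufferedImage;

// Hypothetical paint modes mirroring OUTLINED, FILLED and OUTLINED_FILLED.
enum PaintMode { OUTLINED, FILLED, OUTLINED_FILLED }

final class PathRendering {

    static void drawPath(Path2D aPath, Graphics2D aGraphics, PaintMode aMode,
                         boolean aDrawAxis, Color aLineColor, Color aFillColor) {
        if (aDrawAxis) {
            // The axis is an open line: it can only be stroked.
            aGraphics.setColor(aLineColor);
            aGraphics.draw(aPath);
            return;
        }
        switch (aMode) {
            case FILLED:
                aGraphics.setColor(aFillColor);
                aGraphics.fill(aPath);
                break;
            case OUTLINED_FILLED:
                // Fill first, so the outline is painted on top of the fill.
                aGraphics.setColor(aFillColor);
                aGraphics.fill(aPath);
                aGraphics.setColor(aLineColor);
                aGraphics.draw(aPath);
                break;
            case OUTLINED:
            default:
                aGraphics.setColor(aLineColor);
                aGraphics.draw(aPath);
        }
    }
}
```

Filling before stroking in the combined mode keeps the outline visible on top of the fill, which matches the order used in drawAWTPath.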

Program: Rendering an ILcdAWTPath on a Graphics (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private void drawAWTPath(ILcdAWTPath aAWTPath,
                         Graphics aGraphics,
                         int aRenderingMode,
                         ILcdGXYContext aGXYContext,
                         boolean aDrawAxisPath) {
  if (aDrawAxisPath) {
    setupGraphicsForLine(aGraphics, aRenderingMode, aGXYContext);
    aAWTPath.drawPolyline(aGraphics);
  } else {
    int paint_mode = retrievePaintMode(aRenderingMode);
    switch (paint_mode) {
      case FILLED: {
        setupGraphicsForFill(aGraphics, aRenderingMode, aGXYContext);
        aAWTPath.fillPolygon(aGraphics);
        break;
      }
      case OUTLINED_FILLED: {
        setupGraphicsForFill(aGraphics, aRenderingMode, aGXYContext);
        aAWTPath.fillPolygon(aGraphics);
        setupGraphicsForLine(aGraphics, aRenderingMode, aGXYContext);
        aAWTPath.drawPolyline(aGraphics);
        break;
      }
      case OUTLINED:
      default: {
        setupGraphicsForLine(aGraphics, aRenderingMode, aGXYContext);
        aAWTPath.drawPolyline(aGraphics);
      }
    }
  }
}
```

Making sure the IHippodrome is visible in the view

As explained in boundsSFCT, boundsSFCT should be implemented so that the ILcdGXYView can determine whether the object should be rendered. To do so, it should return the view bounds of the object. ILcdAWTPath provides a method calculateAWTBoundsSFCT which does exactly this. Implementing boundsSFCT is now straightforward: construct the ILcdAWTPath, either from a cached ILcdGeneralPath or from scratch, and call calculateAWTBoundsSFCT.

ILcdAWTPath (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public void boundsSFCT(Graphics aGraphics,
                       int aPainterMode,
                       ILcdGXYContext aGXYContext,
                       ILcd2DEditableBounds aBoundsSFCT) throws TLcdNoBoundsException {
  // ...
  calculateContourAWTPathSFCT(fHippodrome, aGXYContext, fTempAWTPath);
  // use the calculated AWT-path to set the AWT-bounds
  fTempAWTPath.calculateAWTBoundsSFCT(fTempAWTBounds);
  aBoundsSFCT.move2D(fTempAWTBounds.x, fTempAWTBounds.y);
  aBoundsSFCT.setWidth(fTempAWTBounds.width);
  aBoundsSFCT.setHeight(fTempAWTBounds.height);
}
```

Creating an IHippodrome

Creating a shape with TLcdGXYNewController2

As described in Creating an IHippodrome, the IHippodrome should be created in three steps: placing the start point, placing the end point, and setting the width.

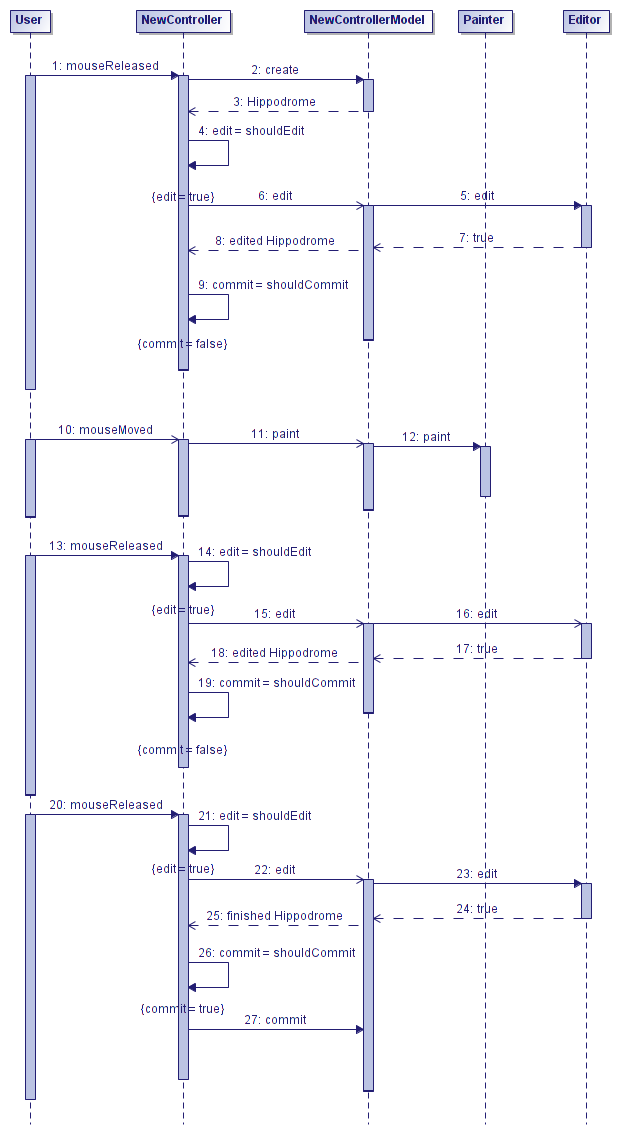

Figure 9, “Creating an object with three clicks” shows the sequence of calls made by the TLcdGXYNewController2 when an IHippodrome is created with three mouse clicks.

The new IHippodrome is constructed by the ALcdGXYNewControllerModel2 set on the TLcdGXYNewController2. Once the object is constructed, its ILcdGXYPainter and ILcdGXYEditor are retrieved from the ILcdGXYLayer to which the IHippodrome will be added. Note that neither the ILcdGXYPainter nor the ILcdGXYEditor is responsible for constructing the IHippodrome: they only act on an IHippodrome constructed by the ALcdGXYNewControllerModel2.

All calls to the ILcdGXYEditor result from mouse clicks, whereas the calls to the ILcdGXYPainter result from the mouse moving over the ILcdGXYView. The edit method of the ILcdGXYEditor returns true to indicate that the object was indeed modified. This is the signal for the TLcdGXYNewController2 to proceed to the next step.

When creation is terminated, the ALcdGXYNewControllerModel2 selects the object in the ILcdGXYLayer where it was created, thus triggering a paint which includes the SELECTED mode.

Combining ILcdGXYPainter and ILcdGXYEditor

Implementing the creation of an IHippodrome requires implementing both the ILcdGXYPainter and the ILcdGXYEditor interfaces. While the ILcdGXYEditor is responsible for creating the object, the ILcdGXYPainter presents a preview of what the ILcdGXYEditor would do when the input is finished. Combining the implementations of both interfaces in one class allows using the following pattern to facilitate the preview:

- create a class that implements both ILcdGXYPainter and ILcdGXYEditor
- implement a private method that contains the logic of creating and editing an IHippodrome object
- create a copy of the object set to the painter/editor
- whenever a preview is needed, edit the copy of the object using the private edit method
- calculate the ILcdAWTPath of the copy
- render the preview using the ILcdAWTPath of the copy
- implement the edit method by delegating to the private edit method

Since the editing logic is contained in one method, the preview presented by the ILcdGXYPainter is guaranteed to be identical to the result of the edit operation.
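The pattern can be reduced to a few lines of plain Java. In this hypothetical sketch, `SimpleHippodrome` stands in for an editable IHippodrome in a plane, `privateEdit` holds the only copy of the creation logic (start point, end point, width), `preview` edits a copy as paint would, and `edit` applies the same logic to the original. None of these names are LuciadLightspeed API, and the width is computed with `java.awt.geom.Line2D.ptSegDist` as a planar simplification.

```java
import java.awt.geom.Line2D;

// Hypothetical planar stand-in for an editable IHippodrome; NaN marks an undefined element.
final class SimpleHippodrome {
    double startX = Double.NaN, startY = Double.NaN;
    double endX = Double.NaN, endY = Double.NaN;
    double width = Double.NaN;

    SimpleHippodrome copy() {
        SimpleHippodrome c = new SimpleHippodrome();
        c.startX = startX;
        c.startY = startY;
        c.endX = endX;
        c.endY = endY;
        c.width = width;
        return c;
    }
}

// Hypothetical combined painter/editor following the pattern above.
final class HippodromePainterEditor {
    final SimpleHippodrome hippodrome = new SimpleHippodrome();

    // The single place that contains the creation logic; returns true if aShape changed.
    private static boolean privateEdit(SimpleHippodrome aShape, double aX, double aY) {
        if (Double.isNaN(aShape.startX)) {
            aShape.startX = aX;       // first step: start point
            aShape.startY = aY;
        } else if (Double.isNaN(aShape.endX)) {
            aShape.endX = aX;         // second step: end point
            aShape.endY = aY;
        } else {
            // third step: the width is the distance from the input location to the axis
            aShape.width = Line2D.ptSegDist(aShape.startX, aShape.startY,
                                            aShape.endX, aShape.endY, aX, aY);
        }
        return true;
    }

    // Painter side: edit a copy, so the original stays untouched.
    SimpleHippodrome preview(double aX, double aY) {
        SimpleHippodrome copy = hippodrome.copy();
        privateEdit(copy, aX, aY);
        return copy;
    }

    // Editor side: apply exactly the same logic to the original.
    boolean edit(double aX, double aY) {
        return privateEdit(hippodrome, aX, aY);
    }
}
```

Because preview and edit share privateEdit, the width shown while the mouse moves after the second click is exactly the width stored when the third click is processed.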

The paint method is structured as shown in Program: Implementation of paint using the private edit method, which results in an ILcdAWTPath for the partially created shape. Once the ILcdAWTPath is constructed, it can be rendered on the view as explained in Rendering the ILcdAWTPath. Note that as long as the mouse has not been clicked twice, only the axis of the IHippodrome is included in the ILcdAWTPath. After the second click, the contour of the IHippodrome is rendered.
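The paint method passes editor modes to privateEdit based on the painter modes it receives. That conversion is a plain bit-flag test. The sketch below reproduces only the order of the tests in Program: Implementation of paint using the private edit method; the constant values and the class name are hypothetical, since the real constants are defined by ILcdGXYPainter and ILcdGXYEditor.

```java
// Hypothetical flag values; the real constants live in ILcdGXYPainter and ILcdGXYEditor.
final class ModeConversion {
    static final int PAINTER_TRANSLATING = 1 << 0;
    static final int PAINTER_RESHAPING = 1 << 1;
    static final int PAINTER_CREATING = 1 << 2;

    static final int EDITOR_TRANSLATED = 1 << 0;
    static final int EDITOR_RESHAPED = 1 << 1;
    static final int EDITOR_CREATING = 1 << 2;

    // Same precedence as the paint listing: translating first, then creating,
    // and reshaped in all other cases.
    static int toEditMode(int aPaintMode) {
        if ((aPaintMode & PAINTER_TRANSLATING) != 0) {
            return EDITOR_TRANSLATED;
        }
        if ((aPaintMode & PAINTER_CREATING) != 0) {
            return EDITOR_CREATING;
        }
        return EDITOR_RESHAPED;
    }
}
```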

The structure of the edit method is illustrated in Program: Implementation of edit using the private edit method.

Program: Implementation of paint using the private edit method (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public void paint(Graphics aGraphics, int aPaintMode, ILcdGXYContext aGXYContext) {
  // ...
  if ((aPaintMode & ILcdGXYPainter.CREATING) != 0) {
    copyHippodromeToTemp();
    // edit this copy to apply the edited changes
    // make sure to pass in ILcdGXYEditor-modes based on the received (as argument) paint-modes
    privateEdit(
        aGraphics,
        fTempHippodrome,
        aGXYContext,
        (aPaintMode & ILcdGXYPainter.TRANSLATING) != 0 ? ILcdGXYEditor.TRANSLATED :
        (aPaintMode & ILcdGXYPainter.CREATING) != 0 ? ILcdGXYEditor.CREATING :
        ILcdGXYEditor.RESHAPED
    );
    IHippodrome hippodrome = fTempHippodrome;
    if (((aPaintMode & ILcdGXYPainter.CREATING) != 0) &&
        (!isNaN(hippodrome.getWidth()))) {
      // Get the AWT path of the contour of the edited shape.
      calculateContourAWTPathSFCT(hippodrome, aGXYContext, fTempAWTPath);
    } else {
      // only the axis needs to be drawn, so only calculate the AWT path of the axis
      calculateAxisAWTPathSFCT(hippodrome, aGXYContext, fTempAWTPath);
      paint_axis = true;
    }
    drawAWTPath(fTempAWTPath, aGraphics, aPaintMode, aGXYContext, paint_axis);
  }
}
```

Program: Implementation of edit using the private edit method (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public boolean edit(Graphics aGraphics,
                    int aEditMode,
                    ILcdGXYContext aGXYContext) {
  return privateEdit(aGraphics, fHippodrome, aGXYContext, aEditMode);
}
```

Interpreting the input position

Program: Interpreting input movement when creating an IHippodrome shows how the position of the input point is used to determine the location of the turning points: first the start point, then the end point. The input point position and the transformations needed to transform it to model coordinates are contained in the ILcdGXYContext passed to the method. How the width of the IHippodrome is set is illustrated in Program: Enabling changing the width in private edit in Implementing paint and edit for editing modes.
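The first step of the transformation chain, from view (AWT) coordinates to world coordinates, can be mimicked with `java.awt.geom.AffineTransform`. This sketch rests on simplifying assumptions: the world-to-view transformation is modeled as a scale plus a translation with a flipped y axis (as a pan and zoom would produce), and the subsequent world-to-model step is left out. In LuciadLightspeed these steps are performed by ILcdGXYViewXYWorldTransformation and ILcdModelXYWorldTransformation, and the latter may fail for locations outside the model's domain. The class and method names are hypothetical.

```java
import java.awt.geom.AffineTransform;
import java.awt.geom.NoninvertibleTransformException;
import java.awt.geom.Point2D;

final class InputToWorld {

    // Hypothetical world-to-view transform: scale by aScale, flip the y axis (view y grows
    // downwards) and place the world origin at view pixel (aOriginX, aOriginY).
    static AffineTransform worldToView(double aScale, double aOriginX, double aOriginY) {
        return new AffineTransform(aScale, 0, 0, -aScale, aOriginX, aOriginY);
    }

    // The view-to-world step: invert the world-to-view transform for an input location.
    static Point2D viewToWorld(int aViewX, int aViewY, AffineTransform aWorldToView)
            throws NoninvertibleTransformException {
        return aWorldToView.inverseTransform(new Point2D.Double(aViewX, aViewY), null);
    }
}
```

Transforming the result back with the world-to-view transform returns the original pixel location, which is a convenient sanity check for such a chain.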

Program: Interpreting input movement when creating an IHippodrome (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private boolean privateEdit(Graphics aGraphics,
                            IHippodrome aHippodrome,
                            ILcdGXYContext aGXYContext,
                            int aEditMode) {
  boolean shape_modified = false;
  // ...
  // retrieve the necessary transformations from the context.
  ILcdModelXYWorldTransformation mwt = aGXYContext.getModelXYWorldTransformation();
  ILcdGXYViewXYWorldTransformation vwt = aGXYContext.getGXYViewXYWorldTransformation();
  if ((aEditMode & ILcdGXYEditor.START_CREATION) != 0 ||
      ((aEditMode & ILcdGXYEditor.CREATING) != 0 &&
       !isNaN(aHippodrome.getStartPoint().getX()) &&
       isNaN(aHippodrome.getEndPoint().getX()))) {
    // move the temp. point to the input point coordinates.
    fTempAWTPoint.move(aGXYContext.getX(), aGXYContext.getY());
    // transform input point-position to model-coordinates
    vwt.viewAWTPoint2worldSFCT(fTempAWTPoint, fTempXYWorldPoint);
    mwt.worldPoint2modelSFCT(fTempXYWorldPoint, model_point);
    if ((aEditMode & ILcdGXYEditor.START_CREATION) != 0) {
      // we just started creating aHippodrome,
      // so place the start point of aHippodrome on the input location
      aHippodrome.moveReferencePoint(model_point, IHippodrome.START_POINT);
    } else {
      // we're busy creating the hippodrome and the start point has already been placed,
      // so place the end point of aHippodrome on the input location
      aHippodrome.moveReferencePoint(model_point, IHippodrome.END_POINT);
    }
    shape_modified = true;
  }
  // ...
  return shape_modified;
}
```

Adapting the view bounds

The view bounds need to be adapted while creating the shape. As long as only the start point is defined, the view bounds should contain at least the axis from the start point to the current input point. Once both turning points are defined, the position of the input point is interpreted as an indication of the width. Program: View bounds when creating an IHippodrome illustrates how this is taken into account when computing the bounds while creating an IHippodrome:

- an ILcdAWTPath is created based on the axis or the contour, depending on the number of input points
- the bounds of the ILcdAWTPath are computed
- the computed bounds are copied to the bounds passed to the method
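The decision made by boundsSFCT during creation depends only on which defining elements are still undefined (NaN). The sketch below reproduces that decision for a hippodrome that is a true stadium in view coordinates, an assumption that ignores projection distortion. In that special case the contour consists of all points within the width of the axis, so its bounds are the axis bounds enlarged by the width on every side. The sample instead measures the computed ILcdAWTPath, which remains correct when the projection distorts the shape. Class and parameter names are hypothetical.

```java
import java.awt.geom.Line2D;
import java.awt.geom.Rectangle2D;

final class CreationBounds {

    // View bounds of what is painted while creating a planar hippodrome. The start point is
    // defined; the end point and the width may still be NaN.
    static Rectangle2D bounds(double aStartX, double aStartY,
                              double aEndX, double aEndY, double aWidth,
                              double aInputX, double aInputY) {
        if (Double.isNaN(aEndX)) {
            // Second step: only the axis from the start point to the input point is painted.
            return enlargedBox(aStartX, aStartY, aInputX, aInputY, 0);
        }
        double width = aWidth;
        if (Double.isNaN(width)) {
            // Third step: the input point determines a candidate width (distance to the axis).
            width = Line2D.ptSegDist(aStartX, aStartY, aEndX, aEndY, aInputX, aInputY);
        }
        // The contour lies within 'width' of the axis, so enlarge the axis box by it.
        return enlargedBox(aStartX, aStartY, aEndX, aEndY, width);
    }

    private static Rectangle2D enlargedBox(double aX1, double aY1, double aX2, double aY2,
                                           double aMargin) {
        double minX = Math.min(aX1, aX2);
        double minY = Math.min(aY1, aY2);
        return new Rectangle2D.Double(minX - aMargin, minY - aMargin,
                                      Math.abs(aX2 - aX1) + 2 * aMargin,
                                      Math.abs(aY2 - aY1) + 2 * aMargin);
    }
}
```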

Program: View bounds when creating an IHippodrome (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public void boundsSFCT(Graphics aGraphics,
                       int aPainterMode,
                       ILcdGXYContext aGXYContext,
                       ILcd2DEditableBounds aBoundsSFCT) throws TLcdNoBoundsException {
  // ...
  if ((aPainterMode & ILcdGXYPainter.CREATING) != 0) {
    // when creating a hippodrome, the bounds are different depending on how far
    // you are in the creation-process.
    if (isNaN(fHippodrome.getEndPoint().getX())) {
      // start point is set, now the endpoint needs to be set; in the meantime a line is
      // drawn between the start point and the current input point-location, therefore calculating
      // the bounds of this drawn line.
      calculateAxisAWTPathSFCT(fHippodrome, aGXYContext, fTempAWTPath);
    } else if (isNaN(fHippodrome.getWidth())) {
      // start- and endpoint are set, now the width of the new hippodrome is being set;
      // make a clone of the set hippodrome, as the set hippodrome isn't yet the complete
      // (resulting) hippodrome
      copyHippodromeToTemp();
      boolean hippodrome_width_changed = privateEdit(
          aGraphics,
          fTempHippodrome,
          aGXYContext,
          ILcdGXYEditor.CREATING);
      if (hippodrome_width_changed) {
        calculateContourAWTPathSFCT(fTempHippodrome, aGXYContext, fTempAWTPath);
      } else {
        // the hippodrome could not be adapted, probably because
        // the current location of the input point could not be transformed to model-coordinates.
        // We just calculate the bounds of the axis
        calculateAxisAWTPathSFCT(fTempHippodrome, aGXYContext, fTempAWTPath);
      }
    }
  }
  // use the calculated AWT-path to set the AWT-bounds
  fTempAWTPath.calculateAWTBoundsSFCT(fTempAWTBounds);
  aBoundsSFCT.move2D(fTempAWTBounds.x, fTempAWTBounds.y);
  aBoundsSFCT.setWidth(fTempAWTBounds.width);
  aBoundsSFCT.setHeight(fTempAWTBounds.height);
}
```

Editing an IHippodrome

Deciding on the editing modes

As described in Editing an IHippodrome, it should be possible to:

- move the hippodrome as a whole
- move one of the two turning points
- change the width of the hippodrome

The interface ILcdGXYEditor provides two editing modes, TRANSLATED and RESHAPED, while the requirements call for three operations. To fit the requirements, the IHippodrome ILcdGXYEditor is designed so that the TRANSLATED mode covers the first two operations, while the last one maps to the RESHAPED mode. To distinguish between the first two operations, the ILcdGXYEditor checks which part of the IHippodrome is touched by the input points: when one of the turning points is touched, that point is moved. Otherwise, the shape as a whole is moved. This requires a method more specific than isTouched, one that indicates which part of the shape is touched.

Moving the shape as a whole can only be implemented in terms of the defining elements of the shape. Thus, moving the shape as a whole over a distance means moving the turning points over that distance. This does not imply that the point at which the input point touched the IHippodrome moves over the same distance. As a result, the IHippodrome may move relative to the mouse pointer.
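The mapping of the three editing operations onto the two editor modes can be summarized as a small dispatch on the edit mode and the touched part. In this hypothetical planar sketch the enums and methods are illustrative names, not LuciadLightspeed API. Following the text, any translation that does not start on a turning point moves the whole shape, and moving the whole shape only moves the two turning points over the same model displacement while the width stays unchanged.

```java
// Hypothetical editor modes, touched parts and resulting actions.
enum EditMode { TRANSLATED, RESHAPED }

enum TouchedPart { NOT_TOUCHED, START_POINT, END_POINT, CONTOUR, INNER }

enum EditAction { NONE, MOVE_START_POINT, MOVE_END_POINT, MOVE_WHOLE_SHAPE, CHANGE_WIDTH }

final class EditDispatch {

    static EditAction action(EditMode aMode, TouchedPart aPart) {
        if (aMode == EditMode.TRANSLATED) {
            if (aPart == TouchedPart.START_POINT) {
                return EditAction.MOVE_START_POINT;
            }
            if (aPart == TouchedPart.END_POINT) {
                return EditAction.MOVE_END_POINT;
            }
            // Any other case moves the shape as a whole (including a moved selection).
            return EditAction.MOVE_WHOLE_SHAPE;
        }
        // RESHAPED: only a touched contour changes the width.
        return aPart == TouchedPart.CONTOUR ? EditAction.CHANGE_WIDTH : EditAction.NONE;
    }

    // Moving the whole shape: both turning points ({x, y} in model coordinates) move over
    // the same displacement; the width is not touched.
    static void translate(double[] aStart, double[] aEnd, double aDeltaX, double aDeltaY) {
        aStart[0] += aDeltaX;
        aStart[1] += aDeltaY;
        aEnd[0] += aDeltaX;
        aEnd[1] += aDeltaY;
    }
}
```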

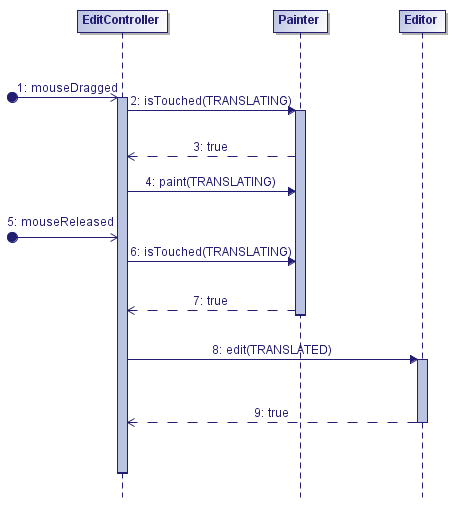

Editing a shape with TLcdGXYEditController2

Figure 10, “Editing an object with TLcdGXYEditController2” shows the typical sequence of calls to the ILcdGXYPainter and the ILcdGXYEditor when an IHippodrome is translated using the mouse. As long as the mouse is being dragged, the ILcdGXYPainter paints the IHippodrome in TRANSLATING mode. As soon as the mouse is released, the ILcdGXYEditor adapts the IHippodrome, and its edit method returns true to confirm that the IHippodrome has been adapted.

Every call to the ILcdGXYPainter and to the ILcdGXYEditor is preceded by a check on whether the IHippodrome is touched by the mouse pointer. Note that the controller may still decide to call paint or edit operations, regardless of the outcome of the check. An example of an edit operation on an object that is not touched is when the user moves the currently selected objects: in that case the controller only requires one of the selected objects to be touched.

Checking if an object is touched

The implementation of isTouched is crucial for editing since every interaction with the ILcdGXYPainter and the ILcdGXYEditor starts with one or more calls to this method.

Whether a shape is touched while it is being edited cannot be checked against the current input location: the ILcdGXYPainter renders a preview of what the result of the editing operation would be, while the object itself has not been edited yet. The implementation of isTouched therefore checks whether the object was touched at the location where the move of the input point(s) started. This requires the original location of the input points. The ILcdGXYContext passed to isTouched contains the current location of an input point (getX() and getY()) and its displacement (getDeltaX() and getDeltaY()), from which the starting location can be derived. Program: Checking if a turning point is touched by the input point demonstrates how to check whether one of the turning points of the hippodrome is touched by the input point while editing.

Checking the input point location at the start of the movement also imposes a requirement on the preview presented by the ILcdGXYPainter. For an intuitive user interaction, the paint method of the ILcdGXYPainter should be implemented such that the input pointer keeps touching the previewed shape while the input point(s) move. Otherwise, isTouched could return true without any visual confirmation of that status.

Program: Checking if the IHippodrome is touched illustrates how the isTouched method is implemented in the IHippodrome ILcdGXYPainter. It calls a private method that returns which part of the IHippodrome, if any, was touched by the input point. That method, shown in Program: Checking what part of the IHippodrome is touched by the input point, checks each part of the representation of the IHippodrome in turn: the turning points if handles are requested, then the arcs and the lines if the body is requested. The result may depend on the way the IHippodrome is rendered: if the IHippodrome is rendered filled, its interior is also taken into account.

Finally, Program: Checking if a turning point is touched by the input point demonstrates how to check whether one of the turning points of the IHippodrome is touched by the input point. Note that the coordinates of the position that is checked are aGXYContext.getX()-aGXYContext.getDeltaX() and aGXYContext.getY()-aGXYContext.getDeltaY(), which is the location where the drag movement started.
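The order of the checks and the use of the drag start location can be tried out on a planar hippodrome given in view pixels. This is a hypothetical sketch, not the sample's code: the hit tests are reduced to distance comparisons against a pixel sensitivity, the arcs and lines are tested together as one contour (all points at the width's distance from the axis), and `sensitivity` only mirrors the fallback logic of getSensitivity.

```java
import java.awt.geom.Line2D;

// Hypothetical touched parts, simplified from the sample's START_ARC, UPPER_LINE, ... constants.
enum HitPart { NOT_TOUCHED, START_POINT, END_POINT, CONTOUR, INNER }

final class HippodromeHitTest {

    // Mirrors getSensitivity: a negative context sensitivity means "use the default".
    static int sensitivity(int aContextSensitivity, int aDefault) {
        return aContextSensitivity >= 0 ? aContextSensitivity : aDefault;
    }

    // Classifies the location where the drag started, (aX - aDeltaX, aY - aDeltaY).
    // Handles are checked before the body, as in retrieveTouchedStatus.
    static HitPart touched(double aStartX, double aStartY, double aEndX, double aEndY,
                           double aWidth, int aX, int aY, int aDeltaX, int aDeltaY,
                           boolean aCheckHandles, boolean aCheckBody, boolean aFilled,
                           int aSensitivity) {
        double px = aX - aDeltaX;
        double py = aY - aDeltaY;
        if (aCheckHandles) {
            if (Math.hypot(px - aStartX, py - aStartY) <= aSensitivity) {
                return HitPart.START_POINT;
            }
            if (Math.hypot(px - aEndX, py - aEndY) <= aSensitivity) {
                return HitPart.END_POINT;
            }
        }
        if (aCheckBody) {
            double distanceToAxis = Line2D.ptSegDist(aStartX, aStartY, aEndX, aEndY, px, py);
            // Arcs and lines together: the points at distance aWidth from the axis.
            if (Math.abs(distanceToAxis - aWidth) <= aSensitivity) {
                return HitPart.CONTOUR;
            }
            // The interior only counts when the shape is painted filled.
            if (aFilled && distanceToAxis < aWidth) {
                return HitPart.INNER;
            }
        }
        return HitPart.NOT_TOUCHED;
    }
}
```

For a drag that has moved the pointer by (10, 5) to (60, 25), the sketch tests (50, 20); testing (60, 25) instead could report that the shape is not touched, although the drag started on its contour.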

Program: Checking if the IHippodrome is touched (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public boolean isTouched(Graphics aGraphics, int aPainterMode, ILcdGXYContext aGXYContext) {
  // retrieve which part of the hippodrome is touched
  int touchedStatus = retrieveTouchedStatus(aGXYContext, aPainterMode, fHippodrome);
  // return true if a part is touched
  return (touchedStatus != NOT_TOUCHED);
}
```

Program: Checking what part of the IHippodrome is touched by the input point (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private int retrieveTouchedStatus(ILcdGXYContext aGXYContext,
                                  int aRenderingMode,
                                  IHippodrome aHippodrome) {
  boolean checkHandles = (aRenderingMode & ILcdGXYPainter.HANDLES) != 0;
  boolean checkBody = (aRenderingMode & ILcdGXYPainter.BODY) != 0;
  if (checkHandles) {
    if (isPointTouched(aGXYContext, aHippodrome, START_POINT)) {
      return START_POINT;
    } else if (isPointTouched(aGXYContext, aHippodrome, END_POINT)) {
      return END_POINT;
    }
  }
  if (checkBody) {
    if (isArcTouched(aGXYContext, aHippodrome, START_ARC)) {
      return START_ARC;
    } else if (isArcTouched(aGXYContext, aHippodrome, END_ARC)) {
      return END_ARC;
    } else if (isLineTouched(aGXYContext, aHippodrome, UPPER_LINE)) {
      return UPPER_LINE;
    } else if (isLineTouched(aGXYContext, aHippodrome, LOWER_LINE)) {
      return LOWER_LINE;
    } else if ((getMode() == FILLED || getMode() == OUTLINED_FILLED) ||
               ((aRenderingMode & ILcdGXYPainter.SELECTED) != 0 &&
                (getSelectionMode() == FILLED || getSelectionMode() == OUTLINED_FILLED))) {
      // retrieve (or calculate) an AWTPath for aHippodrome to be able to determine if the original
      // input point-location lies within aHippodrome
      retrieveAWTPathSFCT(aHippodrome, aGXYContext, fTempAWTPath);
      // take into account the original input point-location instead of the current
      // ==> aGXYContext.getX() - aGXYContext.getDeltaX() as argument for polygonContains()
      if (fTempAWTPath.polygonContains(aGXYContext.getX() - aGXYContext.getDeltaX(),
                                       aGXYContext.getY() - aGXYContext.getDeltaY())) {
        return INNER;
      }
    }
  }
  return NOT_TOUCHED;
}
```

Program: Checking if a turning point is touched by the input point (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private boolean isPointTouched(ILcdGXYContext aGXYContext,
                               IHippodrome aHippodrome,
                               int aHippodromePoint) {
  ILcdGXYPen pen = aGXYContext.getGXYPen();
  ILcdModelXYWorldTransformation mwt = aGXYContext.getModelXYWorldTransformation();
  ILcdGXYViewXYWorldTransformation vwt = aGXYContext.getGXYViewXYWorldTransformation();
  ILcdPoint point_to_check;
  if (aHippodromePoint == START_POINT) {
    point_to_check = aHippodrome.getStartPoint();
  } else if (aHippodromePoint == END_POINT) {
    point_to_check = aHippodrome.getEndPoint();
  } else {
    return false;
  }
  return pen.isTouched(
      point_to_check,
      aGXYContext.getX() - aGXYContext.getDeltaX(),
      aGXYContext.getY() - aGXYContext.getDeltaY(),
      getSensitivity(aGXYContext, pen.getHotPointSize()),
      mwt,
      vwt);
}

private int getSensitivity(ILcdGXYContext aGXYContext, int aDefaultValue) {
  int sensitivity = aGXYContext.getSensitivity();
  if (sensitivity >= 0) {
    return sensitivity;
  }
  return aDefaultValue;
}
```

Implementing paint and edit for editing modes

The paint and edit methods for the editing modes are exactly the same as their equivalents for the creation modes. The code in Program: Implementation of paint using the private edit method and Program: Implementation of edit using the private edit method can be reused.

Program: Enabling changing the width in private edit shows how privateEdit is extended to enable changing the width of the IHippodrome, based on the modes passed. Note that this code is also called at the end of the creation process.

The implementation for changing the width of the IHippodrome is shown in Program: Changing the width of an IHippodrome:

- the current position of the input point is retrieved
- the distance from that position to the axis is calculated
- the result of the calculation is set as the new width of the IHippodrome

Since the distance to the axis is calculated differently on a Cartesian plane than on an ellipsoid, the model reference of the IHippodrome has to be retrieved, here through the layer available from the ILcdGXYContext.
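In the Cartesian case, the width is the distance from the input point to the closest point of the axis segment. The sketch below computes it in the spirit of, but not identical to, TLcdCartesian.closestPointOnLineSegment: it projects the point onto the line through the turning points and clamps the projection parameter to [0, 1], so that points beyond the turning points are measured to the nearest turning point, which is what gives the half circles. The class and method names are hypothetical; the geodetic case needs an ellipsoidal computation such as TLcdEllipsoidUtil.closestPointOnGeodesic and is not covered here.

```java
final class AxisDistance {

    // Returns the distance from (aPx, aPy) to the segment (aX1, aY1)-(aX2, aY2) and stores
    // the closest point of the segment in aClosestSFCT[0] and aClosestSFCT[1].
    static double closestPointOnSegment(double aX1, double aY1, double aX2, double aY2,
                                        double aPx, double aPy, double[] aClosestSFCT) {
        double dx = aX2 - aX1;
        double dy = aY2 - aY1;
        double lengthSquared = dx * dx + dy * dy;
        double t = 0.0;
        if (lengthSquared > 0.0) {
            // Parameter of the orthogonal projection, clamped to the segment.
            t = ((aPx - aX1) * dx + (aPy - aY1) * dy) / lengthSquared;
            t = Math.max(0.0, Math.min(1.0, t));
        }
        aClosestSFCT[0] = aX1 + t * dx;
        aClosestSFCT[1] = aY1 + t * dy;
        return Math.hypot(aPx - aClosestSFCT[0], aPy - aClosestSFCT[1]);
    }
}
```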

Program: Enabling changing the width in private edit (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private boolean privateEdit(Graphics aGraphics,
                            IHippodrome aHippodrome,
                            ILcdGXYContext aGXYContext,
                            int aEditMode) {
  boolean shape_modified = false;
  // ...
  if ((aEditMode & ILcdGXYEditor.RESHAPED) != 0 && isContourTouched(aGXYContext, aHippodrome) ||
      (aEditMode & ILcdGXYEditor.CREATING) != 0 && !isNaN(aHippodrome.getEndPoint().getX())) {
    // when these modes are received, the user is busy changing or
    // has just changed the width of aHippodrome
    shape_modified = changeWidth(aGXYContext, aHippodrome);
  }
  // ...
  return shape_modified;
}
```

Program: Changing the width of an IHippodrome (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private boolean changeWidth(ILcdGXYContext aGXYContext,
                            IHippodrome aHippodrome) throws TLcdOutOfBoundsException {
  boolean shape_modified;
  ILcd3DEditablePoint model_point = aHippodrome.getStartPoint().cloneAs3DEditablePoint();
  // move a temp. viewpoint to the current location of the input point
  fTempAWTPoint.move(aGXYContext.getX(), aGXYContext.getY());
  ILcdModelXYWorldTransformation mwt = aGXYContext.getModelXYWorldTransformation();
  ILcdGXYViewXYWorldTransformation vwt = aGXYContext.getGXYViewXYWorldTransformation();
  // transform input point-position to model-coordinates
  vwt.viewAWTPoint2worldSFCT(fTempAWTPoint, fTempXYWorldPoint);
  mwt.worldPoint2modelSFCT(fTempXYWorldPoint, model_point);
  // calculate distance (in the model) from the input point-location to the axis of aHippodrome
  double distance_to_axis;
  ILcdModelReference model_reference = aGXYContext.getGXYLayer().getModel().getModelReference();
  if (model_reference instanceof ILcdGeodeticReference) {
    distance_to_axis = TLcdEllipsoidUtil.closestPointOnGeodesic(
        aHippodrome.getStartPoint(), aHippodrome.getEndPoint(), model_point,
        ((ILcdGeodeticReference) model_reference).getGeodeticDatum().getEllipsoid(),
        1e-10, 1.0, new TLcdLonLatPoint());
  } else {
    // we assume we are computing in a plane
    distance_to_axis = TLcdCartesian.closestPointOnLineSegment(
        aHippodrome.getStartPoint(), aHippodrome.getEndPoint(), model_point, new TLcdXYPoint());
  }
  // set this distance as new width of aHippodrome
  aHippodrome.setWidth(distance_to_axis);
  shape_modified = true;
  return shape_modified;
}
```

Taking care of view bounds

As explained in ILcdGXYPainter methods, the ILcdGXYPainter should take changes to the object into account in boundsSFCT, to ensure that the edited object is rendered in the ILcdGXYView. Program: View bounds of an IHippodrome while editing illustrates how privateEdit facilitates implementing boundsSFCT: the edit is applied to a copy, and the bounds of the contour of that copy are returned.

Program: View bounds of an IHippodrome while editing (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public void boundsSFCT(Graphics aGraphics,
                       int aPainterMode,
                       ILcdGXYContext aGXYContext,
                       ILcd2DEditableBounds aBoundsSFCT) throws TLcdNoBoundsException {
  // ...
  if (((aPainterMode & ILcdGXYPainter.TRANSLATING) != 0) ||
      ((aPainterMode & ILcdGXYPainter.RESHAPING) != 0)) {
    // when changing the size or location of the original hippodrome, make a clone of it,
    // so the original hippodrome remains unchanged until the edit is completed
    copyHippodromeToTemp();
    // apply the editing changes, hereby transforming the painter-modes to editor-modes
    privateEdit(
        aGraphics,
        fTempHippodrome,
        aGXYContext,
        (aPainterMode & ILcdGXYPainter.TRANSLATING) != 0 ? ILcdGXYEditor.TRANSLATED
                                                          : ILcdGXYEditor.RESHAPED);
    // calculate the AWT-path
    calculateContourAWTPathSFCT(fTempHippodrome, aGXYContext, fTempAWTPath);
  }
  // use the calculated AWT-path to set the AWT-bounds
  fTempAWTPath.calculateAWTBoundsSFCT(fTempAWTBounds);
  aBoundsSFCT.move2D(fTempAWTBounds.x, fTempAWTBounds.y);
  aBoundsSFCT.setWidth(fTempAWTBounds.width);
  aBoundsSFCT.setHeight(fTempAWTBounds.height);
}
```

Snapping implemented

Specifications for snapping

The ILcdGXYPainter and ILcdGXYEditor for IHippodrome should support snapping in two ways:

- the turning points of the IHippodrome should snap onto any ILcdPoint, and
- any other shape should be able to snap to either of the turning points.

snapTarget, snapping to an IHippodrome

Since snapping to either turning point should be supported, snapTarget should return the turning point that is touched by an input point. If neither point is touched, it should return null, which indicates that snapping to this IHippodrome is not possible in the given ILcdGXYContext. Program: Presenting the turning points as snap target illustrates how reusing the methods that check which part of the IHippodrome is touched simplifies retrieving the snap target.

Program: Presenting the turning points as snap target (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public Object snapTarget(Graphics aGraphics,
                         ILcdGXYContext aGXYContext) {
  Object snap_target = null;
  // determine if the start point is touched,
  if (isPointTouched(aGXYContext, fHippodrome, START_POINT)) {
    snap_target = fHippodrome.getStartPoint();
  }
  // or the endpoint
  else if (isPointTouched(aGXYContext, fHippodrome, END_POINT)) {
    snap_target = fHippodrome.getEndPoint();
  }
  return snap_target;
}
```

acceptSnapTarget, snapping of an IHippodrome

The ILcdGXYEditor may decide to reject snap targets. In this case study (see Program: Accepting a point as snap target), any object that is not an ILcdPoint is rejected, as are the turning points of the IHippodrome itself. acceptSnapTarget also checks whether snapping to the snap target is possible and required.

If the snap target is an ILcdPoint defined in another reference, at a location that cannot be expressed in coordinates of the model reference in which the IHippodrome is defined, snapping is impossible.

If the outline of the IHippodrome was touched, and not one of the turning points, snapping is not required. This means that if a user moves the IHippodrome as a whole by dragging its outline, neither of the turning points will snap to another point, even if they touch it.
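The conditions checked by acceptSnapTarget can be condensed into a single boolean decision. In the hypothetical sketch below, precomputed flags replace the layer, model-reference and transformation checks of the listing, so that the decision logic can be read and tested on its own; the class and parameter names are illustrative only.

```java
final class SnapDecision {

    static boolean acceptSnapTarget(boolean aTargetIsPoint,
                                    boolean aTargetIsOwnTurningPoint,
                                    boolean aSameLayerOrReference,
                                    boolean aTargetTransformable,
                                    boolean aEndPointUndefined,
                                    boolean aWidthDefined,
                                    boolean aHandleTouched) {
        // Only points are accepted, and never one of the hippodrome's own turning points.
        if (!aTargetIsPoint || aTargetIsOwnTurningPoint) {
            return false;
        }
        // The target location must be expressible in the hippodrome's model reference.
        boolean reachable = aSameLayerOrReference || aTargetTransformable;
        // Snapping is only required while placing a turning point during creation,
        // or while dragging a turning point (handle) of an existing hippodrome.
        boolean creating = aEndPointUndefined;
        boolean editing = aWidthDefined && aHandleTouched;
        return reachable && (creating || editing);
    }
}
```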

Program: Accepting a point as snap target (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public boolean acceptSnapTarget(Graphics aGraphics,
                                ILcdGXYContext aGXYContext) {
  Object snap_target = aGXYContext.getSnapTarget();
  ILcdGXYLayer hippodrome_layer = aGXYContext.getGXYLayer();
  ILcdGXYLayer snap_target_layer = aGXYContext.getSnapTargetLayer();
  // we only snap to points
  if (!(snap_target instanceof ILcdPoint)) {
    return false;
  }
  // we do not snap to ourselves.
  if (snap_target == fHippodrome.getStartPoint() || snap_target == fHippodrome.getEndPoint()) {
    return false;
  }
  ILcdPoint snap_point = (ILcdPoint) snap_target;
  // are the snap target and the hippodrome in the same layer?
  boolean same_layer = (snap_target_layer == hippodrome_layer);
  // or do they have the same model reference?
  ILcdModel snap_target_model = snap_target_layer.getModel();
  ILcdModel hippodrome_model = hippodrome_layer.getModel();
  boolean same_model_ref = same_layer ||
      hippodrome_model.getModelReference().equals(snap_target_model.getModelReference());
  boolean transformation_supported = false;
  if (!same_model_ref && (fModelReference2ModelReference != null)) {
    fModelReference2ModelReference.setSourceReference(snap_target_model.getModelReference());
    fModelReference2ModelReference.setDestinationReference(hippodrome_model.getModelReference());
    try {
      // retrieve the location of the snap point in the hippodrome's reference.
      fModelReference2ModelReference.sourcePoint2destinationSFCT(snap_point, fTempModelPoint);
      // no exception occurred, so compatible references
      transformation_supported = true;
    } catch (TLcdOutOfBoundsException ex) {
      // different model reference and the transformation does not support transforming
      // from one to the other, so we reject the snap target.
      return false;
    }
  }
  // was one of the handles of the hippodrome touched by the input point?
  int touched_status = retrieveTouchedStatus(aGXYContext, ILcdGXYPainter.HANDLES, fHippodrome);
  // are we creating the start or end point?
  boolean creating = isNaN(fHippodrome.getEndPoint().getX());
  // or are we editing the start or end point of an existing hippodrome?
  boolean editing = !isNaN(fHippodrome.getWidth()) && touched_status != NOT_TOUCHED;
  // only accept the target if the transformation allows transforming to it,
  // and if we are dragging or creating one of the points.
  return (same_layer || same_model_ref || transformation_supported) &&
         (creating || editing);
}
```

paint, highlighting the snap target

When called with the SNAPS mode, the method paint provides a visual indication that a snap target is available. Program: Highlighting a snap target illustrates that highlighting the snap target with an icon suffices, since the snap target returned for an IHippodrome is always an ILcdPoint.

Program: Highlighting a snap target (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
@Override
public void paint(Graphics aGraphics, int aPaintMode, ILcdGXYContext aGXYContext) {
  // ...
  if ((aPaintMode & ILcdGXYPainter.SNAPS) != 0) {
    // when requested to draw a snap target of the hippodrome, we first need to know which
    // point was returned as snap target, so we can highlight it with the snap icon.
    ILcdPoint snap_target = (ILcdPoint) snapTarget(aGraphics, aGXYContext);
    if (snap_target != null) {
      try {
        pen.moveTo(snap_target, mwt, vwt);
        int x = pen.getX();
        int y = pen.getY();
        fSnapIcon.paintIcon(null,
                            aGraphics,
                            x - (fSnapIcon.getIconWidth() / 2),
                            y - (fSnapIcon.getIconHeight() / 2));
      } catch (TLcdOutOfBoundsException e) {
        // we don't draw the snap target since it is not visible in this world reference.
        // this will actually never happen.
      }
    }
  }
}
```

edit, snapping to a snap target

The method edit should be implemented so that if a snap target is present in the ILcdGXYContext and if it is accepted, the snap operation is executed: one of the turning points should be moved to the snap target.

Program: Snapping in the private edit method shows how the private edit method, which contains the editing logic, is extended to support snapping of the IHippodrome to an ILcdPoint.

Program: Snapping to a snap target demonstrates how the snapping is implemented, taking into account that the snap target may be defined in another reference.
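Program: Snapping in the private edit method elides what happens when linkToSnapTarget returns false. A plausible reading, stated here as an assumption, is that the touched turning point then follows the input point as usual. The hypothetical sketch below captures that "snap if possible, otherwise follow the input" rule; the transformation into the hippodrome's reference is modeled as a function that returns an empty `Optional` when the target cannot be expressed there, mirroring the TLcdOutOfBoundsException case in linkToSnapTarget.

```java
import java.util.Optional;
import java.util.function.Function;

final class SnapOrFollow {

    // Returns the new location of the touched turning point: the snap target expressed in the
    // hippodrome's reference when that is possible, otherwise the input location (assumed fallback).
    static double[] newLocation(double[] aInputLocation,
                                double[] aSnapTarget,
                                Function<double[], Optional<double[]>> aToHippodromeReference) {
        if (aSnapTarget != null) {
            Optional<double[]> snapped = aToHippodromeReference.apply(aSnapTarget);
            if (snapped.isPresent()) {
                return snapped.get();
            }
        }
        return aInputLocation;
    }
}
```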

Program: Snapping in the private edit method (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private boolean privateEdit(Graphics aGraphics,
                            IHippodrome aHippodrome,
                            ILcdGXYContext aGXYContext,
                            int aEditMode) {
  boolean shape_modified = false;
  // ...
  if ((aEditMode & ILcdGXYEditor.TRANSLATED) != 0 &&
      (aGXYContext.getDeltaX() != 0 || aGXYContext.getDeltaY() != 0)) {
    // We were/are translating the shape (depending on whether it was called from paint() or edit()).
    // apply different changes to aHippodrome based on input positions and transformations.
    // determine which part has been changed
    switch (retrieveTouchedStatus(aGXYContext, convertToRenderingMode(aEditMode), aHippodrome)) {
      case START_POINT:
        // the location of the start point is (being) changed,
        // check if it can be snapped to a snap-target
        if (!(shape_modified = linkToSnapTarget(aHippodrome, aGraphics,
                                                aEditMode, aGXYContext))) {
          // ...
        }
        break;
      case END_POINT:
        // the location of the endpoint is (being) changed,
        // check if it can be snapped to a snap-target
        if (!(shape_modified = linkToSnapTarget(aHippodrome, aGraphics,
                                                aEditMode, aGXYContext))) {
          // ...
        }
        break;
    }
  }
  // ...
  return shape_modified;
}
```

Program: Snapping to a snap target (from samples/gxy/hippodromePainter/GXYHippodromePainter)

```java
private boolean linkToSnapTarget(IHippodrome aHippodrome,
                                 Graphics aGraphics,
                                 int aEditMode,
                                 ILcdGXYContext aGXYContext) {
  boolean snapped_to_target = false;
  // test if the snap target returned by aGXYContext can be accepted by aHippodrome; in other words,
  // can aHippodrome snap to it
  if (acceptSnapTarget(aGraphics, aGXYContext)) {
    // if it is possible, retrieve which reference-point is touched, so we know which
    // point needs to be moved to that snap-target
    ILcdPoint snap_point = (ILcdPoint) aGXYContext.getSnapTarget();
    ILcdGXYLayer hippodrome_layer = aGXYContext.getGXYLayer();
    ILcdGXYLayer snap_layer = aGXYContext.getSnapTargetLayer();
    // which point is touched? acceptSnapTarget already ensures that either
    // the start or the end point is touched.
    // Since checking whether an object is touched is a functionality of the painter,
    // the edit mode has to be converted to a rendering mode.
    int renderingMode = convertToRenderingMode(aEditMode);
    int touched_status = retrieveTouchedStatus(aGXYContext, renderingMode, aHippodrome);
    int point_to_move = IHippodrome.START_POINT;
    if (touched_status == END_POINT) {
      point_to_move = IHippodrome.END_POINT;
    }
    // if the snap target is in the same layer or has the same model-references, just move the point
    // of the hippodrome
    if ((snap_layer == hippodrome_layer) ||
        (snap_layer.getModel().getModelReference().equals(
            hippodrome_layer.getModel().getModelReference()))) {
      aHippodrome.moveReferencePoint(snap_point, point_to_move);
      snapped_to_target = true;
    } else {
      try {
        // acceptSnapTarget is called, so the transformation is setup correctly.
        // fTempModelPoint is moved to the location of the snap_point
        fModelReference2ModelReference.setDestinationReference(
            snap_layer.getModel().getModelReference());
        fModelReference2ModelReference.setSourceReference(
            hippodrome_layer.getModel().getModelReference());
        fModelReference2ModelReference.destinationPoint2sourceSFCT(snap_point, fTempModelPoint);
        // move the touched reference-point to the snap-target
        aHippodrome.moveReferencePoint(fTempModelPoint, point_to_move);
        snapped_to_target = true;
      } catch (TLcdOutOfBoundsException e) {
        snapped_to_target = false;
      }
    }
  }
  return snapped_to_target;
}
```