As a developer or system administrator who deploys Rich Internet Applications (RIA), you want to ensure a fast and responsive user experience. This guide discusses several development and deployment strategies for improving application performance. It addresses common performance bottlenecks, such as file download time and the decoding and rendering of features.

- Data Preparation shows how careful data preparation positively affects data size and performance.
- Data Caching highlights your caching options when you exchange data in a web application context.
- Serving data discusses how server-side technologies and configuration settings can speed up download times and reduce network load.
- LuciadRIA API Features illustrates specific features of LuciadRIA that help you design responsive maps for the visualization of large amounts of data.

Data Preparation

Application-driven data tuning

For web applications, try to model your data in a way that is tailored to your application. This means that you strip all redundant information from the source data. Removing unnecessary information can significantly reduce the amount of data exchanged between the server and the web browser.

Offline processing

If your data does not change often, you can pre-process it offline and update the result at regular intervals.

Both the LuciadFusion product and JavaScript offer data processing capabilities:

- Using LuciadFusion

  The com.luciad.datamodel package contains functions for processing GIS data, in particular for data decoded through Luciad models. For more details about using LuciadFusion for data modeling, see the LuciadFusion documentation.

- Using JavaScript

  JavaScript is an excellent scripting language for quickly processing data stored in text files, particularly in formats like JSON. With JavaScript runtime environments like Node.js or Rhino, you can run these scripts outside the browser.
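As a minimal sketch of such an offline processing step, the function below keeps only the properties an application needs from a GeoJSON feature collection. The interfaces, the sample data, and the function name `stripProperties` are hypothetical and only serve the illustration.

```typescript
// Minimal GeoJSON shapes for this sketch; real data may carry more members.
interface SketchFeature {
  type: "Feature";
  id?: string | number;
  geometry: unknown;
  properties: Record<string, unknown> | null;
}

interface SketchFeatureCollection {
  type: "FeatureCollection";
  features: SketchFeature[];
}

/**
 * Returns a copy of the collection in which every feature keeps only the
 * properties listed in `keep`. The input collection is not modified.
 */
function stripProperties(
  collection: SketchFeatureCollection,
  keep: string[]
): SketchFeatureCollection {
  return {
    type: "FeatureCollection",
    features: collection.features.map((feature) => {
      const slim: Record<string, unknown> = {};
      for (const key of keep) {
        if (feature.properties && key in feature.properties) {
          slim[key] = feature.properties[key];
        }
      }
      return { ...feature, properties: slim };
    }),
  };
}

// Hypothetical sample data: only "label" is needed by the application.
const sourceData: SketchFeatureCollection = {
  type: "FeatureCollection",
  features: [
    {
      type: "Feature",
      geometry: { type: "Point", coordinates: [4.5, 50.5] },
      properties: { label: "Alpha", internalCode: "X-17", lastEditor: "someone" },
    },
  ],
};

const slimData = stripProperties(sourceData, ["label"]);
console.log(JSON.stringify(slimData));
```

In a Node.js script, you would typically read the source file, pass the parsed JSON through such a function, and write the result to the location your web server publishes.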

Online processing

For data that changes frequently, it is often more convenient to process the data on-the-fly. Typically, a web service acts as an intermediary between the data source, files on the file system or a database for example, and the client web application. This middle tier is a good place to optimize the data before sending it over the network.

- OGC Web Feature Service (WFS)

  The OGC WFS is an example of a web service with a protocol that supports the filtering of data. Clients can use the propertyName parameter to limit the properties returned from the web service. See the documentation of the luciad.model.store.WFSFeatureStore module to find out how to set that up with LuciadRIA.

- Custom web service

  When you implement a custom web service, you may want to support a similar filtering functionality. LuciadRIA includes a gazetteer web service in the samples that supports such property filtering. See Program: The gazetteer web service only retrieves the featureName and featureClass property for an example.

Program: The gazetteer web service only retrieves the featureName and featureClass property (from samples/cop/components/GazetteerControl.tsx)

    class GazetteerQueryProvider extends QueryProvider {
      private readonly _type: string;

      constructor(type: string) {
        super();
        this._type = type;
      }

      getQueryLevelScales(layer: FeatureLayer, map: Map) {
        return [];
      }

      getQueryForLevel(level: number) {
        return {
          "class": this._type,
          // Only the listed properties are requested from the web service.
          fields: "featureName,featureClass,countyName",
          limit: 500,
          minX: BOUNDS.x,
          maxX: BOUNDS.x + BOUNDS.width,
          minY: BOUNDS.y,
          maxY: BOUNDS.y + BOUNDS.height
        };
      }
    }
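For the WFS case, the property filtering described above comes down to one extra request parameter. The sketch below builds a WFS 2.0 GetFeature request in key-value-pair encoding with plain URL handling. It does not use the LuciadRIA WFSFeatureStore, and the service URL, feature type name, and property names are hypothetical.

```typescript
/**
 * Builds a WFS 2.0 GetFeature URL that asks the server to return only the
 * listed properties. WFS 1.1.0 servers expect "typeName" instead of
 * "typeNames".
 */
function buildGetFeatureUrl(
  serviceUrl: string,
  typeName: string,
  propertyNames: string[]
): string {
  const url = new URL(serviceUrl);
  url.searchParams.set("service", "WFS");
  url.searchParams.set("version", "2.0.0");
  url.searchParams.set("request", "GetFeature");
  url.searchParams.set("typeNames", typeName);
  // A comma-separated list limits the properties in the response.
  url.searchParams.set("propertyName", propertyNames.join(","));
  return url.toString();
}

// Hypothetical endpoint and feature type.
const getFeatureUrl = buildGetFeatureUrl("https://example.com/wfs", "cities", [
  "cityName",
  "population",
]);
console.log(getFeatureUrl);
```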

Choosing a data transport format

The choice of data format has a direct impact on the size of the server response. XML-based formats such as GML are verbose: the required opening and closing tags add a lot of meta-text. That’s why they are not ideally suited to support RIA apps.

JSON-based formats, such as GeoJSON, provide a better trade-off between file size and human readability, which comes in handy during development and debugging. Other, more customized formats are also possible, CSV for example. They might even result in smaller file sizes.
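To get a feeling for these differences on your own data, you can serialize the same records in several formats and compare the byte counts. The following sketch does this for a few made-up point records; the records and the CSV column layout are assumptions for the illustration.

```typescript
// Hypothetical sample records: a label, a position, and one attribute.
const cityRecords = [
  { city: "Alpha", lon: 4.5, lat: 50.5, population: 120000 },
  { city: "Beta", lon: 5.25, lat: 51.0, population: 45000 },
  { city: "Gamma", lon: 3.75, lat: 50.75, population: 8000 },
];

// The same records as a GeoJSON feature collection.
const cityCollection = {
  type: "FeatureCollection",
  features: cityRecords.map((record) => ({
    type: "Feature",
    geometry: { type: "Point", coordinates: [record.lon, record.lat] },
    properties: { city: record.city, population: record.population },
  })),
};

// Human-readable GeoJSON (two-space indentation) versus minified GeoJSON.
const prettyGeoJson = JSON.stringify(cityCollection, null, 2);
const minifiedGeoJson = JSON.stringify(cityCollection);

// A custom CSV layout with one header line and one line per record.
const csvText = [
  "city,lon,lat,population",
  ...cityRecords.map((r) => `${r.city},${r.lon},${r.lat},${r.population}`),
].join("\n");

// Size in bytes of the UTF-8 encoded text, as it would travel over the network.
const byteLength = (text: string): number => new TextEncoder().encode(text).length;

console.log({
  prettyGeoJson: byteLength(prettyGeoJson),
  minifiedGeoJson: byteLength(minifiedGeoJson),
  csv: byteLength(csvText),
});
```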

Keep in mind that most data compression algorithms already do a fine job of removing inherent redundancies from the data, and can already reduce file size significantly. For the details, see Compressing data. That is why careful data modeling and web caching setup may be a better use of your time than the development of custom or proprietary data formats. A good data model and web cache setup typically yield a better result for the reduction of network traffic and the improvement of the user experience. For more information about data modeling and web caching, see Application-driven data tuning and Data Caching respectively.

Data Caching

You can significantly increase download performance by setting up web caches that bring the data closer to your users. You can set up such web caches by appropriately configuring the supporting system infrastructure.

The HTTP protocol describes a standard means of exchanging information about the freshness and validity of resources, also referred to as 'representations'. The information is exchanged in the request and response headers between client and server.

Servers can add HTTP headers to their responses with information about the expiration characteristics of the representation they are sending back to a client. From their end, clients can add conditional statements to their requests to instruct servers to only return a representation if it satisfies certain conditions. These are predicates about the validity of a representation.

By combining these capabilities, web cache infrastructures can duplicate representations on multiple nodes on the network. As a result, lag is reduced and the end user experience improves, while the validity checks protect users from receiving stale information. Choosing a web caching strategy highlights the caching mechanisms supported by the HTTP protocol, and therefore by the browsers and servers that implement the HTTP specification. Caching locations outlines at which network locations caching can occur.

Choosing a web caching strategy

This section gives a high-level overview of the function of the cache control header, as well as the web caching mechanisms defined by the HTTP 1.1 specification. Caching is a fairly complex area of expertise, so this overview is by no means complete. For a detailed description, please refer to the specification.

The HTTP specification mentions two types of caching mechanisms: expiration-based caching and validation-based caching. These caching models are controlled by a number of HTTP headers in HTTP requests as well as responses. Web servers typically allow administrators to configure caching according to the specification. Contemporary web browsers adhere to the directives in these headers.

Use the cache control header

Regardless of the caching mechanism used, the server can dictate caching behavior to the client using the Cache-Control HTTP header. When clients cache representations, they must obey the cache control instructions given by the server.

Expiration-based caching

In the expiration-based caching model, the server associates an expiration date with a data representation that it is hosting.

This caching model is usually preferred, because no communication takes place between the client and the server as long as a representation is still valid.

The server can set the Expires header to tell the client that caches the representation how long it should consider the representation fresh.

As long as the representation has not expired, the user agent can use the local copy.
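The headers involved in expiration-based caching can be sketched as follows. The function computes a Cache-Control and an Expires header for a given lifetime. The function name and the one-hour lifetime are hypothetical; in practice you usually configure these headers in your web server rather than in application code.

```typescript
/**
 * Returns response headers that mark a representation as fresh for
 * `maxAgeSeconds`, counted from `now`.
 */
function expirationHeaders(
  maxAgeSeconds: number,
  now: Date = new Date()
): Record<string, string> {
  const expires = new Date(now.getTime() + maxAgeSeconds * 1000);
  return {
    // HTTP 1.1 caches give max-age precedence over Expires when both are present.
    "Cache-Control": `public, max-age=${maxAgeSeconds}`,
    // Expires carries an HTTP date, which Date.prototype.toUTCString() produces.
    "Expires": expires.toUTCString(),
  };
}

// A representation served at midnight UTC on 1 January 2024, fresh for one hour.
const oneHourHeaders = expirationHeaders(3600, new Date(Date.UTC(2024, 0, 1)));
console.log(oneHourHeaders);
```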

Validation-based caching

A second model of caching is validation-based caching. Validation can be based on time-related criteria, or can be based on entity tags. In both cases, the client contacts the server to verify if its local representation is still valid.

- In the case of time-based validation caching, the client contacts the server to verify if its cached copy is still valid: it sends a request for the resource with the date on which it last retrieved that resource. If the representation has not been modified on the server since the specified time, the server can respond with 304 Not Modified. This is known as a conditional GET. Although the server is contacted, the amount of exchanged data is kept to a minimum.
- In the case of entity-tag-based validation caching, the server associates entity tags, or ETags, with a resource. Each ETag marks the resource for a particular time frame. When the server sends this representation to a client, it sends along this tag. Later, to verify whether it is still caching a valid copy of the resource, the client sends a conditional GET request to the server with this ETag. If the tag matches, the server replies with a 304 Not Modified response. Otherwise, it replies with an updated representation.
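The server-side decision in both validation models can be sketched as a small function. It assumes that the client passes its validators in the standard If-None-Match and If-Modified-Since request headers, and it derives the ETag from a hash of the body, which is one common choice rather than a requirement. The type and function names are hypothetical, and the sketch ignores tag lists and weak tags.

```typescript
import { createHash } from "node:crypto";

interface Representation {
  body: string;
  lastModified: Date;
}

interface ConditionalHeaders {
  ifNoneMatch?: string;
  ifModifiedSince?: string;
}

/** Derives an entity tag from the content, so that it changes whenever the body changes. */
function computeETag(body: string): string {
  return `"${createHash("sha1").update(body).digest("hex")}"`;
}

/** Decides whether a conditional GET can be answered with 304 Not Modified. */
function validate(
  representation: Representation,
  headers: ConditionalHeaders
): { status: 200 | 304; etag: string } {
  const etag = computeETag(representation.body);

  // Entity-tag validation takes precedence when the client sends a tag.
  if (headers.ifNoneMatch !== undefined) {
    return { status: headers.ifNoneMatch === etag ? 304 : 200, etag };
  }

  // Time-based validation: compare at the one-second resolution of HTTP dates.
  if (headers.ifModifiedSince !== undefined) {
    const since = Date.parse(headers.ifModifiedSince);
    const modified = Math.floor(representation.lastModified.getTime() / 1000) * 1000;
    if (!Number.isNaN(since) && modified <= since) {
      return { status: 304, etag };
    }
  }

  // No validator, or the cached copy is outdated: send the full representation.
  return { status: 200, etag };
}

const citiesFile: Representation = {
  body: '{"type":"FeatureCollection","features":[]}',
  lastModified: new Date(Date.UTC(2024, 0, 1)),
};
console.log(validate(citiesFile, { ifNoneMatch: computeETag(citiesFile.body) }).status);
```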

Caching locations

Caching typically happens in two locations: in the web browser at the client side, and in the network.

Caching in the browser

All current web browsers cache data locally. Web browsers implement the HTTP caching mechanisms and store data in the local file system. If your server annotates representations with appropriate cache headers, each user of your web app will immediately benefit from performance improvements when making repeated visits to your website.

The actual cache policies and the possible caching configuration options, such as cache size, vary between browsers.

Caching on the network

A good way of increasing performance is duplicating the data on nodes in the network — as close to the user as possible. This is usually a task for system administrators. No specific development with LuciadRIA is required to benefit from the performance gains.

To duplicate the data on network nodes, system administrators can use:

- Proxies

  Proxies function much like browser caches, and sit as an intermediary between the user and the server. Local area network (LAN) system administrators often deploy proxies to reduce network traffic and improve latency. In fact, most users on a LAN connect to the internet through a proxy. The benefit of proxies is that they can return cached representations to multiple users concurrently.

- Reverse proxies

  Reverse proxies are deployed by a web server administrator, and are primarily designed to reduce the load on the origin server, thereby improving performance. They intercept incoming requests, and either return a representation from the cache, or propagate the request to one or, in the case of load balancing, more origin servers. Users are generally not aware that they are connecting to a reverse proxy.

Serving data

Web administrators can also improve performance by enabling data compression, as explained in Compressing data, and by maintaining persistent connections on their web servers, as explained in Using persistent connections.

Compressing data

Most web servers support the use of data compression before a response is sent to the client.

Gzip is an example of a widely supported compressed data format.

In HTTP 1.1, clients can inform the server they know how to handle gzipped responses. Servers can then optionally compress their response in the gzip format, because they are certain that the response message will be understood. By default, all modern browsers are gzip-enabled.
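This negotiation relies on the Accept-Encoding request header and the Content-Encoding response header. The sketch below shows the decision a server makes, using the Node.js zlib module. The function names and the minimum size threshold are hypothetical; web servers normally offer this as a configuration setting, so you rarely need to write it yourself.

```typescript
import { gzipSync, gunzipSync } from "node:zlib";

// Hypothetical threshold: very small responses are sent uncompressed.
const MIN_GZIP_BYTES = 1024;

/** Checks whether the Accept-Encoding header lists gzip without refusing it (q=0). */
function acceptsGzip(acceptEncoding: string | undefined): boolean {
  if (!acceptEncoding) {
    return false;
  }
  return acceptEncoding.split(",").some((token) => {
    const [coding, ...params] = token.trim().toLowerCase().split(";");
    const quality = params.map((p) => p.trim()).find((p) => p.startsWith("q="));
    const refused = quality !== undefined && parseFloat(quality.slice(2)) === 0;
    return coding.trim() === "gzip" && !refused;
  });
}

/** Compresses the body only if the client understands gzip and the body is large enough. */
function encodeBody(
  body: string,
  acceptEncoding: string | undefined
): { body: Buffer; headers: Record<string, string> } {
  const raw = Buffer.from(body, "utf8");
  if (raw.length < MIN_GZIP_BYTES || !acceptsGzip(acceptEncoding)) {
    return { body: raw, headers: {} };
  }
  return {
    body: gzipSync(raw),
    // Vary tells caches that the response depends on the Accept-Encoding header.
    headers: { "Content-Encoding": "gzip", "Vary": "Accept-Encoding" },
  };
}

// Repetitive JSON, such as recurring property names, compresses well.
const sampleBody = JSON.stringify(
  Array.from({ length: 200 }, (_, i) => ({ featureName: `feature-${i}`, featureClass: "city" }))
);
const encoded = encodeBody(sampleBody, "gzip, deflate");
console.log(Buffer.byteLength(sampleBody), encoded.body.length, encoded.headers);
console.log(gunzipSync(encoded.body).toString("utf8") === sampleBody);
```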

Advantages

Table 1. Gzip comparison

| file name | GeoJSON, human readable | GeoJSON, minified | GeoJSON, human readable (gzip) | GeoJSON, minified (gzip) | CSV, uncompressed | CSV (gzip) |
|---|---|---|---|---|---|---|
| cities.json | 38.1 KB | 26.2 KB | 5 KB | 5 KB | 38.1 KB | 5 KB |
| world.json | 589 KB | 247. KB | 117 KB | 99 KB | 236 KB | 98 KB |
| worldLarge.json | 2.6 MB | 1.1 MB | 460 KB | 340 KB | 1 MB | 389 KB |

- It is format-agnostic. It is therefore an excellent general-purpose method to reduce network load.
- Gzip generally deals well with the removal of innate redundancy from a response. For example, the recurrence of property names or excess whitespace in JSON is dealt with efficiently.

Disadvantages

Table 2. Ajax download times in milliseconds for varying compression and network bandwidths

| Browser | Network | cities.json (text) | cities.json (zip) | worldLarge.json (text) | worldLarge.json (zip) |
|---|---|---|---|---|---|
| Firefox 20 | GbE | 15 | 17 | 136 | 173 |
| Firefox 20 | DSL | 2137 | 1296 | 51859 | 16037 |
| Chrome 36 | GbE | 4 | 4 | 35 | 112 |
| Chrome 36 | DSL | 1131 | 294 | 51486 | 16035 |
| Internet Explorer 10 | GbE | 4 | 5 | 264 | 307 |
| Internet Explorer 10 | DSL | 1121 | 285 | 51395 | 16031 |

- Reduction in file size is not guaranteed, especially for source files smaller than 10 KB, or for data with a very high information density. To deal with such issues, web server products usually allow you to configure a minimum file size for gzipping responses.

  Table 1, “Gzip comparison”, illustrates the effects of data compression on data that is formatted in GeoJSON and CSV. The impact of the data format on the data size is minimal when data is compressed. See Choosing a data transport format for more information about choosing a data transport format.

  In the case of GeoJSON, compression is slightly better when the data file was minified first. During minification, all unnecessary formatting is removed and all data is concatenated to a single line of text. Although this is highly data-dependent, the GeoJSON representation even compresses better than its CSV equivalent in the case of the large data file worldLarge.

- There is a performance impact on the server, because the message needs to be compressed, and on the client, because the message needs to be decompressed. Hence, the decision to use data compression depends on the nature of the environment in which the web application will be consumed. On slower networks, the internet for example, enabling data compression on your web server or on a reverse proxy yields better results, despite the added performance cost of compression and decompression.

  This is shown in Table 2, “Ajax download times in milliseconds for varying compression and network bandwidths”. On a Gigabit Ethernet network, gzipping the data files before transmitting them results in a slower download. Only on slower network links, such as a DSL line, does gzipping result in a faster download, because the download time is significantly larger than the overhead of compressing and decompressing the data.

Using persistent connections

Persistent connections are a feature of the HTTP protocol. They allow clients and servers to maintain a TCP/IP connection, even after the end of a request/response cycle.

Browsers use them to keep a connection to a server open for a short time (keep-alive). A few seconds is generally sufficient to retrieve most resources required for a web page over that single connection.

On the other hand, a server can signal to a client that it should close a connection.

Advantages

Download performance improves because client and server do not need to reopen and close the connection for every single request. Since most web server products are configured out-of-the-box to respect these keep-alive requests from the client, little additional configuration is required to benefit from this performance improvement.

Disadvantages

On high-bandwidth networks, or for applications with large user bases, it might be beneficial to turn off persistent connections. If there are many simultaneous requests, a server can run out of resources to handle all these concurrent connections.

Web server products allow administrators to configure how requests for a persistent connection from a client are handled: either by turning on and off persistent connections globally, or by setting some threshold for the maximal number of persistent connections.
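How you configure this depends on your web server product. As one illustration, assuming a server built on the Node.js http module, the sketch below limits how long idle persistent connections stay open and caps the number of simultaneous connections. Both values are hypothetical and need tuning for your environment.

```typescript
import { createServer } from "node:http";

const server = createServer((_request, response) => {
  response.writeHead(200, { "Content-Type": "text/plain" });
  response.end("ok");
});

// Close a persistent connection after it has been idle for 2 seconds
// (hypothetical value), so that idle clients do not hold on to resources.
server.keepAliveTimeout = 2_000;

// Refuse new connections once 200 are open at the same time (hypothetical value).
server.maxConnections = 200;

// Call server.listen(8080) to start serving; it is left out here so that the
// sketch only shows the configuration.
console.log(server.keepAliveTimeout, server.maxConnections);
```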

LuciadRIA API Features

The LuciadRIA API offers tools and strategies that allow you to build maps that remain responsive while visualizing large amounts of data.

Intelligent data retrieval and painting

Features in large datasets are often part of a natural hierarchy:

- A collection of roads ranging from highways and state roads to local byways
- A collection of world airports, ranging from large, global transportation hubs to small, recreational airfields
- A collection of streams ranging from major rivers to small creeks

In such datasets, it is usually not desirable to visualize all features with the same style. Especially when you are dealing with many features, such an approach would not just harm the legibility of the map, but also its performance.

Tuning the visualization

When you are implementing a view/feature/FeaturePainter, you can make use of such hierarchies in the data, by visualizing a feature differently depending on the scale level.

You do this by implementing the getDetailLevelScales() function of a Painter, so that it returns a list of the scales at which you want the representation of features to change.

This is illustrated by Program: Returning a list of scale range delimiters.

The painter defines two scale ranges.

The scale separation is configured at scale 1/37800, which means that 1 centimeter on the map equals 378 meters in the real world.

Program: Returning a list of scale range delimiters (from samples/cop/themes/BlueForcesPainterFallback.ts)

    getDetailLevelScales() {
      // One delimiter splits the scale axis into two ranges: level 0 and level 1.
      return [1 / 37800];
    }

Retrieving data based on scale

Similar to tuning the visualization based on scale with a FeaturePainter, you can also tune the data retrieval from the server, by specifying how and when the FeatureLayer queries its FeatureModel.

For example, it is not necessary to retrieve highly detailed data from the server if the user has completely zoomed out the map.

A QueryProvider allows you to filter data based on scale, so that only relevant data is requested for a particular scale.

See Dealing with large feature data sets for more information and an example.
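The idea behind scale-based querying can be sketched without the LuciadRIA API: a list of scale delimiters splits the scale axis into levels, and each level maps to its own query. In LuciadRIA, the getQueryLevelScales and getQueryForLevel functions of a QueryProvider play these roles. The helper below is a conceptual illustration only, not LuciadRIA's implementation; the delimiters, the population thresholds, and the handling of a scale that falls exactly on a delimiter are assumptions.

```typescript
/**
 * Maps a map scale onto a level index, given scale delimiters in ascending
 * order. n delimiters define n + 1 levels; level 0 is the most zoomed-out range.
 */
function levelForScale(delimiters: number[], mapScale: number): number {
  let level = 0;
  for (const delimiter of delimiters) {
    if (mapScale >= delimiter) {
      level++;
    }
  }
  return level;
}

// Hypothetical delimiters, and one query parameter per level: the further the
// user zooms in, the smaller the cities that are requested from the server.
const queryScales = [1 / 5_000_000, 1 / 500_000];
const minimumPopulationPerLevel = [1_000_000, 100_000, 0];

function queryForScale(mapScale: number): { minPopulation: number } {
  const level = levelForScale(queryScales, mapScale);
  return { minPopulation: minimumPopulationPerLevel[level] };
}

console.log(queryForScale(1 / 10_000_000)); // zoomed out: large cities only
console.log(queryForScale(1 / 100_000)); // zoomed in: all cities
```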

Retrieving data based on extent

You can optimize performance by limiting the retrieval of model data to only what is visible in the view.

To achieve this, configure the FeatureLayer with the LoadSpatially data loading strategy.

This strategy ensures that only the data falling within the current view bounds is requested from the model.

This approach is especially useful when working with web services that support data retrieval using a bounding box.

The importance of stable Feature IDs

For best performance, the data service that the LoadSpatially strategy communicates with should return features with consistent and stable IDs.

Stable Feature IDs are critical in LuciadRIA as they allow the framework to efficiently update the view as you navigate the map.

LuciadRIA can avoid (re)styling features that are already on the map simply by checking Feature IDs that are already in the WorkingSet.

If the service does not provide intrinsic IDs, ensure that a mechanism is in place to generate a unique and stable ID for each feature.

For example, see the idProvider option in GeoJsonCodecConstructorOptions.
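One possible way to generate such an ID, sketched here under the assumption that the data is static, is to hash the content of each feature. The function names are hypothetical, and the exact signature expected by the idProvider option is not shown here; consult the API reference before wiring a function like this into the codec.

```typescript
import { createHash } from "node:crypto";

interface RawGeoJsonFeature {
  id?: string | number;
  geometry: unknown;
  properties: Record<string, unknown> | null;
}

/** Serializes a value with object keys in sorted order, so equal content yields equal text. */
function canonicalJson(value: unknown): string {
  if (Array.isArray(value)) {
    return `[${value.map(canonicalJson).join(",")}]`;
  }
  if (value !== null && typeof value === "object") {
    const record = value as Record<string, unknown>;
    const entries = Object.keys(record)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${canonicalJson(record[key])}`);
    return `{${entries.join(",")}}`;
  }
  return JSON.stringify(value) ?? "null";
}

/**
 * Returns the feature's own ID when the service provides one. Otherwise it
 * derives an ID from the feature content, so that the same feature gets the
 * same ID in every response.
 */
function stableFeatureId(feature: RawGeoJsonFeature): string | number {
  if (feature.id !== undefined) {
    return feature.id;
  }
  const content = canonicalJson({
    geometry: feature.geometry,
    properties: feature.properties,
  });
  return createHash("sha1").update(content).digest("hex");
}

const sampleFeature: RawGeoJsonFeature = {
  geometry: { type: "Point", coordinates: [4.5, 50.5] },
  properties: { label: "Alpha", population: 120000 },
};
console.log(stableFeatureId(sampleFeature));
```

A content-based ID changes whenever the geometry or the properties change, so this approach only suits data that is not edited. When the data has a natural key, such as an official code, that key is the better choice.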

Simplifying representations

Simplifying the representation of a feature at smaller scales is useful when the actual representation is quite complex or performance-intensive. In Program: Change the representation of the icon based on the scale level, for example, different icons are displayed at different scale levels. A simple, round icon is used for the first scale level of 0, when the map is zoomed out. For the second scale level of 1, with the map zoomed in, a larger, rectangular icon is used.

Program: Change the representation of the icon based on the scale level (from samples/cop/themes/BlueForcesPainterFallback.ts)

    paintBody(geoCanvas: GeoCanvas, feature: Feature, shape: Shape, layer: Layer, map: Map, state: PaintState) {
      if (state.level === 0) {
        // Zoomed out: draw a small, simple circle.
        if (state.selected) {
          geoCanvas.drawIcon(shape, {
            image: smallRedCircle
          });
        } else {
          geoCanvas.drawIcon(shape, {
            image: smallBlueCircle
          });
        }
      } else {
        // Zoomed in: draw a larger, rectangular icon.
        if (state.selected) {
          geoCanvas.drawIcon(shape, {
            image: bigRedRectangle
          });
        } else {
          geoCanvas.drawIcon(shape, {
            image: bigBlueRectangle
          });
        }
      }
    }

Omitting features

Omitting the representation of a feature depending on the scale level is useful when the feature is too small or irrelevant at certain scales.

In Program: paintBody implementation of a painter. Features are omitted based on scale level, features are skipped based on scale level.

At small scales, only large roads, such as interstates, are painted.

At larger scales, all roads are painted.

Program: paintBody implementation of a painter. Features are omitted based on scale level.

    const selectedColor = 'rgb(255,0,0)';
    const painter = new FeaturePainter();

    painter.paintBody = function(geoCanvas, feature, shape, layer, map, state) {
      const name = (feature.properties as any).NAME;

      // Interstates are painted at every scale level.
      if (state.level >= 0 && name.indexOf('Interstate') >= 0) {
        const interstateLineWidths = [1, 1.3, 1.9, 1.9, 3.2, 5.0];
        geoCanvas.drawShape(shape, {
          stroke: {
            width: interstateLineWidths[state.level],
            color: state.selected ? selectedColor : 'rgb(177, 122, 70)'
          },
          zOrder: 4
        });
        geoCanvas.drawShape(shape, {
          stroke: {
            width: interstateLineWidths[state.level],
            color: state.selected ? selectedColor : 'rgb(255, 175, 100)'
          },
          zOrder: 5
        });
      }

      // US routes are omitted at level 0.
      if (state.level >= 1 && (name.indexOf('US Route') >= 0)) {
        const usRouteLineWidths = [0, 1, 1.3, 1.9, 3.2, 5.0];
        geoCanvas.drawShape(shape, {
          stroke: {
            width: usRouteLineWidths[state.level],
            color: state.selected ? selectedColor : 'rgb(177, 161, 84)'
          },
          zOrder: 2
        });
        geoCanvas.drawShape(shape, {
          stroke: {
            width: usRouteLineWidths[state.level],
            color: state.selected ? selectedColor : 'rgb(255, 230, 120)'
          },
          zOrder: 3
        });
      }

      // State routes are omitted at levels 0 and 1.
      if (state.level >= 2 && (name.indexOf('State Route') >= 0)) {
        const stateRouteLineWidths = [0, 0, 1, 1.3, 2.5, 5.0];
        geoCanvas.drawShape(shape, {
          stroke: {
            width: stateRouteLineWidths[state.level],
            color: state.selected ? selectedColor : 'rgb(177, 177, 140)'
          },
          zOrder: 0
        });
        geoCanvas.drawShape(shape, {
          stroke: {
            width: stateRouteLineWidths[state.level],
            color: state.selected ? selectedColor : 'rgb(255, 255, 200)'
          },
          zOrder: 1
        });
      }
    };

Divide and conquer

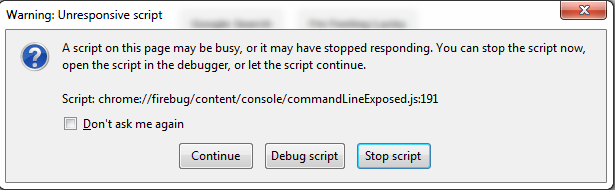

JavaScript execution in browsers is single-threaded. A possible consequence is that the browser appears to "hang" momentarily when a script runs for a long time. This happens because user input, such as mouse movements or keystrokes, is not processed while the script is running. Browsers may even display a slow script warning, as in Figure 1, “Slow script warning in Chrome”, prompting users to stop the execution of the script. Whether such a warning pops up or not is highly dependent on the environment the application is running in: for example, whether the website runs on a desktop or a mobile device, how many other applications are running on the machine, or how many tabs are open.

A cross-browser-compatible way to prevent long-running computations is to execute heavyweight processes in small blocks. Since large data sets require a lot of client-side processing, LuciadRIA offers developers two capabilities to enable such incremental processing:

- Incremental decoding
- Incremental rendering

Both techniques are useful to fine-tune an application, but there is a trade-off involved. Because processing is spread out over time, in favor of responsiveness, up-front load times of data increase.
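The general pattern behind both techniques can be sketched as follows: the work is split into small batches, and control returns to the browser between batches so that it can process user input and repaint. This is a generic illustration of the pattern, not LuciadRIA's implementation; the function name and the batch size are hypothetical.

```typescript
/**
 * Applies `handle` to every item, in batches of `batchSize`. After each batch,
 * the function yields to the event loop, so that pending input events and
 * repaints can run before the next batch starts.
 */
async function processInBatches<T>(
  items: readonly T[],
  batchSize: number,
  handle: (item: T) => void
): Promise<void> {
  if (batchSize < 1) {
    throw new RangeError("batchSize must be at least 1");
  }
  for (let start = 0; start < items.length; start += batchSize) {
    for (const item of items.slice(start, start + batchSize)) {
      handle(item);
    }
    // A zero-delay timer schedules the next batch after other pending tasks.
    await new Promise<void>((resolve) => setTimeout(resolve, 0));
  }
}

// Usage: process five values in batches of two.
const doubledValues: number[] = [];
const batchRun = processInBatches([1, 2, 3, 4, 5], 2, (value) => {
  doubledValues.push(value * 2);
});
batchRun.then(() => console.log(doubledValues));
```

The trade-off mentioned above is visible in the sketch: every pause between batches adds to the total processing time, in exchange for a page that stays responsive.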

Incremental decoding

Instead of decoding all the features at once when a model is queried, the features are decoded in small batches. This happens automatically; no extra configuration is required.

Incremental rendering

The first time a layer renders a feature, the feature is projected and discretized into a geodetically correct representation. If a large number of features or complex features must be rendered on the screen, the calculation of the screen representation becomes very time-consuming.

Layers can be configured to render their contents to the screen incrementally.

This prevents slow script warnings.

Use the incrementalRendering option when constructing the FeatureLayer.

Caching versus computation

Just like pre-processing data on the server-side, as discussed in Offline processing, the caching of computed values on the client is a simple technique to improve performance.

Such caching is relevant in implementations of view/feature/Painter, for example, because they are called often.

It is nevertheless a good general-purpose technique that you can use in other parts of the application as well.

In Program: Computing an icon only once and re-using the result, the city icon is computed only once, and not re-computed for each feature.

The computation result is re-used each time an icon with the same dimensions is requested.

When caching computed values, you must always consider the trade-off between memory consumption and speed. Caching computed values will speed up your application, but will also increase its memory consumption. This is especially relevant in mobile web applications, where applications can easily run out of memory. A mobile web browser that suddenly terminates without warning is often an indication of memory problems.

Program: Computing an icon only once and re-using the result (from toolbox/ria/core/util/LayerFactory.ts)

    paintBody(geoCanvas: GeoCanvas, feature: Feature, shape: Shape, layer: Layer, map: Map, state: PaintState): void {
      let cssPixelSize = this.getIconSize(feature);
      if (state.hovered) {
        cssPixelSize *= 1.33;
      }
      const devicePixelSize = cssPixelSize * map.displayScale;

      // The cache key covers everything that influences the icon's appearance.
      const hash = devicePixelSize.toString() + state.selected + state.hovered;
      let icon = this._cache[hash];
      if (!icon) {
        // Cache miss: compute the icon once and store it for later paint calls.
        let stroke = "rgba(255,255,255,1.0)";
        let fill = "rgba(255,0,0,0.5)";
        let strokeWidth = 2 * map.displayScale;
        if (state.selected) {
          stroke = "rgba(0,0,255,1.0)";
          fill = "rgba(255,0,255,0.7)";
          strokeWidth = 3 * map.displayScale;
        }
        icon = createCircle({
          stroke,
          fill,
          strokeWidth,
          width: devicePixelSize,
          height: devicePixelSize,
        });
        this._cache[hash] = icon;
      }

      geoCanvas.drawIcon(shape.focusPoint!, {
        width: `${cssPixelSize}px`,
        height: `${cssPixelSize}px`,
        image: icon
      });
    }
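One way to keep the memory consumption of such a cache under control is to limit the number of entries. The sketch below is a small least-recently-used cache built on a Map. The class name and the size limit are hypothetical, and the sample painter above does not use it.

```typescript
/** A cache that holds at most `maxEntries` values and evicts the least recently used one. */
class BoundedCache<V> {
  private readonly entries = new Map<string, V>();
  private readonly maxEntries: number;

  constructor(maxEntries: number) {
    this.maxEntries = maxEntries;
  }

  /** Returns the cached value for `key`, computing and storing it on a miss. */
  getOrCreate(key: string, create: () => V): V {
    const cached = this.entries.get(key);
    if (cached !== undefined) {
      // Re-insert the entry to mark it as the most recently used one.
      this.entries.delete(key);
      this.entries.set(key, cached);
      return cached;
    }
    const value = create();
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      // A Map iterates in insertion order, so the first key is the least recently used.
      const oldest = this.entries.keys().next().value as string;
      this.entries.delete(oldest);
    }
    return value;
  }

  get size(): number {
    return this.entries.size;
  }
}

// Usage: a cache for at most two computed values.
const iconCache = new BoundedCache<string>(2);
console.log(iconCache.getOrCreate("16-selected", () => "icon for 16px, selected"));
```

A smaller limit saves memory but causes more recomputation, so the right value depends on how many distinct icons your application draws and on the devices you target.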